Humans and most other animals are known to be strongly driven by expected rewards or adverse consequences. The process of acquiring new skills or adjusting behaviors in response to positive outcomes is known as reinforcement learning (RL).

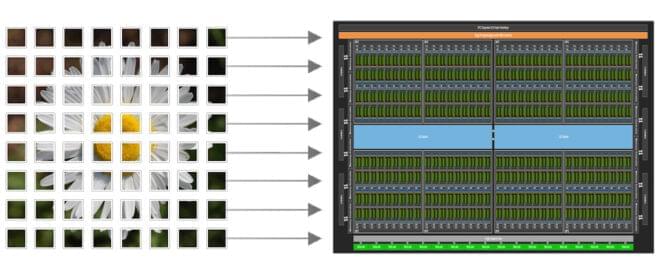

RL has been widely studied over the past decades and has even been adapted to train computational models, including some deep learning algorithms. Existing models of RL suggest that this type of learning is linked to dopaminergic pathways (i.e., neural pathways that respond to differences between expected and experienced outcomes).
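The idea of learning from the difference between expected and experienced outcomes can be illustrated with a textbook delta-rule update. This is only a minimal sketch of a generic prediction-error rule, not the model described in the paper discussed here; the function names `update_value` and `learn`, the learning rate, and the reward sequence are all illustrative assumptions.

```python
def update_value(expected, reward, learning_rate=0.1):
    """Return the updated expectation after observing one outcome.

    The prediction error is the gap between the experienced reward
    and the expected one; the expectation moves a fraction of the
    way toward the reward (hypothetical learning rate of 0.1).
    """
    prediction_error = reward - expected
    return expected + learning_rate * prediction_error


def learn(rewards, initial_value=0.0, learning_rate=0.1):
    """Apply the update to a sequence of rewards and record each estimate."""
    value = initial_value
    history = []
    for reward in rewards:
        value = update_value(value, reward, learning_rate)
        history.append(value)
    return history


if __name__ == "__main__":
    # Assumed toy scenario: the same action is rewarded on every trial.
    estimates = learn([1.0] * 50)
    print(f"estimate after 1 trial:   {estimates[0]:.3f}")
    print(f"estimate after 50 trials: {estimates[-1]:.3f}")
```

In this toy setting the estimate starts far from the true reward, so early prediction errors are large; as the expectation approaches the reward, the errors shrink and learning slows.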

Anne G. E. Collins, a researcher at the University of California, Berkeley, recently developed a new model of RL for situations in which people’s choices have uncertain, context-dependent outcomes and they must learn which actions lead to rewards. Her paper, published in Nature Human Behaviour, challenges the assumption that existing RL algorithms faithfully mirror psychological and neural mechanisms.