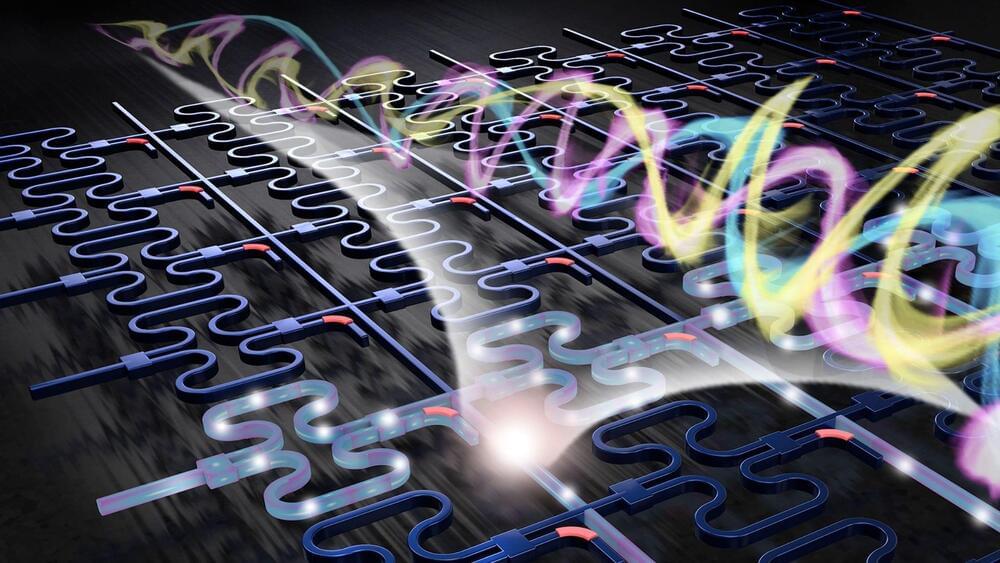

The team estimates that their hardware can outperform the best electronic processors by a factor of 100 in terms of energy efficiency and compute density.

A team of scientists from Oxford University, working with partners in Germany and elsewhere in the UK, has developed a new kind of AI hardware that uses light to process three-dimensional (3D) data. Built on integrated photonic-electronic chips, the hardware can perform complex calculations in parallel by encoding data across different wavelengths of light and different radio-frequency modulation signals. The team claims the hardware can boost data processing speed and efficiency for AI tasks by several orders of magnitude.

AI computing and processing power

The research, published today in the journal Nature Photonics, addresses the challenge of meeting the growing demand for computing power from modern AI applications. Conventional computer chips, which rely on electronics, are struggling to keep up with the pace of AI innovation, which requires processing power to double every 3.5 months. The team says that using light instead of electronics offers a new way of computing that can overcome this bottleneck.