John Carmack said it’s only a matter of time before AI achieves AGI, or “artificial general intelligence,” which would be a massive breakthrough.

The power to turn invisible, long a hallmark of science fiction and fantasy, would be a revolutionary technical breakthrough. Check out how scientists are turning the invisibility cloak into a reality.

Despite decades of innovation in fabrics with high-tech thermal properties that keep marathon runners cool or alpine hikers warm, there has never been a material that changes its insulating properties in response to the environment. Until now.

University of Maryland researchers have created a fabric that can automatically regulate the amount of heat that passes through it. When conditions are warm and moist, such as those near a sweating body, the fabric allows infrared radiation (heat) to pass through. When conditions become cooler and drier, the fabric reduces the heat that escapes. The development was reported in the February 8, 2019 issue of the journal Science.

The researchers created the fabric from specially engineered yarn coated with a conductive metal. Under hot, humid conditions, the strands of yarn compact and activate the coating, which changes the way the fabric interacts with infrared radiation. The researchers refer to this action as “gating” of infrared radiation: the fabric acts as a tunable blind that either transmits or blocks heat.

Google is rushing to take part in the sudden fervor for conversational AI, driven by the pervasive success of rival OpenAI’s ChatGPT. Bard, the company’s new AI experiment, aims to “combine the breadth of the world’s knowledge with the power, intelligence, and creativity of our large language models.” Not short on ambition, Google!

The model, service, or AI chatbot, however you wish to describe it, was announced in a blog post by CEO Sundar Pichai. He pointedly notes that Google recentered itself around AI some years back, and that the most influential concept in the field (the Transformer) was created by the company’s researchers in 2017.

“It’s a really exciting time to be working on these technologies as we translate deep research and breakthroughs into products that truly help people,” Pichai writes. While reading this, it’s hard not to wonder how Google managed to get leapfrogged so decisively by OpenAI, which is now synonymous with the technologies Google pioneered.

An innovative calculation provides a better way to think about our solar system.

According to scientific reports, this study relies on the DNA of the dodo, a flightless bird that lived on the island of Mauritius in the Indian Ocean until the late seventeenth century. Because the animal has been extinct for centuries, the effort may seem like a fantasy, but scientists stress that their quest is based on carefully studied steps.

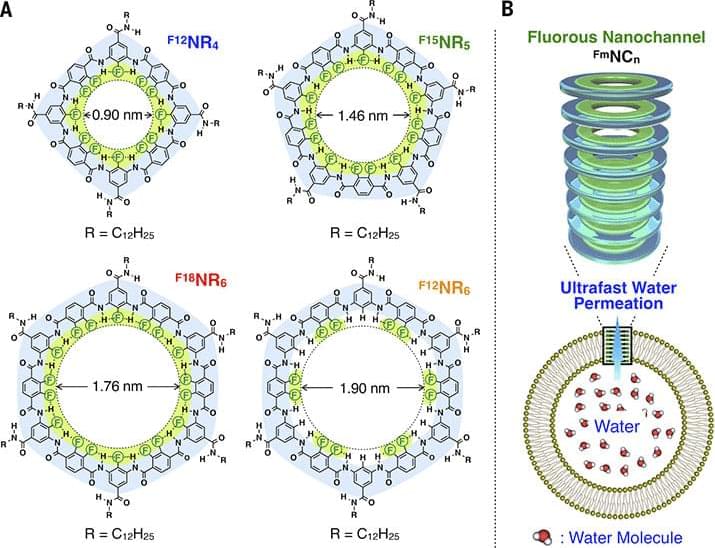

The key innovation in this new desalination technology is fluorine, a hydrophobic element that has long been prized for its desire to be left alone. It’s no accident that fluorine is a key ingredient in Teflon, which is used on non-stick pans to keep fried eggs from sticking and inside pipes to make fluids flow more efficiently. At the nanoscopic level, fluorine repels negatively charged ions, including the chloride ions in salt (NaCl). Its electric properties also break up clumps of water molecules that would otherwise keep the liquid from flowing freely. -(IE)

-Desalination is something people need to consider given rising sea levels and changing weather patterns that bring problems like drought.

Oligoamide nanoring-based fluorous nanochannels in bilayer membranes enable ultrafast water permeation and desalination.

Yann LeCun, Meta’s chief artificial intelligence (AI) scientist, shared his thoughts on ChatGPT.

ChatGPT has been making headlines worldwide, but not everyone is impressed. Yann LeCun, Meta’s chief artificial intelligence (AI) scientist, had some harsh words for the program in an hour-and-a-half talk hosted by the Collective Forecast, an online, interactive discussion series organized by Collective.

“In terms of underlying techniques, ChatGPT is not particularly innovative,” said LeCun on Zoom last week.


What exactly did he have to say? ZDNET attended the session and reported on it.