OpenAI o3 scores 75.7% on ARC-AGI public leaderboard.

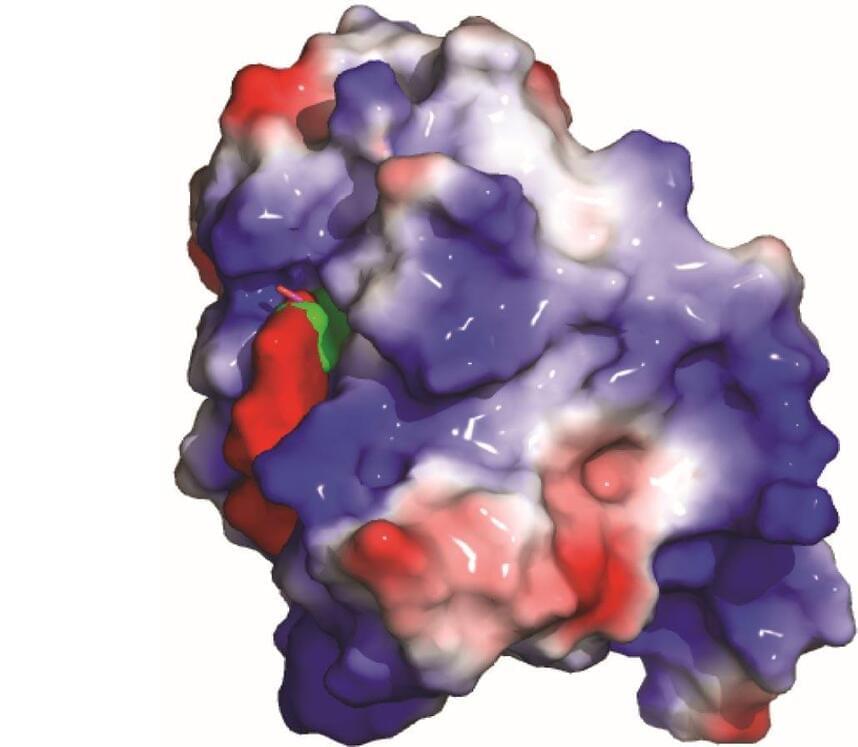

A breakthrough in understanding how a single-cell parasite makes ergosterol (its version of cholesterol) could lead to more effective drugs for human leishmaniasis, a parasitic disease that afflicts about 1 million people and kills about 30,000 people around the world every year.

The findings, reported in Nature Communications, also solve a decades-long scientific puzzle that’s prevented drugmakers from successfully using azole antifungal drugs to treat visceral leishmaniasis, or VL.

About 30 years ago, scientists discovered that the two species of single-cell parasites that cause VL, Leishmania donovani and Leishmania infantum, make the same sterol lipid, ergosterol, as fungi known to be susceptible to azole antifungals. These azole antifungals target CYP51, an enzyme crucial to sterol biosynthesis.

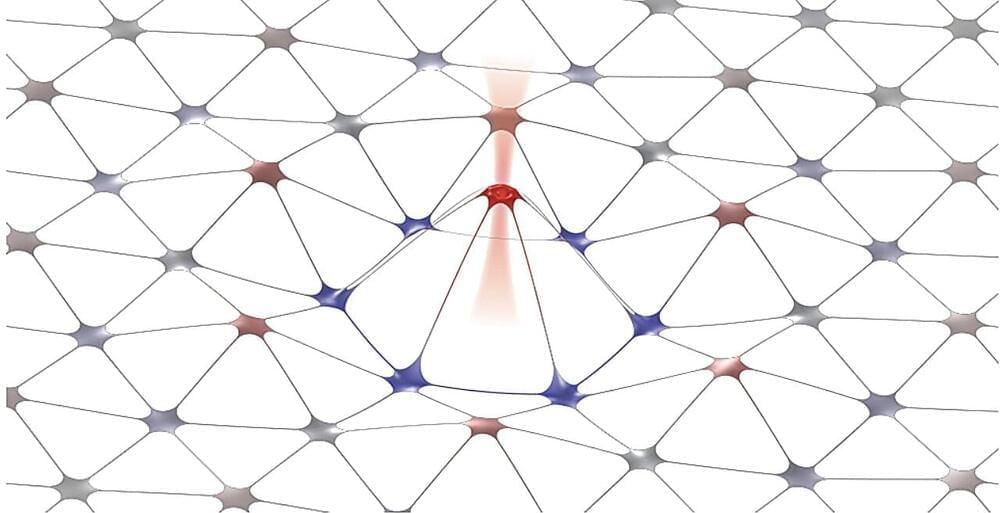

Mechanical crystals, also known as phononic crystals, are materials that control the propagation of vibrations or sound waves, much as photonic crystals control the flow of light. Introducing defects into these crystals (i.e., intentional disruptions of their periodic structure) can give rise to mechanical modes within the band gap, confining mechanical waves to small regions of the material, a feature that could be leveraged to create new technologies.
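A toy model makes the band-gap-plus-defect idea concrete. The sketch below uses plain NumPy, and every parameter in it is an illustrative assumption, not a value from the McGill study. It builds a one-dimensional ring of alternating heavy (M) and light (m) masses joined by identical springs (k), which has a band gap between ω² = 2k/M and ω² = 2k/m. Swapping one heavy mass for a light one acts as a defect and pulls a single, spatially localized mode into the gap:

```python
import numpy as np

HEAVY, LIGHT, K = 2.0, 1.0, 1.0   # illustrative masses and spring constant
N_CELLS = 40                       # unit cells in the ring (2 masses each)
GAP_LO, GAP_HI = 2 * K / HEAVY, 2 * K / LIGHT  # band-gap edges in omega^2


def ring_modes(masses, k=K):
    """Squared angular frequencies and mass-weighted mode shapes of a ring of
    masses joined by identical nearest-neighbour springs of stiffness k."""
    masses = np.asarray(masses, dtype=float)
    n = masses.size
    stiffness = np.zeros((n, n))
    for i in range(n):
        stiffness[i, i] = 2 * k
        stiffness[i, (i + 1) % n] = -k
        stiffness[i, (i - 1) % n] = -k
    # Symmetric dynamical matrix D = M^(-1/2) K M^(-1/2); its eigenvalues are omega^2.
    inv_sqrt_m = 1.0 / np.sqrt(masses)
    dynamical = inv_sqrt_m[:, None] * stiffness * inv_sqrt_m[None, :]
    return np.linalg.eigh(dynamical)


def gap_indices(omega_sq, tol=1e-6):
    """Indices of modes strictly inside the band gap (band edges excluded)."""
    return np.flatnonzero((omega_sq > GAP_LO + tol) & (omega_sq < GAP_HI - tol))


perfect = np.tile([HEAVY, LIGHT], N_CELLS)
defect = perfect.copy()
defect[0] = LIGHT  # the "defect": one heavy mass replaced by a light one

w2_perfect, _ = ring_modes(perfect)
w2_defect, shapes = ring_modes(defect)

print("gap modes, perfect crystal:", gap_indices(w2_perfect).size)
idx = gap_indices(w2_defect)
print("gap modes, with defect:", idx.size)

# Share of the gap mode's mass-weighted squared amplitude (proportional to its
# kinetic energy) that lies within 10 sites of the defect, measured around the ring.
n = defect.size
distance = np.minimum(np.arange(n), n - np.arange(n))
mode = shapes[:, idx[0]] ** 2
frac = mode[distance <= 10].sum() / mode.sum()
print("fraction near defect:", frac)
```

With these parameters the perfect ring has no modes inside the gap, while the ring with the defect has exactly one, concentrated around the altered mass. Periodic (ring) boundaries are chosen deliberately so that no edge modes appear in the gap and the defect is the only source of an in-gap mode.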

Researchers at McGill University recently realized a mechanical crystal with an optically programmable defect mode. Their paper, published in Physical Review Letters, introduces an approach for dynamically reprogramming mechanical systems that uses an optical spring to move a mechanical mode into the crystal’s band gap.
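To first order, an optical spring acts as an extra stiffness $k_{\text{opt}}$ in parallel with a mode's mechanical stiffness $k$. The single-mode relation below is a textbook idealization (effective mass $m$, optical damping and retardation neglected), not the model used in the paper. It shows how a positive optical spring raises a mode's frequency, which is how a mode sitting just below the gap could be pushed into it:

$$
\Omega_{\text{eff}} = \sqrt{\frac{k + k_{\text{opt}}}{m}} = \Omega_0\sqrt{1 + \frac{k_{\text{opt}}}{k}} \approx \Omega_0\left(1 + \frac{k_{\text{opt}}}{2k}\right), \qquad \Omega_0 = \sqrt{\frac{k}{m}}
$$

The sign of $k_{\text{opt}}$ depends on the laser's detuning from the cavity resonance, so the same mechanism can in principle also soften a mode and lower its frequency.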

“Some time ago, our group was thinking a lot about using an optical spring to partially levitate structures and improve their performance,” Jack C. Sankey, principal investigator and co-author of the paper, told Phys.org. “At the same time, we were watching the amazing breakthroughs in our field with mechanical devices that used the band gap of a phononic crystal to insulate mechanical systems from the noisy environment.”

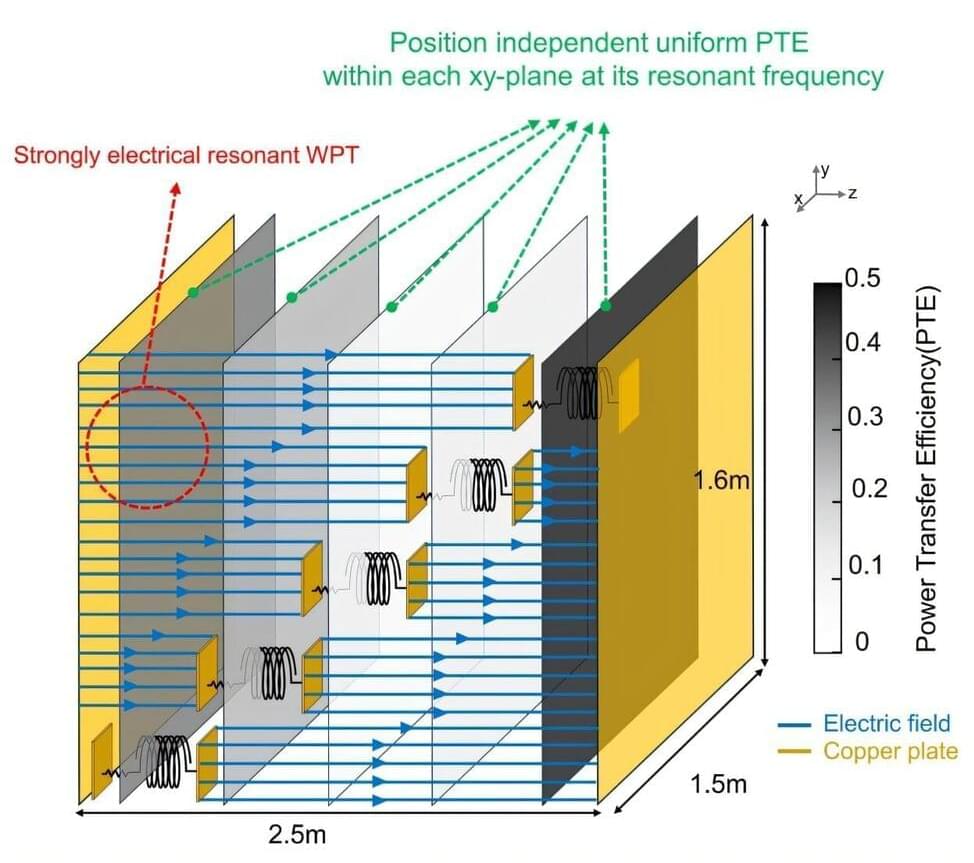

A new technology could let mobile phones and other electronic devices recharge simply by being kept in a pocket. The system enables wireless charging throughout three-dimensional (3D) spaces, including walls, floors, and air.

On December 12, Professor Franklin Bien and his research team in the Department of Electrical Engineering at UNIST announced an electric resonance-based wireless power transfer (ERWPT) system. The technology allows devices to charge virtually anywhere within a 3D environment, addressing longstanding limitations of conventional magnetic resonance wireless power transfer (MRWPT) by transmitting power efficiently without requiring precise device positioning.

The paper is published in the journal Advanced Science.

Scientists at Neuro-Electronics Research Flanders (NERF), led by Prof. Vincent Bonin, have published two studies that offer new insight into how the brain processes and distributes visual information. The studies challenge the conventional view that visual processing is straightforward, highlighting instead how intricate and adaptable the brain’s interpretation of sensory information is.

Scientists are developing nuclear clocks based on thin films of thorium tetrafluoride. Because the films are less radioactive and more cost-effective than earlier designs, they could transform precision timekeeping.

The technology, developed by a collaborative research team, could make nuclear clocks more accessible and scalable, allowing them to move beyond the laboratory into practical applications such as telecommunications and navigation.

Scientists have discovered a key protein that helps cancer cells evade the immune system during CAR T cell therapy, a type of advanced immunotherapy.

By creating a new drug that blocks this protein, researchers hope to make cancer treatments more effective, especially for hard-to-treat blood cancers. This breakthrough could lead to better survival rates and fewer relapses for patients.

Scientists at City of Hope, one of the leading cancer research and treatment centers in the U.S., have uncovered a key factor that allows cancer cells to evade CAR T cell therapy.

Researchers at NASA’s Armstrong Flight Research Center are developing an atmospheric probe for potential space missions.

Drawing on designs from past aircraft research, the team has successfully tested the probe and plans further improvements to expand its functionality and data-gathering capabilities.

The field of artificial intelligence (AI) has advanced rapidly in recent years, from breakthroughs in natural language processing to sophisticated robotics. Among these developments, multi-agent systems (MAS) have emerged as an approach to problems that single agents struggle to solve. Multi-agent collaboration harnesses the interactions between autonomous entities, or “agents,” to achieve shared or individual objectives. In this article, we explore one specific technique within multi-agent collaboration: role-based collaboration enhanced by prompt engineering. This approach is particularly well suited to practical tasks such as developing a software application.
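One way to picture role-based collaboration is a fixed team of agents that share one underlying model but receive different role-defining system prompts, each building on the previous agent’s output. The Python sketch below is a minimal illustration under that assumption, not any specific framework’s API: the role names, prompts, the `RoleAgent` class, `run_team`, and the `echo_llm` stand-in (used so the code runs offline) are all hypothetical. A real system would replace `echo_llm` with a call to an actual language model.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical model interface: (system_prompt, user_message) -> reply text.
LLM = Callable[[str, str], str]


@dataclass
class RoleAgent:
    name: str
    system_prompt: str  # the role, defined purely through prompt engineering

    def act(self, llm: LLM, task: str, context: str) -> str:
        # Each agent sees the shared task plus everything produced so far.
        user_msg = f"Task: {task}\n\nWork so far:\n{context or '(none)'}"
        return llm(self.system_prompt, user_msg)


def run_team(agents: List[RoleAgent], llm: LLM, task: str) -> List[Tuple[str, str]]:
    """Run agents in order, appending each output to the shared context."""
    transcript: List[Tuple[str, str]] = []
    context = ""
    for agent in agents:
        output = agent.act(llm, task, context)
        transcript.append((agent.name, output))
        context += f"\n[{agent.name}]\n{output}"
    return transcript


def echo_llm(system_prompt: str, user_msg: str) -> str:
    """Hypothetical offline stand-in for a real model call."""
    role = system_prompt.split(".")[0]
    seen = user_msg.count("\n[")  # number of earlier agent outputs in the context
    return f"{role}; saw {seen} earlier contribution(s)"


# Hypothetical software-development team: one role per prompt.
TEAM = [
    RoleAgent("product_manager", "You are a product manager. Turn the task into concise requirements."),
    RoleAgent("engineer", "You are a software engineer. Implement the requirements in code."),
    RoleAgent("reviewer", "You are a code reviewer. Check the code against the requirements."),
]

if __name__ == "__main__":
    for name, reply in run_team(TEAM, echo_llm, "Build a command-line to-do list app"):
        print(f"{name}: {reply}")
```

Running it prints one line per role, showing each agent receiving the work of the agents before it. A fixed sequential hand-off is the simplest orchestration to illustrate; it is a design choice for this sketch, not the only way to coordinate role-based agents.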

A China-based company, Ampace, has launched a new energy storage device aimed at what it calls the 18650-battery power challenge. The company claims its latest JP30 cylindrical lithium battery delivers high performance in a compact form.

Marketed under the slogan “Working Non-stop, compact and more powerful”, the new battery is the latest addition to the JP series.

Despite its compact, sleek design, the battery is claimed to offer ultra-high power output.