The quest for perfect connectivity has taken a giant leap forward.

If you use the web for more than just browsing (that’s pretty much everyone), chances are you’ve had your fair share of “CAPTCHA rage,” the frustration of trying to make out a barely legible string of letters meant to verify that you are human. CAPTCHA, which stands for “Completely Automated Public Turing test to tell Computers and Humans Apart,” was introduced to the Internet a decade ago and has seen widespread adoption in various forms (letters, sounds, math equations, or images), even as complaints about its use continue.

A large-scale Stanford study a few years ago concluded that “CAPTCHAs are often difficult for humans.” It has also been reported that around 1 in 5 visitors will leave a website rather than complete a CAPTCHA.

A longstanding belief is that the inconvenience of using CAPTCHAs is the price we all pay for having secured websites. But there’s no escaping that CAPTCHAs are becoming harder for humans and easier for artificial intelligence programs to solve.

From a new AI challenger to Google search to a nearly indestructible robot hand, check out this week’s awesome tech stories from around the web.

A quantum internet would essentially be unhackable. In the future, sensitive information—financial or national security data, for instance, as opposed to memes and cat pictures—would travel through such a network in parallel to a more traditional internet.

Of course, building and scaling systems for quantum communications is no easy task. Scientists have been steadily chipping away at the problem for years. A Harvard team recently took another noteworthy step in the right direction. In a paper published this week in Nature, the team says they’ve sent entangled photons between two quantum memory nodes 22 miles (35 kilometers) apart on existing fiber optic infrastructure under the busy streets of Boston.

“Showing that quantum network nodes can be entangled in the real-world environment of a very busy urban area is an important step toward practical networking between quantum computers,” Mikhail Lukin, who led the project and is a physics professor at Harvard, said in a press release.

Researchers aiming to create a secure quantum version of the internet need a device called a quantum repeater, which doesn’t yet exist — but now two teams say they are well on the way to building one.

By Alex Wilkins

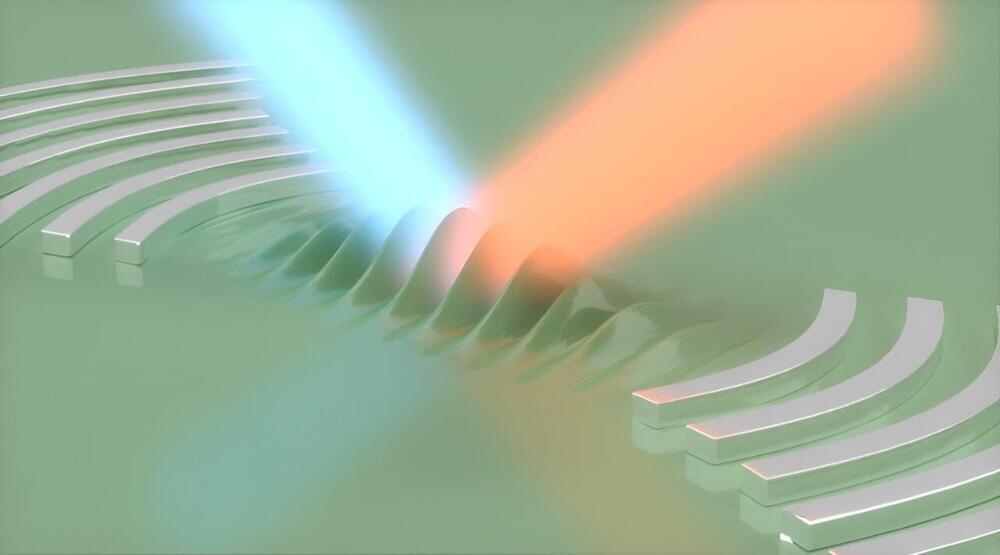

In a first for communications, researchers in Sweden 3D printed silica glass micro-optics on the tips of optic fibers—surfaces as small as the cross section of a human hair. The advance could enable faster internet and improved connectivity, as well as innovations like smaller sensors and imaging systems.

“Big machine learning models have to consume lots of power to crunch data and come out with the right parameters, whereas our model and training is so extremely simple that you could have systems learning on the fly,” said Robert Kent.

How can machine learning be made more efficient? A recent study published in Nature Communications takes up that question. A team of researchers from The Ohio State University investigated whether digital twins (digital copies of a system) can improve the machine learning-based controllers currently used in self-driving cars, which require large amounts of computing power and are often challenging to use. The study could help researchers better understand how future machine learning algorithms can deliver better control with greater efficiency.

“The problem with most machine learning-based controllers is that they use a lot of energy or power, and they take a long time to evaluate,” said Robert Kent, who is a graduate student in the Department of Physics at The Ohio State University and lead author of the study. “Developing traditional controllers for them has also been difficult because chaotic systems are extremely sensitive to small changes.”

For the study, the researchers created a fingertip-sized digital twin that can function without an internet connection, with the goal of improving the productivity and capabilities of a machine learning-based controller. In the end, they found that the controller’s power needs dropped thanks to a machine learning method known as reservoir computing, which reads in data and maps it to the target location. According to the researchers, the method can be used to simplify complex systems, including self-driving cars, while decreasing the amount of power and energy required to run them.