Most people envision vibration on a large scale, like the buzz of a cell phone notification or the oscillation of an electric toothbrush. But scientists think about vibration on a smaller scale—atomic, even.

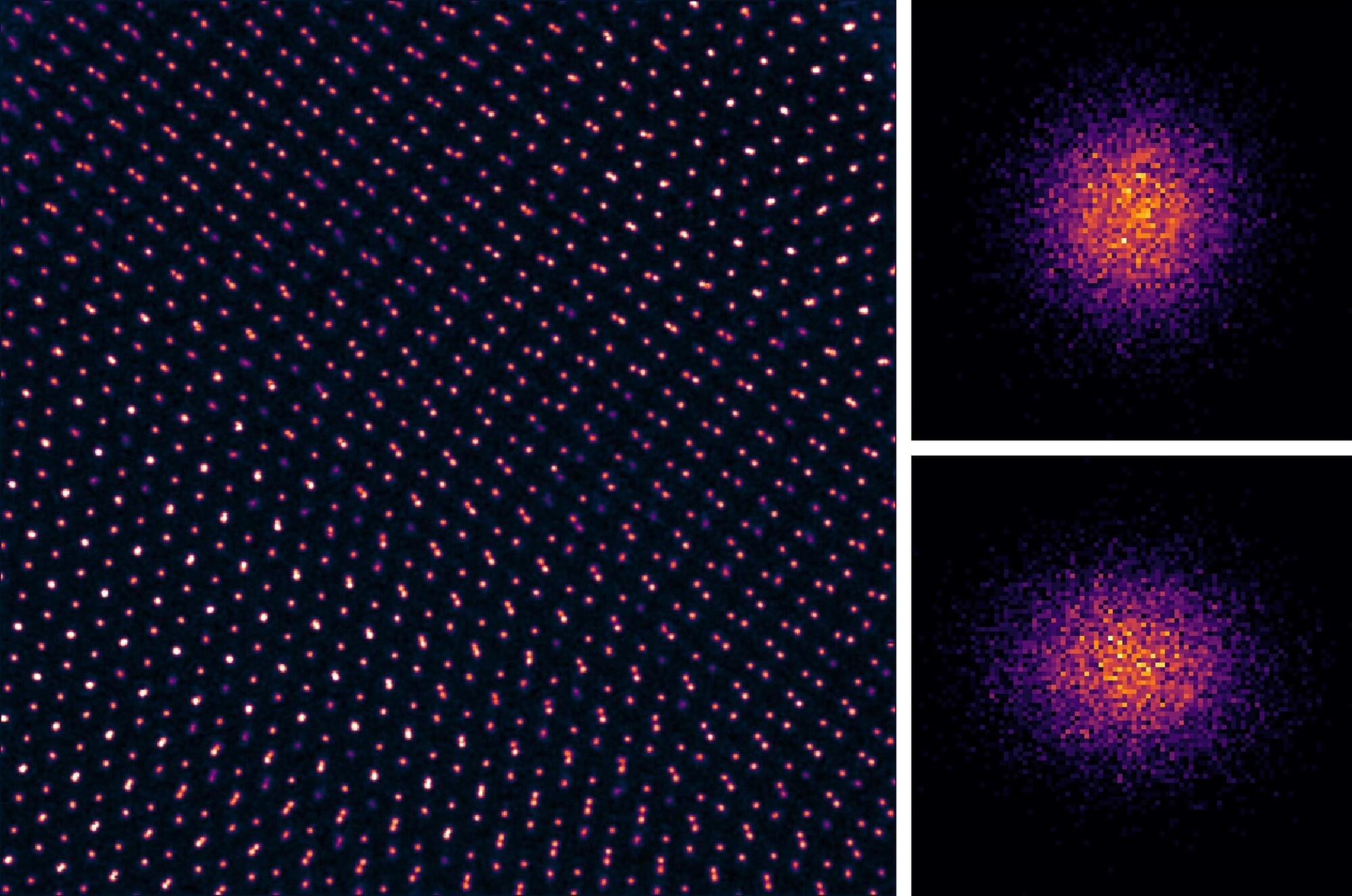

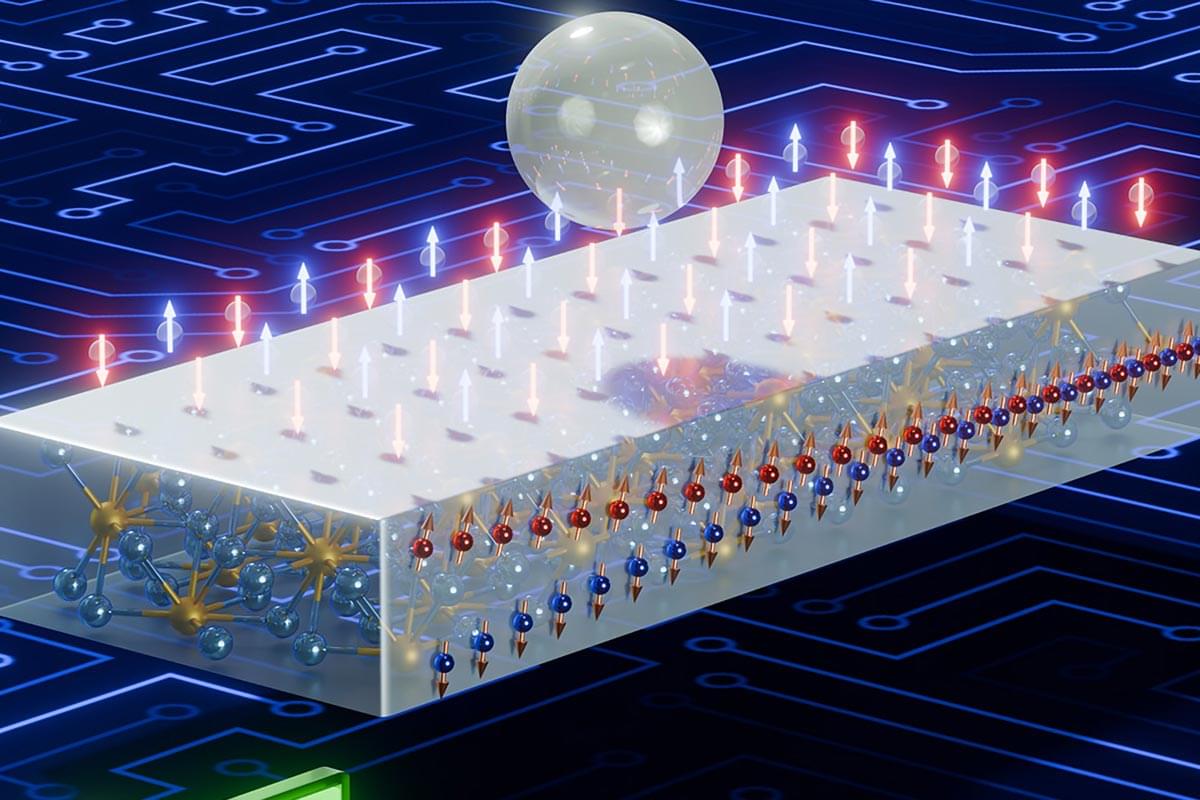

In a first for the field, researchers from The Grainger College of Engineering at the University of Illinois at Urbana-Champaign have used advanced imaging technology to directly observe a previously hidden branch of vibrational physics in 2D materials. Their findings, published in Science, confirm the existence of a previously unseen class of vibrational modes and present the highest-resolution images ever taken of a single atom.

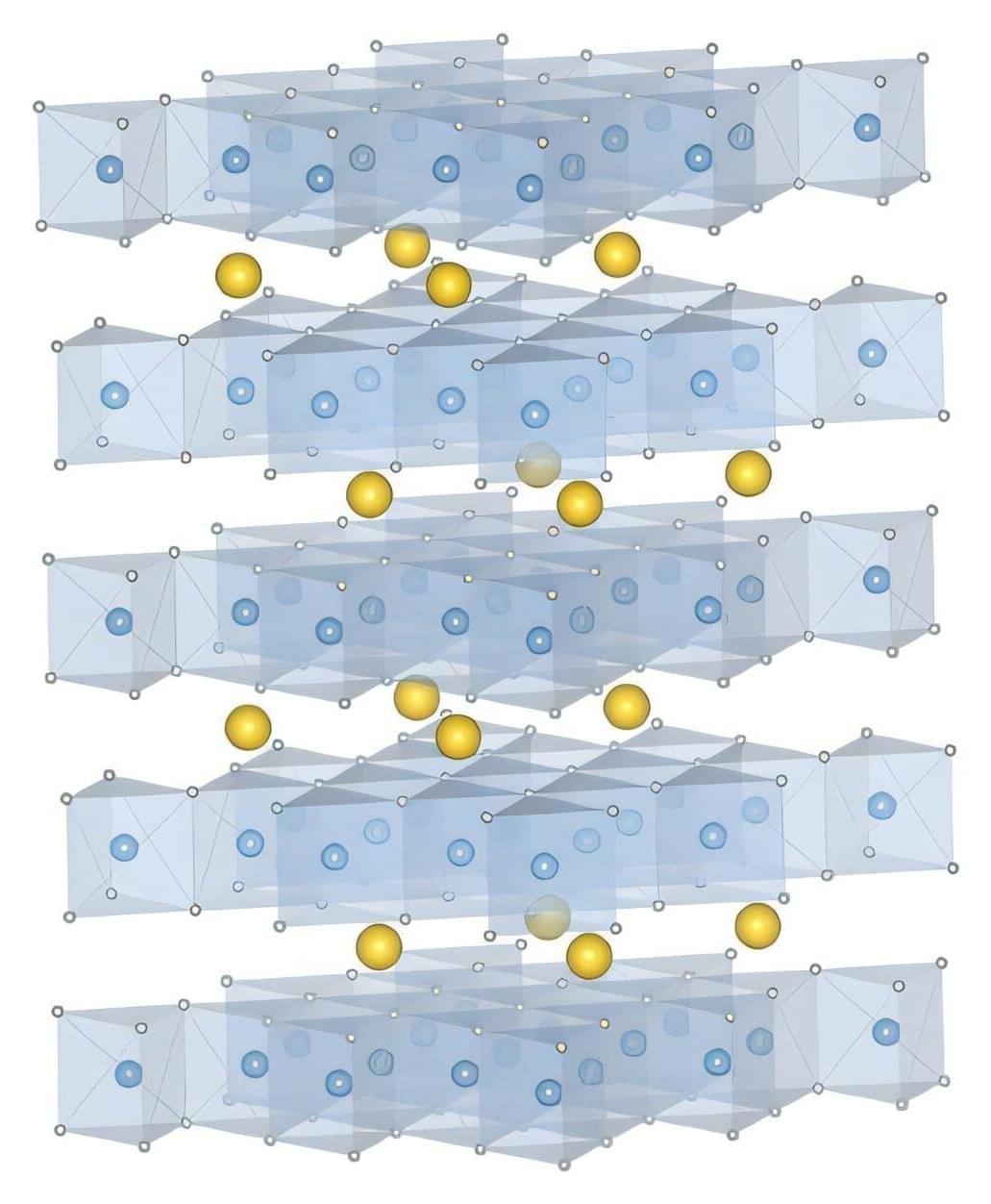

Two-dimensional materials are promising candidates for next-generation electronics because they can be scaled down to thicknesses of just a few atoms while maintaining desirable electronic properties. One route to these new electronic devices lies at the atomic level: creating so-called moiré systems, stacks of 2D materials whose lattices do not match for reasons such as a twist between atomic layers.