Other ERP studies have reported diverse neurophysiological responses to inconsistencies between the message meaning and the speaker’s representation, typically manifested as a modulation of the N400 and/or P600 components15,16,17. The different patterns of ERP results reported in these studies are likely related to the nature of the mismatch manipulations used. For instance, whereas the P600 component is typically associated with reanalysis/repair of syntactic incongruences and grammatical violations18, in experiments manipulating the speaker’s voice it can also be elicited by violations of stereotypical noun roles in the absence of grammatical incongruences as such (e.g., “face powder” or “fight club”, produced by male and female voices, respectively16), as well as by violations of general assumptions based on pronoun processing during sentence comprehension19. In contrast, the semantically related N400 effect has typically been found for semantic-pragmatic incongruences (e.g., “I am going to the night club” in a child’s voice17).

Interestingly, these ERP effects offer support for two models of pragmatic language comprehension: the standard two-step model and the one-step model. The two-step model claims that listeners first compute meaning in isolation and that the communicative context (the speaker’s information, in particular16,20) is considered at a second stage, as reflected in late P600 responses. More recent findings have shown, however, that this pragmatic (extralinguistic) integration likely takes place in a single step, already during semantic processing, as reflected in the N400 effect17,21. Other studies, in turn, have reported an overlap of both processing stages, showing an N400 effect elicited by an expectation error and a late P600 effect for the overall reanalysis of this expectation22.

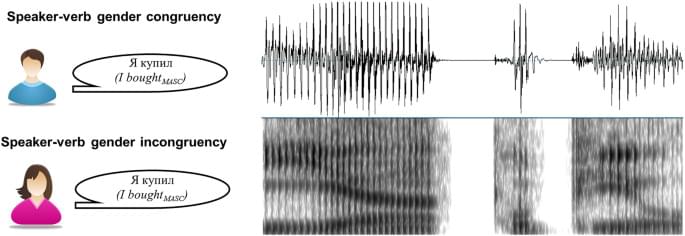

Understanding how listeners integrate gender information is particularly important given the differences in how languages signal grammatical gender. In some languages, such as English, Finnish or Mandarin, overt grammatical gender marking is almost completely absent. Many other languages, such as the Slavic languages, explicitly mark grammatical gender in nouns, verbs, and adjectives, often in a complicated interdependent manner. Russian is one such language, offering an optimal testbed for investigating linguistic and extralinguistic gender integration. As far as we know, only one study has addressed this question in a Slavic language: using Slovak, Hanulíková and Carreiras23 found that, during an active-listening task, the integration of speaker-related information and morphosyntactic information occurred rather late during complex sentence processing. Additionally, a conflict between the speaker’s and the word’s genders (e.g., “I– \(stole_{MASC}\) plums” in a female voice) was reflected in a modulation of the N400 component. Given that N400/LAN modulations have been consistently found for morphosyntactic violations, in particular for number, person, and gender agreement, as well as for phrase structure violations (e.g.,24; for a review, see25), this result may suggest that extralinguistic information is directly integrated during online (morpho)syntactic processing (e.g., the speaker’s sex being converted into the subject’s gender). However, the N400 is also known to be related to conscious, top-down controlled integration of linguistic information24,26. Indeed, in the study described above, participants’ overt attention to the stimuli was required, and the effect generally appeared rather late in the comprehension process.
Thus, the question of whether such findings reflect the involvement of genuine online parsing mechanisms or secondary post-comprehension processes (such as repair and reanalysis24,27) remains unresolved. Importantly, syntactic parsing has been shown to commence much earlier and to take place in a largely automatic fashion, as demonstrated in studies of the early left-anterior negativity (ELAN) and the syntactic MMN. In particular, an ELAN modulation at around 200 ms or earlier has been reported for outright violations of obligatory structure, such as phrase structure errors28,29,30,31; it is considered to reflect automatic early analysis of syntactic structure and, more specifically, the brain’s response to word category violations.