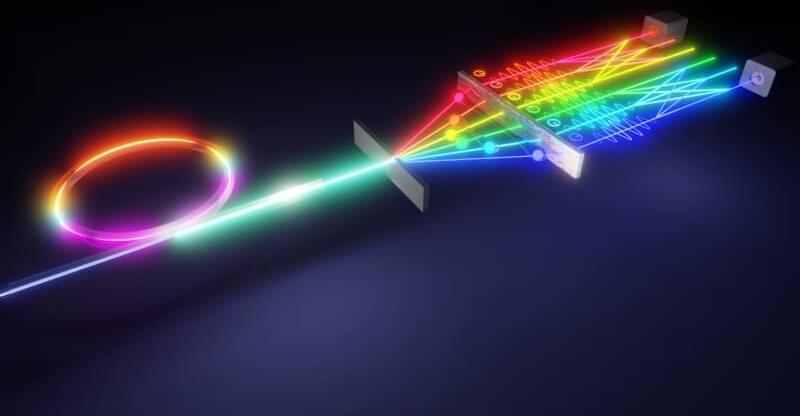

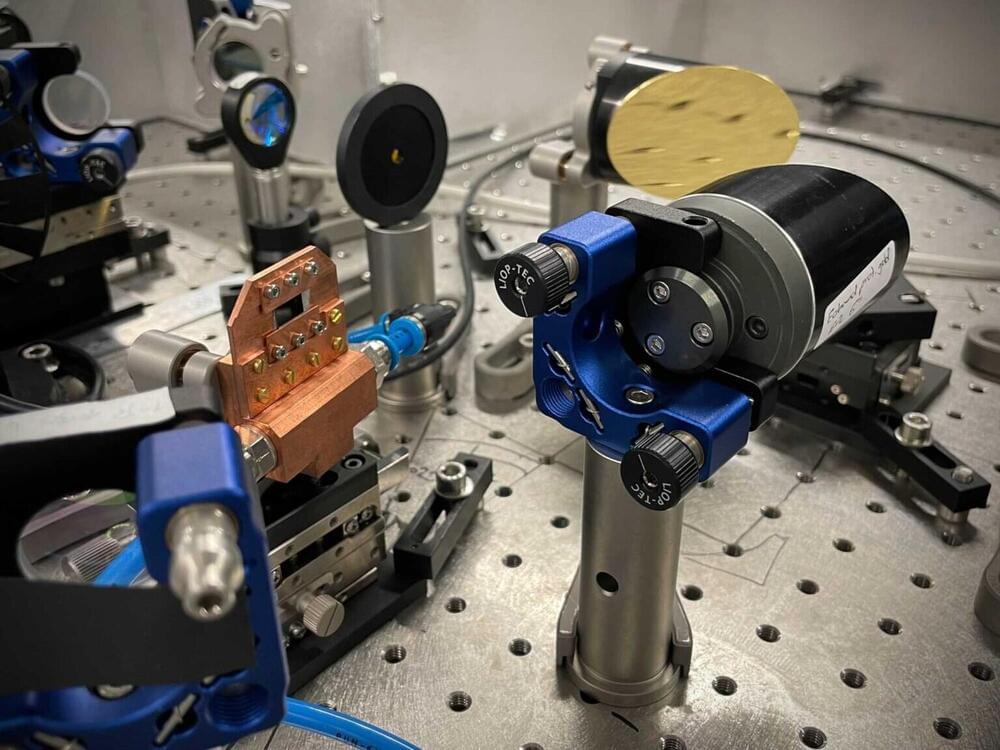

Using existing experimental and computational resources, a multi-institutional team has developed an effective method for measuring high-dimensional qudits encoded in quantum frequency combs, a type of photon source, on a single optical chip.

Although the word “qudit” might look like a typo, this lesser-known cousin of the qubit, or quantum bit, can carry more information and is more resistant to noise. Both qualities are key to improving the performance of quantum networks, quantum key distribution systems and, eventually, the quantum internet.

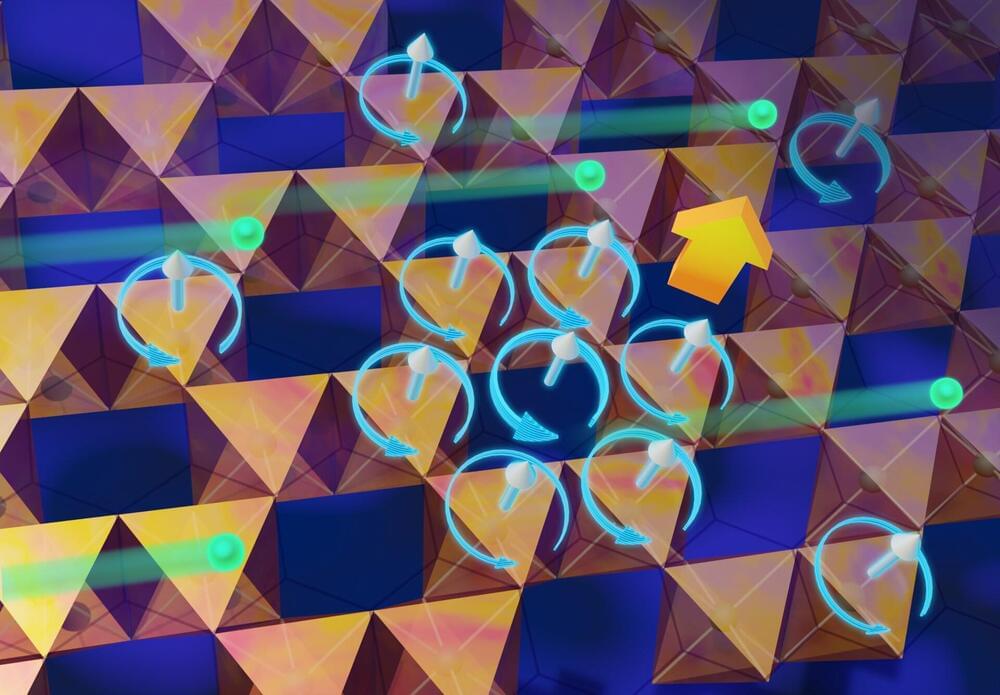

Classical computer bits represent data as either ones or zeroes, whereas qubits can hold a value of one, zero or both at once owing to superposition, a phenomenon that allows a quantum system to exist in a combination of multiple states at the same time. The “d” in qudit stands for the number of different levels, or values, that can be encoded on a photon. Traditional qubits have two levels (d = 2); adding more levels turns them into qudits.
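The idea that more levels means more information can be sketched in a few lines of Python. This is an illustrative sketch only, not the team's measurement method. It assumes a qudit is modeled as a list of d complex amplitudes (for example, one per frequency bin of a comb), and the helper names `equal_superposition`, `is_normalized` and `max_bits` are hypothetical. A single d-level system can convey at most log2(d) bits of classical information.

```python
import math

def equal_superposition(d):
    """Hypothetical helper: amplitudes of an equal superposition over d levels."""
    amp = 1 / math.sqrt(d)
    return [complex(amp, 0.0) for _ in range(d)]

def is_normalized(state, tol=1e-9):
    """Check that the measurement probabilities |a_k|^2 sum to 1."""
    return abs(sum(abs(a) ** 2 for a in state) - 1.0) < tol

def max_bits(d):
    """Upper bound on classical information one d-level system can carry: log2(d) bits."""
    return math.log2(d)

# A qubit (d = 2) and two larger qudits, modeled as simple amplitude lists.
for d in (2, 4, 8):
    psi = equal_superposition(d)
    print(d, is_normalized(psi), max_bits(d))
```

In this model, each doubling of d adds one bit of capacity, so an 8-level qudit can carry up to three times as much information as a qubit.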