By Sieglinde Pfaendler, Omar Costa Hamido, Eduardo Reck Miranda

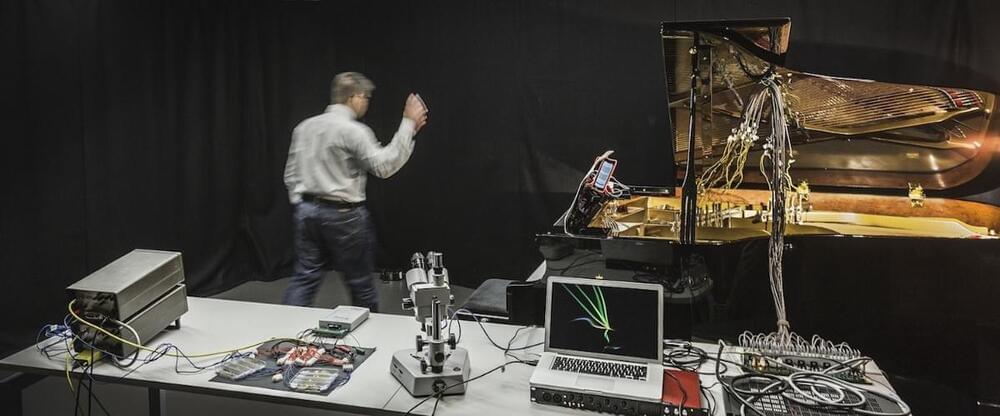

Science and the arts have increasingly inspired each other. In the 20th century, this led to innovations in music composition, new musical instruments, and changes to the way the music industry does business today. In turn, art has helped scientists think in new ways and make advances of their own.

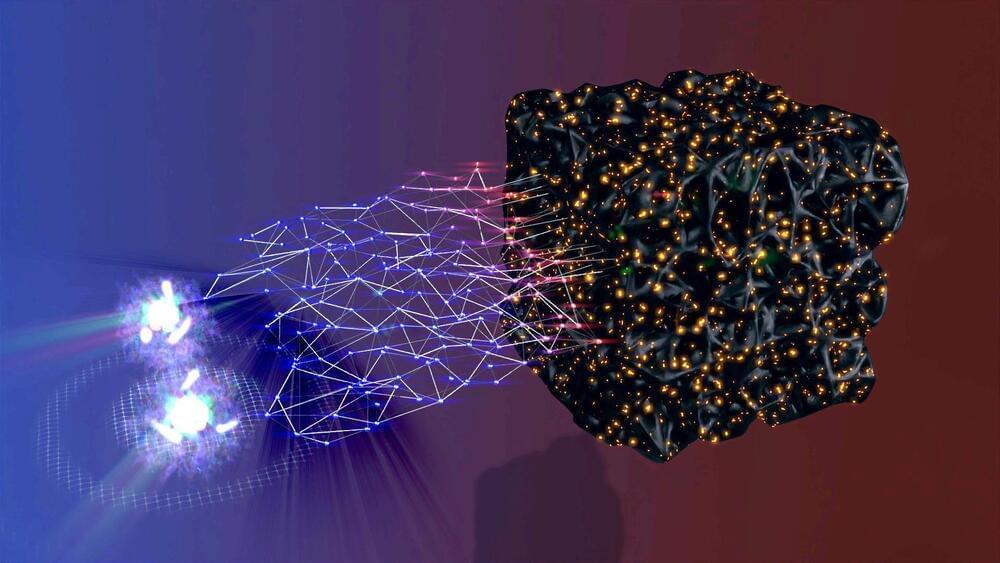

An emerging community leveraging quantum computing in music and the music industry has inspired us to organize the “1st International Symposium on Quantum Computing and Musical Creativity.” This symposium will bring together pioneering individuals from academia, industry, and music. They will present research and new works, share ideas, and learn new tools for incorporating quantum computation into music and the music industry. The symposium was made possible through funding of the QuTune Project, kindly provided by the United Kingdom National Quantum Technologies Programme’s Quantum Computing and Simulation Hub (QCS Hub).