Circa 2018

Digitization results in high energy consumption. In industrialized countries, information technology currently accounts for more than 10% of total power consumption. The transistor is the central element of digital data processing in computing centers, PCs, smartphones, and embedded systems for many applications, from the washing machine to the airplane. A commercially available low-cost USB memory stick already contains several billion transistors. In the future, the single-atom transistor developed by Professor Thomas Schimmel and his team at the Institute of Applied Physics (APH) of KIT might considerably enhance energy efficiency in information technology. “This quantum electronics element enables switching energies smaller than those of conventional silicon technologies by a factor of 10,000,” says physicist and nanotechnology expert Schimmel, who conducts research at the APH, the Institute of Nanotechnology (INT), and the Material Research Center for Energy Systems (MZE) of KIT. Earlier this year, Professor Schimmel, who is considered the pioneer of single-atom electronics, was appointed Co-Director of the Center for Single-Atom Electronics and Photonics established jointly by KIT and ETH Zurich.

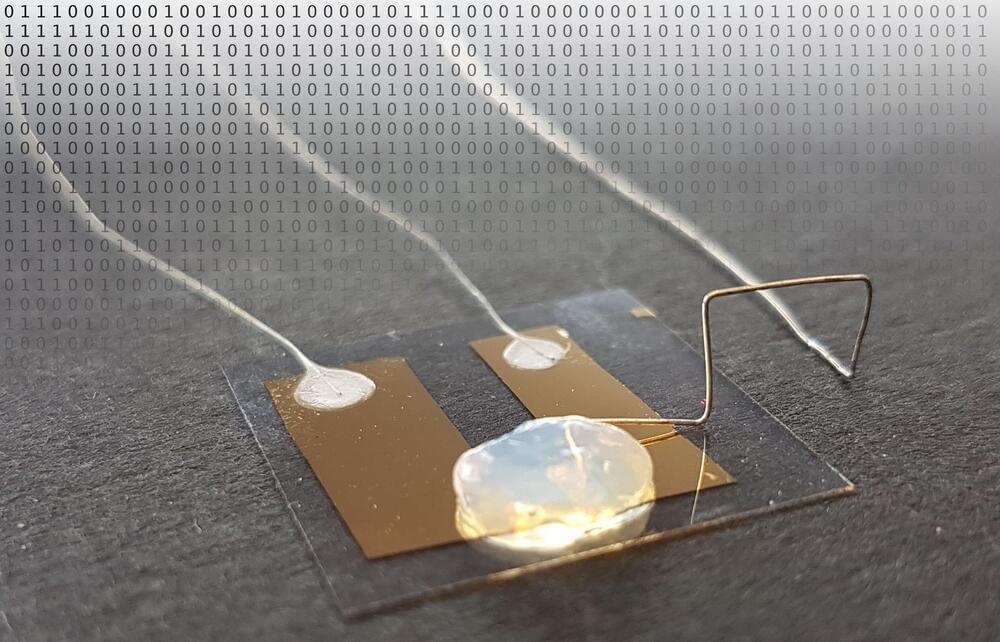

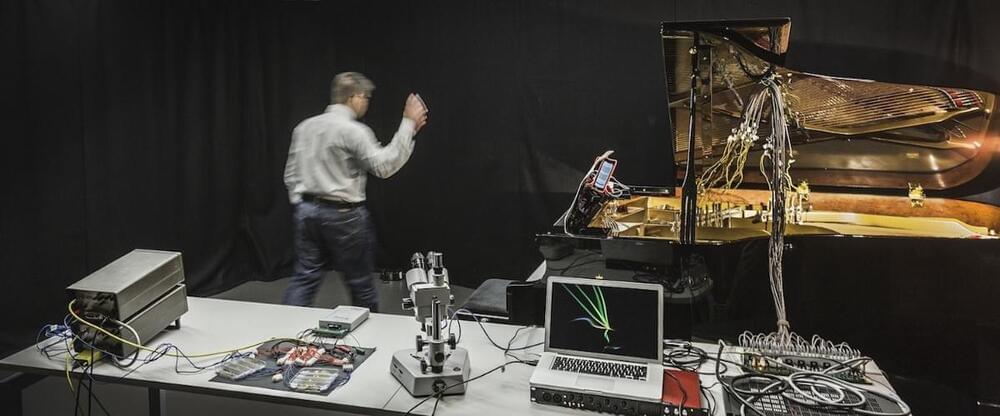

In Advanced Materials, the KIT researchers present a transistor that reaches the limits of miniaturization. The scientists produced two minute metallic contacts separated by a gap as wide as a single metal atom. “By an electric control pulse, we position a single silver atom into this gap and close the circuit,” Professor Thomas Schimmel explains. “When the silver atom is removed again, the circuit is interrupted.” The world’s smallest transistor switches current through the controlled, reversible movement of a single atom. Unlike conventional quantum electronics components, the single-atom transistor works not only at extremely low temperatures near absolute zero, i.e., -273°C, but also at room temperature. This is a big advantage for future applications.

The single-atom transistor is based on an entirely new technical approach. The transistor consists exclusively of metal; no semiconductors are used. This results in extremely low electric voltages and, hence, extremely low energy consumption. So far, KIT’s single-atom transistor has used a liquid electrolyte. Now, Thomas Schimmel and his team have designed a transistor that works in a solid electrolyte. The gel electrolyte, produced by gelling an aqueous silver electrolyte with pyrogenic silicon dioxide, combines the advantages of a solid with the electrochemical properties of a liquid. In this way, both the safety and the handling of the single-atom transistor are improved.