A century after the quantum revolution, a lot of uncertainty remains.

Category: quantum physics – Page 688

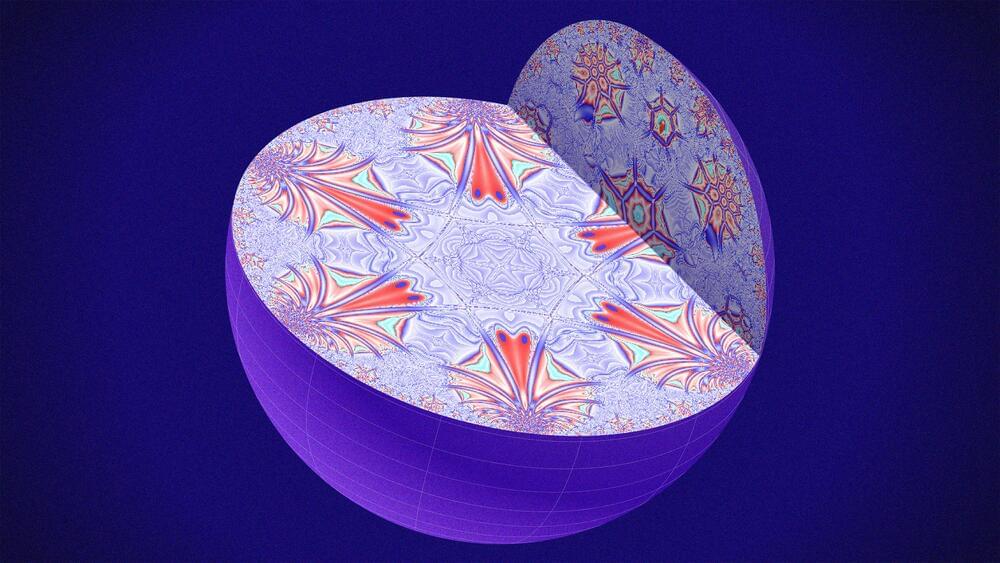

Symmetries Reveal Clues About the Holographic Universe

We’ve known about gravity since Newton’s apocryphal encounter with the apple, but we’re still struggling to make sense of it. While the other three forces of nature are all due to the activity of quantum fields, our best theory of gravity describes it as bent space-time. For decades, physicists have tried to use quantum field theories to describe gravity, but those efforts are incomplete at best.

One of the most promising of those efforts treats gravity as something like a hologram — a three-dimensional effect that pops out of a flat, two-dimensional surface. Currently, the only concrete example of such a theory is the AdS/CFT correspondence, in which a particular type of quantum field theory, called a conformal field theory (CFT), gives rise to gravity in so-called anti-de Sitter (AdS) space. In the bizarre curves of AdS space, a finite boundary can encapsulate an infinite world. Juan Maldacena, the theory’s discoverer, has called it a “universe in a bottle.”

But our universe isn’t a bottle. Our universe is (largely) flat. Any bottle that would contain our flat universe would have to be infinitely far away in space and time. Physicists call this cosmic capsule the “celestial sphere.”

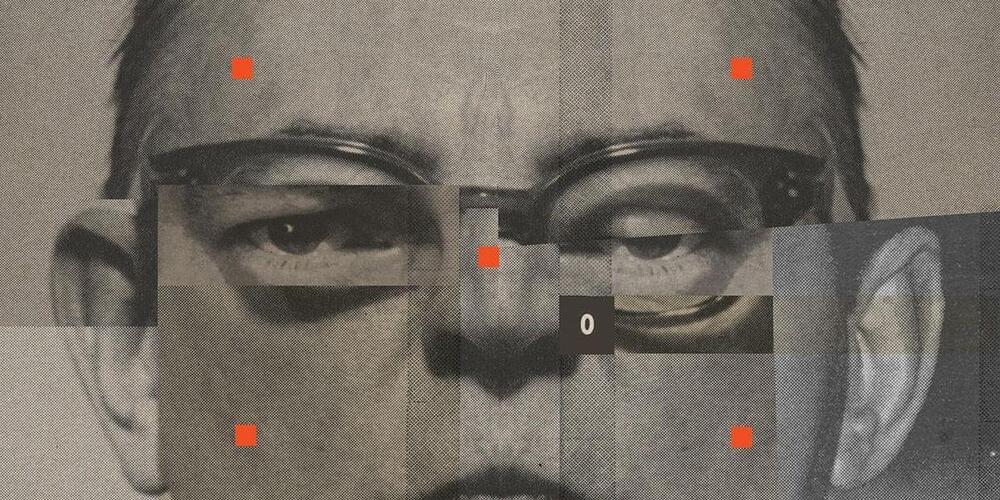

AI’s 6 Worst-Case Scenarios

However, as Malcolm Murdock, machine-learning engineer and author of the 2019 novel The Quantum Price, puts it, “AI doesn’t have to be sentient to kill us all. There are plenty of other scenarios that will wipe us out before sentient AI becomes a problem.”

“We are entering dangerous and uncharted territory with the rise of surveillance and tracking through data, and we have almost no understanding of the potential implications.” —Andrew Lohn, Georgetown University.

In interviews with AI experts, IEEE Spectrum has uncovered six real-world AI worst-case scenarios that are far more mundane than those depicted in the movies. But they’re no less dystopian. And most don’t require a malevolent dictator to bring them to full fruition. Rather, they could simply happen by default, unfolding organically—that is, if nothing is done to stop them. To prevent these worst-case scenarios, we must abandon our pop-culture notions of AI and get serious about its unintended consequences.

Quantum computing companies to see real-world use cases in 2022

Quantum computing is finally making its presence felt among companies around the world. Over the last few years, companies have shown interest in quantum computing but often couldn’t make definitive decisions on using the technology, as there was not enough research on its practical applications beyond the theoretical.

Nevertheless, 2021 has been a remarkable year for the quantum computing industry. Not only has there been more research on the potential use cases for the technology, but investments in quantum computing have shot up globally to boot.

While the US and China continue to compete with each other for supremacy in this evolving branch of computing, other countries and organizations around the world have slowly been playing catch-up as well. And now, 2022 is expected to be the year in which companies can start seeing quantum computing breakthroughs that could result in practical uses.

Scientists Say the Universe Itself May Be “Pixelated”

Here’s a brain teaser for you: scientists are suggesting spacetime may be made out of individual “spacetime pixels,” instead of being smooth and continuous like it seems.

Rana Adhikari, a professor of physics at Caltech, suggested in a new press blurb that these pixels would be “so small that if you were to enlarge things so that it becomes the size of a grain of sand, then atoms would be as large as galaxies.”
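The scale in that quote can be sanity-checked with a few lines of arithmetic. The sketch below is only an order-of-magnitude illustration, not Adhikari's own calculation: it assumes a "pixel" is about one Planck length, a grain of sand is about a millimeter across, and an atom is about an angstrom wide. None of these figures appear in the excerpt, and the function name is hypothetical.

```python
# Order-of-magnitude check of the "atoms as large as galaxies" comparison.
# All three lengths are assumptions for illustration, not values from the article.
PLANCK_LENGTH_M = 1.616e-35  # assumed size of one "spacetime pixel"
SAND_GRAIN_M = 1e-3          # assumed grain of sand, about 1 mm across
ATOM_M = 1e-10               # assumed atomic diameter, about 1 angstrom
LIGHT_YEAR_M = 9.461e15      # meters in one light-year


def scaled_atom_size_m(pixel_m=PLANCK_LENGTH_M,
                       grain_m=SAND_GRAIN_M,
                       atom_m=ATOM_M):
    """Size an atom would have if a pixel were blown up to a sand grain."""
    scale = grain_m / pixel_m  # magnification that turns a pixel into a grain
    return atom_m * scale


if __name__ == "__main__":
    size_m = scaled_atom_size_m()
    print(f"{size_m:.1e} m, about {size_m / LIGHT_YEAR_M:.1e} light-years")
```

Under these assumptions the magnified atom comes out at several hundred thousand light-years across, the same order of magnitude as the roughly 100,000 light-year span usually quoted for the Milky Way's disk, so the comparison in the quote holds up.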

Adhikari’s goal is to reconcile the conventional laws of physics, as determined by general relativity, with the more mysterious world of quantum physics.

Is Space Pixelated? The Quest for Quantum Gravity

Sand dunes seen from afar seem smooth and unwrinkled, like silk sheets spread across the desert. But a closer inspection reveals much more. As you approach the dunes, you may notice ripples in the sand. Touch the surface and you would find individual grains. The same is true for digital images: zoom far enough into an apparently perfect portrait and you will discover the distinct pixels that make the picture.

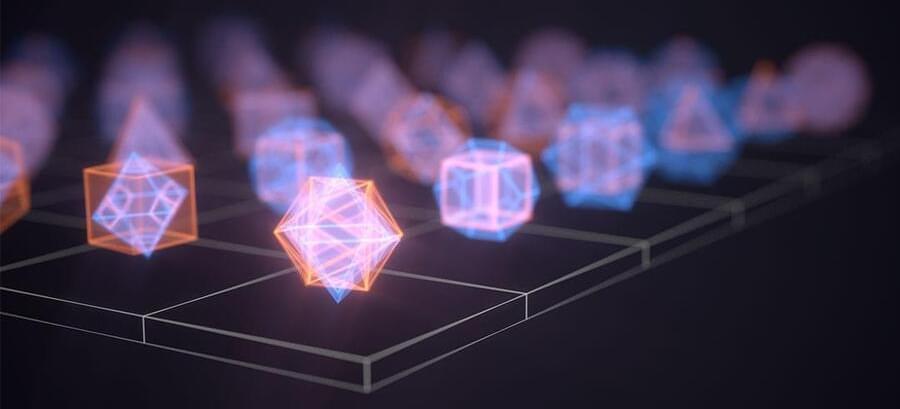

These Will Be the Earliest Use Cases for Quantum Computers

Quantum computers will not be general-purpose machines, though. They will be able to solve some calculations that are completely intractable for current computers and dramatically speed up processing for others. But many of the things they excel at are niche problems, and they will not replace conventional computers for the vast majority of tasks.

That means the ability to benefit from this revolution will be highly uneven, which prompted analysts at McKinsey to investigate who the early winners could be in a new report. They identified the pharmaceutical, chemical, automotive, and financial industries as those with the most promising near-term use cases.

The authors take care to point out that making predictions about quantum computing is hard because many fundamental questions remain unanswered; for instance, the relative importance of the quantity and quality of qubits or whether there can be practical uses for early devices before they achieve fault tolerance.

Exotic Forces: Do Tractor Beams Break the Laws of Physics?

It depends.

Warp drive. Site-to-site transporter technology. A vast network of interstellar wormholes that take us to bountiful alien worlds. Beyond a hefty holiday wish-list, the ideas presented to us in sci-fi franchises like Gene Roddenberry’s “Star Trek” have inspired countless millions to dream of a time when humans have used technology to rise above the everyday limits of nature, and explore the universe.

But to guarantee the shortest path to turning at least some of these ideas into genuine scientific breakthroughs, we need to push ideas like general relativity to the breaking point. Tractor beams, one of the genre's most exotic ideas, involve manipulating space-time to pull or push objects at a distance, and they take us beyond the everyday paradigm of science, to the very edge of theoretical physics. A team of scientists examined how they might work in a recent study shared on a preprint server.

“In researching sci-fi ideas like tractor beams, the goal is to push and try to find a demarcation point where something more is needed, like quantum gravity,” said Sebastian Schuster, a scientist with a doctorate in mathematical physics from the Charles University of Prague, in an interview with IE. And, in finding out if tractor beams can work, we might also uncover even more exotic forces, like quantum gravity. So strap in.

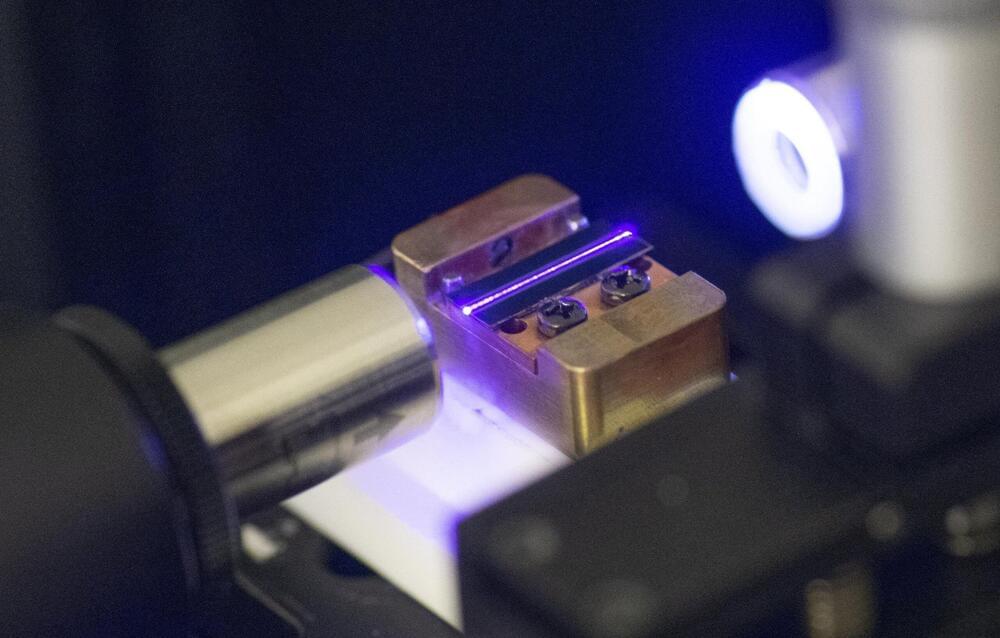

Qubits Can Be as Safe as Bits, Researchers Show

Over the centuries, we have learned to put information into increasingly durable and useful forms, from stone tablets to paper to digital media. In the 1980s, researchers began theorizing about how to store the information inside a quantum computer, where it is subject to all sorts of atomic-scale errors. By the 1990s they had found a few methods, but these methods fell short of their rivals from classical (regular) computers, which provided an incredible combination of reliability and efficiency.

Now, in a preprint posted on November 5, Pavel Panteleev and Gleb Kalachev of Moscow State University have shown that — at least, in theory — quantum information can be protected from errors just as well as classical information can. They did it by combining two exceptionally compatible classical methods and inventing new techniques to prove their properties.

“It’s a huge achievement by Pavel and Gleb,” said Jens Eberhardt of the University of Wuppertal in Germany.