The 2021 National Research Infrastructure Roadmap has identified priorities for future investment in Australia’s national research infrastructure.

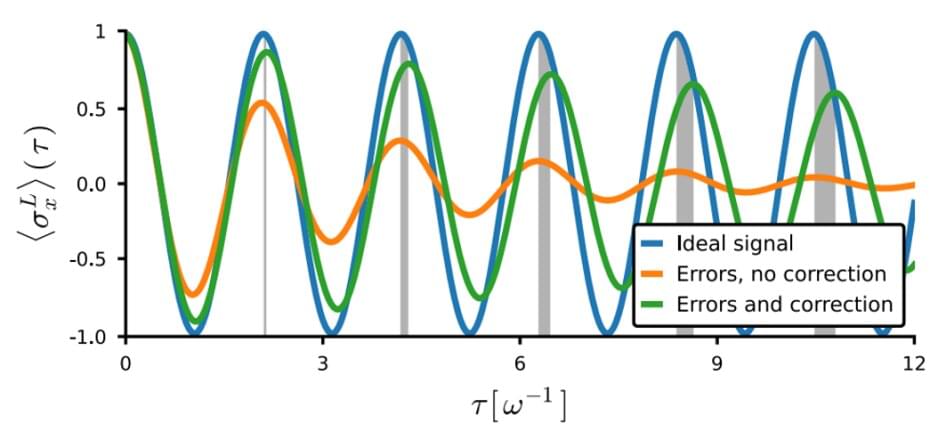

It is well established that quantum error correction can improve the performance of quantum sensors. But new theoretical work cautions that, unexpectedly, the approach can also produce inaccurate and misleading results, and shows how to rectify these shortcomings.

Quantum systems can interact with one another and with their surroundings in ways that are fundamentally different from those of their classical counterparts. In a quantum sensor, the particularities of these interactions are exploited to obtain characteristic information about the environment of the quantum system, for instance the strength of a magnetic or electric field in which it is immersed. Crucially, when such a device suitably harnesses the laws of quantum mechanics, its sensitivity can surpass what is possible, even in principle, with conventional, classical technologies.
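One standard way to make "surpass classical technologies" concrete is to compare N independent probes, whose phase uncertainty scales as the standard quantum limit 1/√N, with N entangled probes (for example a GHZ state), which in the ideal, noiseless case can reach the Heisenberg limit 1/N. The sketch below only evaluates these two textbook scaling laws; prefactors and noise are ignored, and the function names are illustrative rather than taken from the research described here.

```python
import math


def standard_quantum_limit(n_probes: int) -> float:
    """Phase uncertainty reachable with n independent (unentangled) probes."""
    return 1.0 / math.sqrt(n_probes)


def heisenberg_limit(n_probes: int) -> float:
    """Ideal, noiseless phase uncertainty with n maximally entangled probes."""
    return 1.0 / n_probes


def quantum_advantage(n_probes: int) -> float:
    """Factor by which the Heisenberg limit beats the standard quantum limit."""
    return standard_quantum_limit(n_probes) / heisenberg_limit(n_probes)


for n in (10, 100, 10_000):
    print(f"N={n:>6}: SQL={standard_quantum_limit(n):.4f}, "
          f"HL={heisenberg_limit(n):.6f}, gain={quantum_advantage(n):.1f}x")
```

The gain grows only as √N, and it assumes noiseless entangled probes; noise on those probes typically erodes it, which is one reason error correction becomes attractive for sensing.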

Unfortunately, quantum sensors are exquisitely sensitive not only to the physical quantities of interest, but also to noise. One way to suppress these unwanted contributions is to apply schemes collectively known as quantum error correction (QEC). This approach is attracting considerable and increasing attention, as it might enable practical high-precision quantum sensors in a wider range of applications than is possible today. But the benefits of error-corrected quantum sensing come with major potential side effects, as a team led by Florentin Reiter, an Ambizione fellow of the Swiss National Science Foundation working in the group of Jonathan Home at the Institute for Quantum Electronics, has now found. Writing in Physical Review Letters, they report theoretical work in which they show that in realistic settings QEC can distort the output of quantum sensors and might even lead to unphysical results.
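As a generic illustration of how error correction suppresses noise, the textbook 3-bit repetition code with majority-vote decoding is the simplest case. This is a classical bit-flip toy model chosen for clarity; it is not the sensing protocol analysed by Reiter and colleagues and says nothing about the distortions they report. The sketch enumerates all eight error patterns exactly.

```python
from itertools import product


def logical_error_rate(p: float) -> float:
    """Exact failure probability of a 3-bit repetition code with majority-vote
    decoding, when each bit flips independently with probability p."""
    total = 0.0
    for flips in product((0, 1), repeat=3):      # all 8 error patterns
        prob = 1.0
        for flipped in flips:
            prob *= p if flipped else 1.0 - p
        if sum(flips) >= 2:                       # majority vote is fooled
            total += prob
    return total


for p in (0.01, 0.1, 0.3):
    print(f"physical p = {p:.2f} -> logical {logical_error_rate(p):.4f}")
```

The result equals 3p² − 2p³, which is smaller than p only for p < 1/2: redundancy helps when the underlying noise is weak enough.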

We study the question of how to decompose Hilbert space into a preferred tensor-product factorization without any pre-existing structure other than a Hamiltonian operator, in particular the case of a bipartite decomposition into “system” and “environment.” Such a decomposition can be defined by looking for subsystems that exhibit quasi-classical behavior. The correct decomposition is one in which pointer states of the system are relatively robust against environmental monitoring (their entanglement with the environment does not continually and dramatically increase) and remain localized around approximately classical trajectories. We present an in-principle algorithm for finding such a decomposition by minimizing a combination of entanglement growth and internal spreading of the system. Both of these properties are related to locality in different ways.
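The entanglement term in such a criterion depends on which factorization is chosen. A minimal two-qubit sketch of that dependence: compute the von Neumann entropy of the "system" factor for a Bell state, then re-express the same state in a different tensor factorization, defined here by a CNOT basis change. Both the two-qubit setting and the CNOT relabelling are illustrative assumptions; the sketch does not reproduce the authors' search over factorizations or their spreading term.

```python
import math


def entanglement_entropy(psi):
    """Von Neumann entropy (in bits) of the first factor of a two-qubit pure state.

    psi holds amplitudes of |00>, |01>, |10>, |11> for a chosen
    system (first factor) x environment (second factor) split.
    """
    a, b, c, d = psi
    norm = sum(abs(x) ** 2 for x in psi)
    # For M = [[a, b], [c, d]], the reduced density matrix rho = M M^dagger / norm
    # has trace 1 and determinant |det M|^2 / norm^2.
    det_rho = abs(a * d - b * c) ** 2 / norm ** 2
    disc = math.sqrt(max(0.0, 1.0 - 4.0 * det_rho))
    entropy = 0.0
    for lam in ((1.0 + disc) / 2.0, (1.0 - disc) / 2.0):
        if lam > 1e-15:                  # 0 * log(0) contributes nothing
            entropy -= lam * math.log2(lam)
    return entropy


def refactor_with_cnot(psi):
    """Re-express the state in another factorization (illustrative choice):
    a CNOT basis change that swaps the |10> and |11> amplitudes."""
    a, b, c, d = psi
    return (a, b, d, c)


s = 1 / math.sqrt(2)
bell = (s, 0, 0, s)
print(entanglement_entropy(bell))                      # ~1 bit: maximally entangled
print(entanglement_entropy(refactor_with_cnot(bell)))  # ~0 bits: a product state
```

The same vector is maximally entangled under one split and unentangled under the other, which is why the choice of decomposition, rather than the state alone, determines how much entanglement growth is measured.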

When I first started looking into quantum computing I had a fairly pessimistic view about its near-term commercial prospects, but I’ve come to think we’re only a few years away from seeing serious returns on the technology.

Ab initio calculations

Classical computers offer little help with exact ab initio calculations, because the memory and time needed to represent a many-electron wavefunction exactly grow exponentially with the size of the system. A quantum computer sidesteps this by using one quantum system to simulate another. Tasks such as modelling atomic bonding or estimating the overlap of electron orbitals could therefore, in principle, be carried out much more precisely.
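The exponential cost can be made concrete with a back-of-the-envelope estimate. This sketch assumes one qubit per spin-orbital (as in the Jordan-Wigner mapping) and stores each of the 2ⁿ amplitudes as a 16-byte complex128 number; it measures only brute-force exact simulation, not approximate classical methods such as density functional theory, which trade exactness for polynomial cost.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store every amplitude of an n-qubit state exactly."""
    return (2 ** n_qubits) * bytes_per_amplitude


def human_readable(n_bytes: int) -> str:
    """Format a byte count with binary (1024-based) units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    size = float(n_bytes)
    for unit in units:
        if size < 1024 or unit == units[-1]:
            return f"{size:.0f} {unit}"
        size /= 1024
    return f"{size:.0f} {units[-1]}"


# Assumption: one qubit per spin-orbital (Jordan-Wigner-style encoding).
for spin_orbitals in (10, 30, 50):
    print(spin_orbitals, human_readable(statevector_bytes(spin_orbitals)))
```

Each extra spin-orbital doubles the memory: 30 spin-orbitals already need 16 GiB, and 50 need 16 PiB, whereas a quantum computer would hold the same state in 50 qubits.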

►Is faster-than-light (FTL) travel possible? In most discussions of this, we get hung up on the physics of particular ideas, such as wormholes or warp drives. But today, we take a more zoomed-out approach that addresses all FTL propulsion, as well as FTL messaging, because it turns out that they all allow for time travel. Join us today as we explore why this is so and the profound consequences that ensue.
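The first step of the standard argument can be sketched with a Lorentz transformation. A signal emitted at the origin at t = 0 and received at x = ut has, in a frame moving at velocity v, a reception time t' = γ(t − vx/c²) = γt(1 − uv/c²). For u > c there is always an allowed frame with c²/u < v < c in which t' < 0, so that observer sees the signal arrive before it was sent. The signal speed u = 2c and the frame velocities below are arbitrary illustrative choices; turning this ordering reversal into a message to one's own past requires a second FTL signal sent back, which this sketch does not model.

```python
import math


def boosted_time(t: float, x: float, v: float, c: float = 1.0) -> float:
    """Time coordinate of event (t, x) seen from a frame moving at velocity v
    along x (standard Lorentz transformation)."""
    gamma = 1.0 / math.sqrt(1.0 - (v / c) ** 2)
    return gamma * (t - v * x / c ** 2)


# Hypothetical FTL signal: emitted at the origin at t = 0 and received at
# t = 1 after travelling at u = 2c (units with c = 1).
u = 2.0
t_recv = 1.0
x_recv = u * t_recv

for v in (0.0, 0.3, 0.6):
    # Emission stays at t' = 0 in every frame, so a negative t' for the
    # reception means that observer sees the signal arrive before it was sent.
    print(f"v = {v:.1f}c: reception at t' = {boosted_time(t_recv, x_recv, v):+.3f}")
```

Here the threshold is c²/u = 0.5c, so the ordering flips for the v = 0.6c observer while both slower frames still see emission before reception.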

Written & presented by Prof David Kipping. Special thanks to Prof Matt Buckley for fact-checking and for his great blog article that inspired this video (http://www.physicsmatt.com/blog/2016/8/25/why-ftl-implies-time-travel).





A new method of identifying gravitational wave signals using quantum computing could provide a valuable new tool for future astrophysicists.

A team from the University of Glasgow’s School of Physics & Astronomy have developed a quantum algorithm to drastically cut down the time it takes to match gravitational wave signals against a vast databank of templates.

This process, known as matched filtering, is part of the methodology that underpins some of the gravitational wave signal discoveries from detectors like the Laser Interferometer Gravitational-Wave Observatory (LIGO) in the United States and Virgo in Italy.
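A toy classical version of matched filtering helps show where the time goes: every template in the bank is slid along the data and correlated, and the best-scoring template and offset are kept. The chirp templates, noise level, bank size and injection below are all hypothetical stand-ins for real waveform models and detector data. The final function assumes, for illustration only, a Grover-style quadratic speedup (about (π/4)√N oracle queries instead of N); it is not a description of the Glasgow team's algorithm.

```python
import math
import random


def toy_chirp(f0, rate, n, dt=1.0 / 256):
    """Hypothetical template: a sinusoid whose frequency rises linearly
    from f0 (Hz) at `rate` (Hz/s), sampled at 256 Hz."""
    return [math.sin(2 * math.pi * (f0 * k * dt + 0.5 * rate * (k * dt) ** 2))
            for k in range(n)]


def matched_filter(data, template):
    """Slide the unit-normalized template along the data and return the peak
    correlation and its offset (optimal linear statistic in white Gaussian noise)."""
    norm = math.sqrt(sum(x * x for x in template))
    unit = [x / norm for x in template]
    best_score, best_shift = -math.inf, 0
    for shift in range(len(data) - len(unit) + 1):
        score = sum(d * h for d, h in zip(data[shift:shift + len(unit)], unit))
        if score > best_score:
            best_score, best_shift = score, shift
    return best_score, best_shift


def search_bank(data, bank):
    """Classical exhaustive search: filter against every template, keep the best."""
    results = [matched_filter(data, tmpl) for tmpl in bank]
    best = max(range(len(bank)), key=lambda i: results[i][0])
    return best, results[best]


def grover_queries(n_templates):
    """Oracle calls a Grover search needs to find one marked item among n:
    about (pi/4) * sqrt(n), versus n for the exhaustive loop above."""
    return math.floor(math.pi / 4 * math.sqrt(n_templates))


rng = random.Random(0)
bank = [toy_chirp(f0, rate, 128) for f0 in (10, 20, 30) for rate in (20, 60)]
data = [rng.gauss(0.0, 1.0) for _ in range(512)]  # white noise
for k, h in enumerate(bank[2]):                   # inject template 2 at offset 40
    data[40 + k] += 3.0 * h

index, (score, shift) = search_bank(data, bank)
print(index, shift, round(score, 1))
print(grover_queries(1_000_000))
```

The classical cost grows linearly with the number of templates; under the assumed quadratic speedup, a bank of a million templates would need roughly 785 oracle queries instead of a million filter evaluations, before accounting for the overhead of loading data onto a quantum device.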

In the new field of quantum computing, magnetic interactions could play a role in relaying quantum information.

In new research, Argonne scientists achieved efficient quantum coupling between two distant magnetic devices, which may be useful for creating new quantum information technology devices: https://bit.ly/3uk88Q3