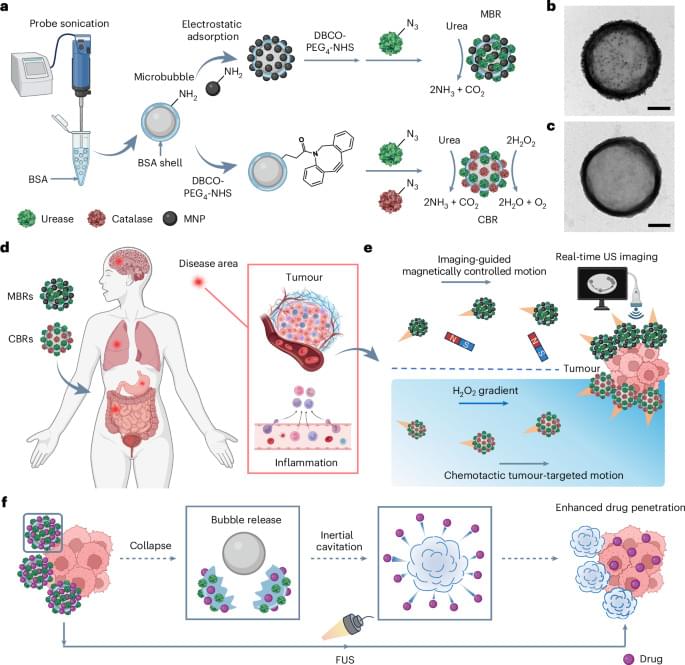

In order to build the computers and devices of tomorrow, we have to understand how they use energy today. That’s harder than it sounds. Memory storage and information processing in these technologies involve a constant flow of energy, so the systems never settle into thermodynamic equilibrium. To complicate things further, one of the most precise ways to study these processes starts at the smallest scale: the quantum domain.

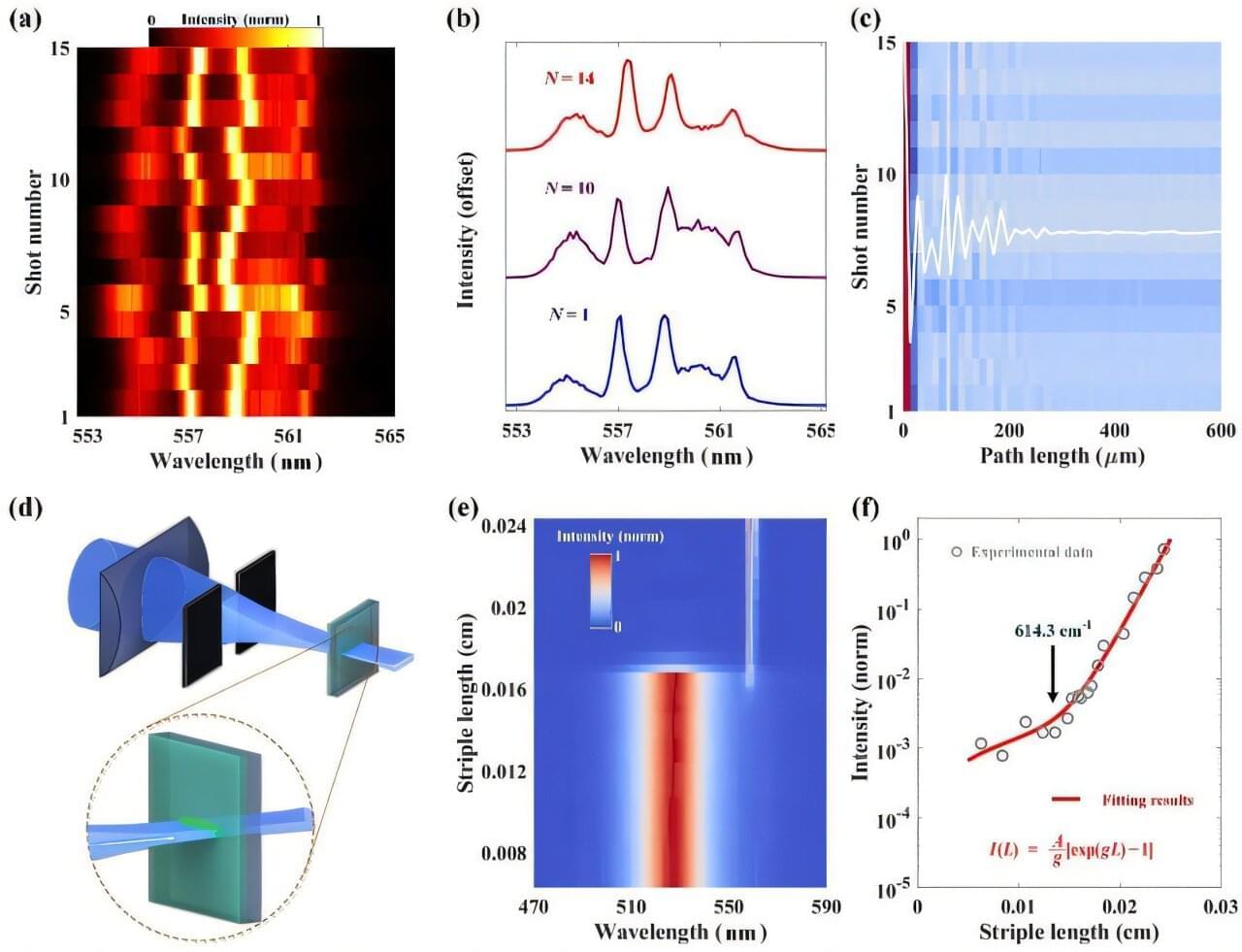

New Stanford research published in Nature Physics combines theory, experimentation, and machine learning to quantify energy costs during a non-equilibrium process with ultrahigh sensitivity. Researchers used extremely small nanocrystals called quantum dots, which have unique light-emitting properties that arise from quantum effects at the nanoscale.

They measured the entropy production of quantum dots, a quantity that describes how irreversible a microscopic process is and encodes information about memory, information loss, and energy costs. Such measurements can help determine the ultimate speed limits for a device or how efficient it can be.
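To make "how irreversible a process is" concrete, here is a textbook toy model. It is not the Stanford team's method, which combined theory, experiment, and machine learning. The sketch computes the steady-state entropy production of a hypothetical three-state Markov chain. It compares how often each transition occurs forward in time with how often its time-reversed counterpart occurs. A process in detailed balance, meaning a reversible one, produces zero entropy. A process driven around a cycle produces a positive amount. The function names and transition matrices are illustrative assumptions.

```python
import math

# Hypothetical toy model: a discrete-time Markov chain with a
# row-stochastic transition matrix P (P[i][j] = probability of i -> j).

def stationary(P, iters=10_000):
    """Stationary distribution via power iteration (assumes an aperiodic, irreducible chain)."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi

def entropy_production(P):
    """Steady-state entropy production per step, in units of k_B.

    sigma = sum_ij pi_i P_ij * ln(pi_i P_ij / (pi_j P_ji)).
    Assumes P[j][i] > 0 whenever P[i][j] > 0, so every step has a reverse.
    """
    pi = stationary(P)
    n = len(P)
    sigma = 0.0
    for i in range(n):
        for j in range(n):
            forward = pi[i] * P[i][j]   # flux of the step i -> j
            backward = pi[j] * P[j][i]  # flux of the reversed step j -> i
            if forward > 0:
                sigma += forward * math.log(forward / backward)
    return sigma

# Symmetric chain: obeys detailed balance, so it is reversible.
P_reversible = [[0.50, 0.25, 0.25],
                [0.25, 0.50, 0.25],
                [0.25, 0.25, 0.50]]

# Chain biased to cycle 0 -> 1 -> 2 -> 0: driven out of equilibrium.
P_driven = [[0.1, 0.6, 0.3],
            [0.3, 0.1, 0.6],
            [0.6, 0.3, 0.1]]

print(entropy_production(P_reversible))  # approximately 0
print(entropy_production(P_driven))      # 0.3 * ln 2, about 0.208
```

For the driven chain, the stationary distribution is uniform. Each state therefore contributes 0.6 ln 2 + 0.3 ln(1/2) = 0.3 ln 2, averaged with weight 1/3. This gives a total of 0.3 ln 2 per step. The larger the imbalance between forward and reverse transitions, the more entropy the process produces.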