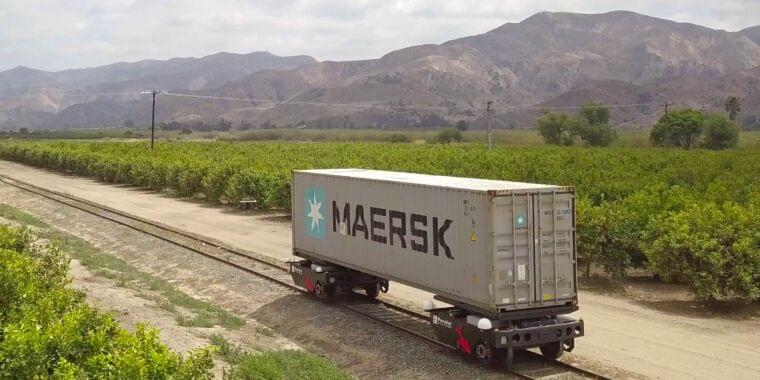

Mile-long diesel trains loaded with cargo, chugging slowly across the U.S., could one day be a thing of the past if stealth startup Parallel Systems has its way. The Los Angeles company thinks the future of freight lies in autonomous, battery-powered trains that squeeze far more capacity out of existing rail lines.

Founded by a trio of former SpaceX engineers, including CEO Matt Soule, Parallel envisions smaller, more flexible zero-emission trains that pull no more than 50 cars and run more frequently than the traditional behemoths hauling over 150 boxcars at a time. The idea caught the attention of tech-oriented venture firms, including Anthos Capital, Congruent Ventures, Riot Ventures and Embark Ventures. With backing from those firms and other investors, Parallel just raised $49.6 million to refine prototypes and software for its futuristic trains and, eventually, to shift more freight hauling from trucks to rail.
