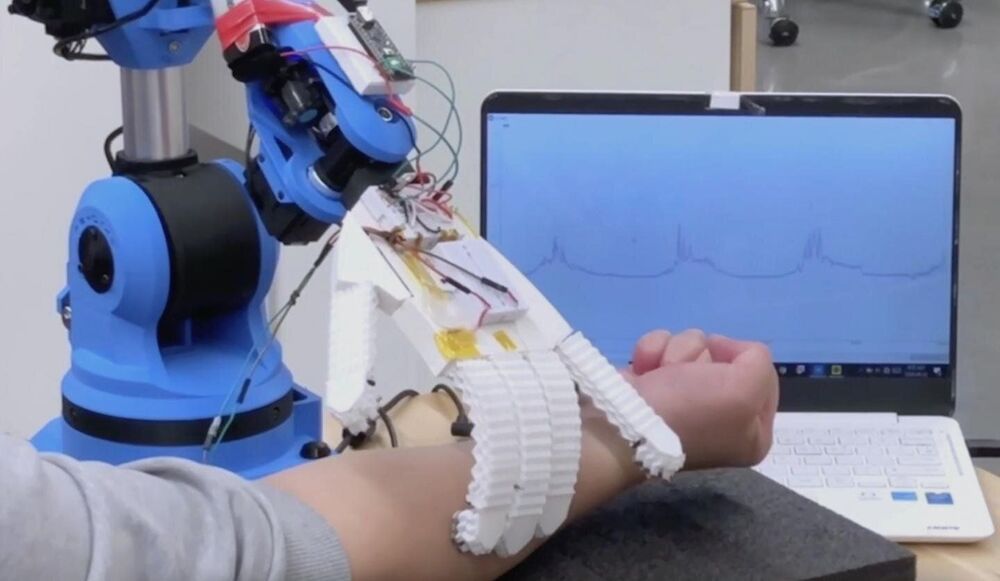

Japanese space robotics startup Gitai has raised $17.1 million in a Series B funding round. The new capital will go toward hiring, as well as the development and execution of an on-orbit demonstration mission for the company’s robotic technology, which is meant to show its efficacy in performing in-space satellite servicing work. That mission is currently set to take place in 2023.

Gitai will also be staffing up specifically in the U.S. as it seeks to expand its stateside presence and attract more business from that market.

“We are proceeding well in the Japanese market, and we’ve already contracted missions from Japanese companies, but we haven’t expanded to the U.S. market yet,” explained Gitai founder and CEO Sho Nakanose in an interview. “So we would like to get missions from U.S. commercial space companies, as a subcontractor first. We’re especially interested in on-orbit servicing, and we would like to provide general-purpose robotic solutions for an orbital service provider in the U.S.”