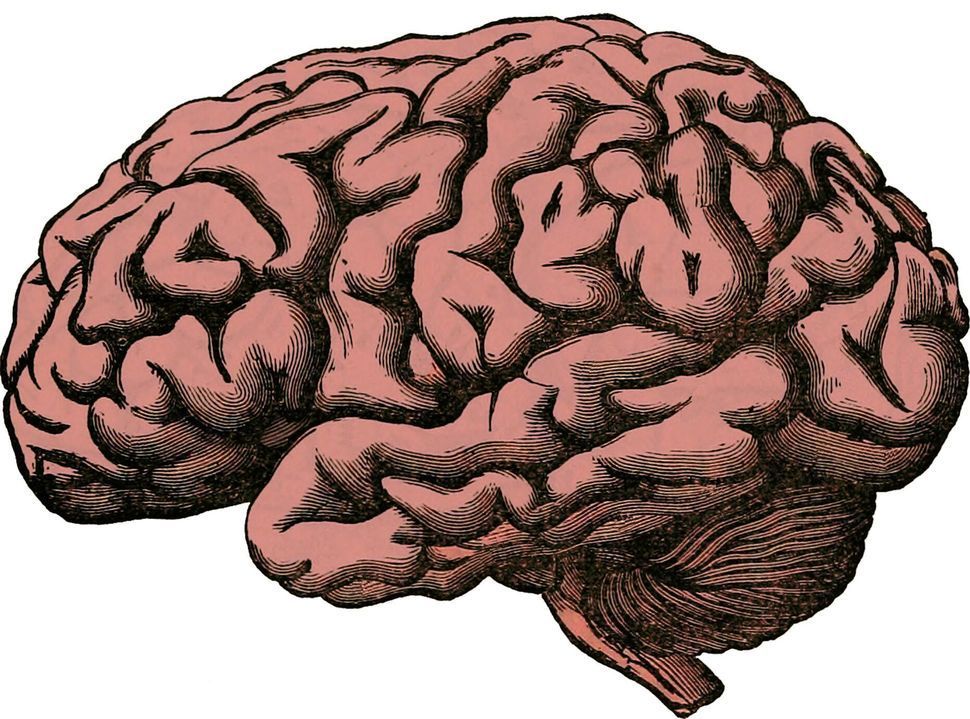

I recently came to an important realisation: I will most likely see the so-called Singularity happen in my own lifetime. I’m 56 at the time of writing, and I believe that the inflection point at which computers, ‘thinking machines’ and AI may become infinitely and recursively powerful is at most 20–25 years away, and it might be as soon as 12–15 years from today.

We are at ‘4’ on the exponential curve, and this matters a lot. Doubling a small number such as 0.01 makes hardly any difference (it only becomes 0.02), while doubling from 4 to 8, 16, 32 and 64 is another story altogether. Timing is essential, and the future is increasingly bound to happen gradually, then suddenly.