Machine Learning Crash Course: Introduction to Machine Learning. This module introduces Machine Learning (ML). Estimated Time: 3 minutes. Learning Objectives: Recognize the practical benefits of mastering machine learning. Understand the philosophy behind machine learning. Int…

Category: robotics/AI

NASA Will Flight Test a Nuclear Rocket by 2024 and Other High Tech NASA Projects

A portion of NASA’s $21.5 billion 2019 budget is for developing advanced space power and propulsion technology. NASA will spend $176 to $217 million on maturing new technology. Some of these projects are already under way, while others are new efforts that NASA will start and try to complete. They cover propulsion, robotics, materials and other capabilities. Space technology received $926.9 million in NASA’s 2019 budget.

NASA’s space technology projects look interesting, but ten times more resources could be devoted to advancing technological capability if NASA’s budget and priorities were changed.

NASA is spending only about 1% of its budget on advanced space power and propulsion technology. By contrast, NASA will spend $3.5 billion in 2019 on the Space Launch System (SLS) and the Orion capsule. SLS will be a heavy rocket that starts out at roughly the payload capacity of the SpaceX Falcon Heavy and later reaches roughly that of the SpaceX Super Heavy Starship. However, each SLS launch will cost about $1 billion, roughly ten times what a SpaceX launch costs. NASA is looking at a first launch in 2021–2022 and a second in 2024. That would be $19+ billion from 2019–2024 for two heavy launches, and that assumes there are no delays.
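As a quick check on the share of the budget going to advanced power and propulsion, the short Python sketch below divides the article's $176 to $217 million range (and the $926.9 million space technology figure) by the $21.5 billion total. All amounts are in millions of dollars; the constant and function names are illustrative, not taken from any NASA source.

```python
# Figures quoted in the article, in millions of US dollars.
TOTAL_BUDGET_2019 = 21_500.0    # NASA's total 2019 budget
POWER_PROPULSION_LOW = 176.0    # low end for advanced power and propulsion
POWER_PROPULSION_HIGH = 217.0   # high end for advanced power and propulsion
SPACE_TECHNOLOGY = 926.9        # space technology line in the 2019 budget


def share_percent(part: float, whole: float) -> float:
    """Return `part` as a percentage of `whole`."""
    return 100.0 * part / whole


if __name__ == "__main__":
    low = share_percent(POWER_PROPULSION_LOW, TOTAL_BUDGET_2019)
    high = share_percent(POWER_PROPULSION_HIGH, TOTAL_BUDGET_2019)
    tech = share_percent(SPACE_TECHNOLOGY, TOTAL_BUDGET_2019)
    print(f"Advanced power and propulsion: {low:.2f}% to {high:.2f}% of the budget")
    print(f"Space technology overall: {tech:.2f}% of the budget")
```

Run as a script, it reports that the range works out to roughly 0.82% to 1.01% of the total budget, and that space technology as a line item is about 4.31%.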

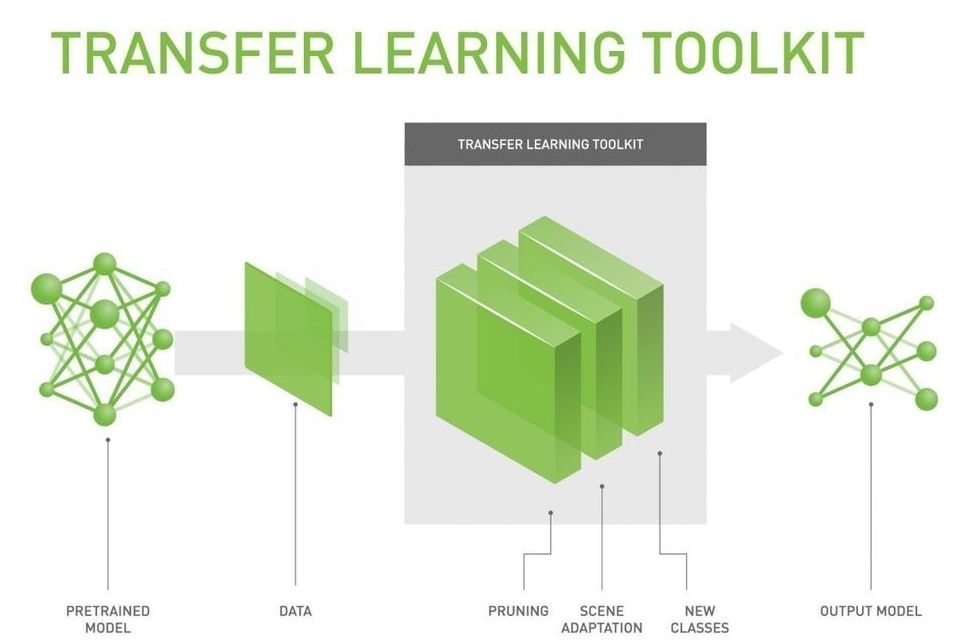

NVIDIA Transfer Learning Toolkit

The Transfer Learning Toolkit is ideal for deep learning application developers and data scientists seeking a faster, more efficient deep learning training workflow for industry verticals such as Intelligent Video Analytics (IVA) and Medical Imaging. The toolkit abstracts and accelerates deep learning training by letting developers fine-tune domain-specific pre-trained models provided by NVIDIA instead of going through the time-consuming process of building Deep Neural Networks (DNNs) from scratch. The pre-trained models speed up the developer’s deep learning training process and eliminate the higher costs associated with large-scale data collection, labeling, and training models from scratch.
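To make the idea concrete, here is a minimal, framework-free Python sketch of one common form of transfer learning: a layer with "pre-trained" weights is frozen, and only a new classification head is trained on a small new dataset. It is not the Transfer Learning Toolkit's API; the weights, dataset, and function names are all hypothetical, and full fine-tuning would typically also update some of the earlier layers.

```python
import math
import random

# Hypothetical "pre-trained" feature extractor weights. In a real workflow these
# would come from a model trained on a large dataset; here they are fixed numbers.
PRETRAINED_WEIGHTS = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]


def extract_features(x, weights):
    """Frozen layer: linear map followed by ReLU. Its weights are never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, weights, epochs=500, lr=0.5, seed=0):
    """Train only a new logistic-regression head on top of the frozen features."""
    rng = random.Random(seed)
    head = [rng.uniform(-0.1, 0.1) for _ in range(len(weights))]
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x, weights)
            p = sigmoid(sum(h * fi for h, fi in zip(head, f)) + bias)
            err = p - y  # gradient of the log loss with respect to the logit
            head = [h - lr * err * fi for h, fi in zip(head, f)]
            bias -= lr * err
    return head, bias


def predict(x, weights, head, bias):
    f = extract_features(x, weights)
    return sigmoid(sum(h * fi for h, fi in zip(head, f)) + bias)


# Small, hypothetical "new domain" dataset: the label is 1 when x[0] > x[1].
NEW_DATA = [([2.0, 0.0], 1), ([1.0, 0.0], 1), ([3.0, 1.0], 1),
            ([0.0, 2.0], 0), ([0.0, 1.0], 0), ([1.0, 3.0], 0)]

if __name__ == "__main__":
    head, bias = train_head(NEW_DATA, PRETRAINED_WEIGHTS)
    for x, y in NEW_DATA:
        print(x, y, round(predict(x, PRETRAINED_WEIGHTS, head, bias), 3))
```

Because only `head` and `bias` are updated, the pre-trained weights come out of training unchanged, which is what makes this kind of retraining much cheaper than training the whole network from scratch.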

The term “transfer learning” means extracting the features an existing neural network has learned and reusing them in a new network by transferring its weights. The Transfer Learning Toolkit is a Python-based toolkit that lets developers take advantage of NVIDIA’s pre-trained models, add their own data, and retrain the networks so they become smarter and adapt to the changes. The ability to easily add, prune, and retrain networks improves the efficiency and accuracy of the deep learning training workflow.
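Pruning removes connections that contribute little to a network's output. The Python sketch below shows magnitude-based pruning, one common criterion, plus a retraining update that keeps pruned weights at zero. We are not claiming this is how the Transfer Learning Toolkit prunes models; both function names are hypothetical.

```python
def magnitude_prune(weights, fraction):
    """Zero out the `fraction` of weights with the smallest absolute values.

    Returns the pruned weights and a 0/1 mask. During retraining the mask is
    reapplied after every update so pruned connections stay at zero.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    n_prune = int(len(weights) * fraction)
    # Indices sorted from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    mask = [0 if i in pruned_idx else 1 for i in range(len(weights))]
    pruned = [w if m else 0.0 for w, m in zip(weights, mask)]
    return pruned, mask


def masked_update(weights, grads, mask, lr):
    """One gradient-descent retraining step that leaves pruned weights at zero."""
    return [w - lr * g if m else 0.0 for w, g, m in zip(weights, grads, mask)]
```

For example, pruning half of `[0.9, -0.05, 0.3, 0.01, -0.7, 0.2]` zeros the three smallest-magnitude entries and yields the mask `[1, 0, 1, 0, 1, 0]`; subsequent calls to `masked_update` retrain only the surviving weights.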

Meet the ‘preeminent AI company on earth,’ but can it succeed in healthcare?

Last year, my brother, then an employee at Silicon Valley-based tech company Nvidia, declared that all the AI and deep learning happening in healthcare is being powered by Nvidia’s graphics processing units (GPUs). However, that’s just the tip of the iceberg.

Nvidia holds a dominant position in terms of making the chips that power artificial intelligence projects, but can the Silicon Valley tech company with roots in the world of gaming and graphics succeed in healthcare?

New milestones in helping prevent eye disease with Verily

Over the last three years, Google and Verily (Alphabet’s life sciences and healthcare arm) have developed a machine learning algorithm that makes it easier to screen for disease and expands access to screening for diabetic retinopathy (DR) and diabetic macular edema (DME). As part of this effort, we’ve conducted a global clinical research program with a focus on India. Today, we’re sharing that the first real-world clinical use of the algorithm is underway at the Aravind Eye Hospital in Madurai, India.

Google and Verily share updates to their initiative to diagnose diabetic eye disease leveraging machine learning.

Open-source software tracks neural activity in real time

“A software tool called CaImAn automates the arduous process of tracking the location and activity of neurons. It accomplishes this task using a combination of standard computational methods and machine-learning techniques. In a new paper, the software’s creators demonstrate that CaImAn achieves near-human accuracy in detecting the locations of active neurons based on calcium imaging data.”

Journal Article: https://www.simonsfoundation.org/…/caiman-calcium-imaging-…/

The ‘Digit’ robot could be the future of humanoid pizza deliveries

The Atlas robot has some new bipedal competition.

FedEx turns to Segway inventor to build delivery robot

FedEx is the latest company to join the delivery robot craze.

The company said Wednesday it will test a six-wheeled, autonomous robot called the SameDay Bot in Memphis, Tenn., this summer and plans to expand to more cities.

It’s partnering with major brands, including Walmart, Target, Pizza Hut and AutoZone, to understand how delivery robots could help other businesses.

Researchers develop a fleet of 16 miniature cars for cooperative driving experiments

A team of researchers at the University of Cambridge has recently introduced a unique experimental testbed for cooperative driving experiments. The testbed, presented in a paper pre-published on arXiv, consists of 16 miniature Ackermann-steering vehicles called Cambridge Minicars.
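Ackermann steering angles the front wheels so that all four wheels turn about a common center, with the inner wheel steered more sharply than the outer one. The Python sketch below computes the ideal inner and outer angles and advances the kinematic bicycle model that is often used to simulate such cars. The wheelbase, track width, and turn radius values are hypothetical, not the Cambridge Minicars' specifications, and the function names are illustrative.

```python
import math


def ackermann_angles(wheelbase, track_width, turn_radius):
    """Ideal Ackermann front-wheel steering angles (inner, outer), in radians.

    `turn_radius` is measured from the turn center to the middle of the rear
    axle. Under ideal Ackermann geometry every wheel's axis passes through that
    center, so the inner wheel is steered more sharply than the outer one.
    """
    if turn_radius <= track_width / 2:
        raise ValueError("turn radius must exceed half the track width")
    inner = math.atan(wheelbase / (turn_radius - track_width / 2))
    outer = math.atan(wheelbase / (turn_radius + track_width / 2))
    return inner, outer


def bicycle_step(x, y, heading, speed, steer, wheelbase, dt):
    """Advance the kinematic bicycle model (rear-axle reference) by one Euler step."""
    x += speed * math.cos(heading) * dt
    y += speed * math.sin(heading) * dt
    heading += speed / wheelbase * math.tan(steer) * dt
    return x, y, heading


if __name__ == "__main__":
    # Hypothetical small car: 0.1 m wheelbase, 0.08 m track, 1 m turn radius.
    inner, outer = ackermann_angles(0.1, 0.08, 1.0)
    print(f"inner {math.degrees(inner):.1f} deg, outer {math.degrees(outer):.1f} deg")
```

For these hypothetical dimensions the inner wheel turns about 5.9° and the outer wheel about 5.5°.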

“Using true-scale facilities for vehicle testbeds is expensive and requires a vast amount of space,” said Amanda Prorok, one of the researchers behind the testbed. “Our main objective was to build a low-cost, multi-vehicle experimental setup that is easy to maintain and that is easy to use to prototype new autonomous driving algorithms. In particular, we were interested in testing and tangibly demonstrating the benefits of cooperative driving on multi-lane road topographies.”

Studies investigating cooperative driving are often expensive and time-consuming because few low-cost platforms are available for researchers to test their systems and algorithms. Prorok and her colleagues therefore set out to develop an effective and inexpensive experimental testbed that could ultimately support research into cooperative driving and multi-car navigation.