The vision of human-level machine intelligence laid out by Alan Turing in the 1950s is now a reality. Eyes unclouded by dread or hype will help us to prepare for what comes next.

I’m Gemechu. I’m a software engineer and AI builder finishing my Master’s in CS at LMU Los Angeles this May.

I’m looking to join a team, either full-time or as an intern!

For context, I have hands-on experience shipping AI-powered products to production. I recently built https://www.vehique.ai/, a conversational vehicle marketplace, from scratch: I designed the multi-agent architecture, built the full stack, and scaled it to over 3,000 monthly users. Before that, I worked in research engineering roles at a couple of seed-stage startups.

I have experience building end to end, whether that’s the AI layer, backend and infrastructure, or full-stack product work.

I’m looking to join a team where I can build impactful products.

If you’re hiring or know someone who might be, please feel free to reach out.

🌿 All my projects and experience are on my portfolio — https://gemechu.xyz/

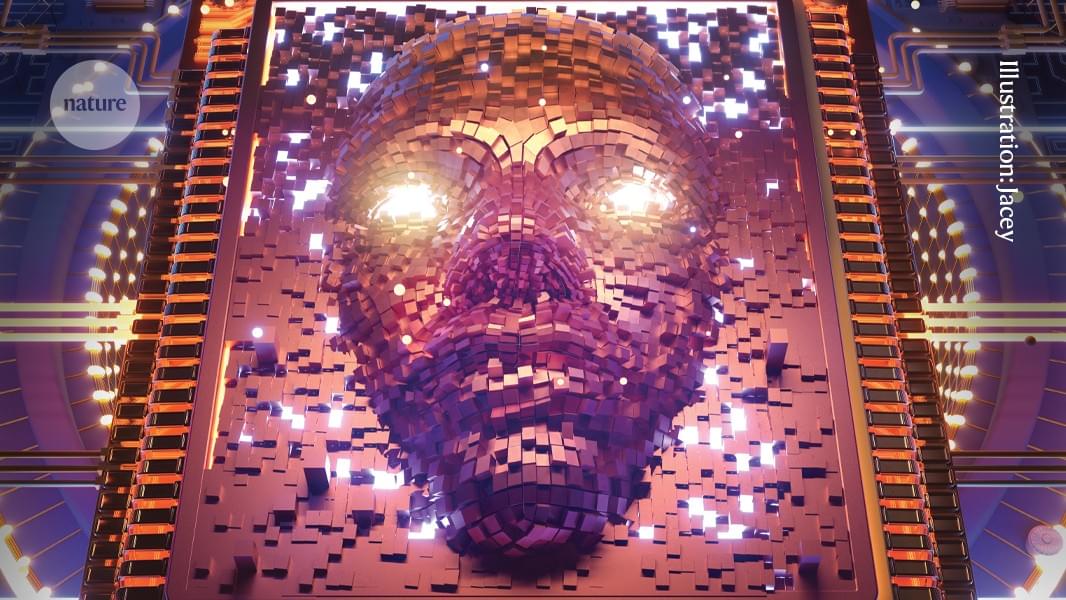

Emerging technologies from AI and extended reality to edge computing, digital twins, and more are driving big changes in the manufacturing world.

Download the February 2026 issue of the Enterprise Spotlight from the editors of CIO, Computerworld, CSO, InfoWorld, and Network World to learn about the new technologies at the forefront of innovation and how they are poised to reshape the way companies operate, compete, and deliver value in a rapidly evolving digital landscape.

Please see my LinkedIn article: “Securing the Neural Frontier.”

We are poised to witness one of the most significant technological advancements in human history: direct interaction between human brains and machines. Brain-computer interfaces (BCIs), neurotechnology, and brain-inspired computing have already arrived, and they need to be secured.


NVIDIA CEO Jensen Huang discusses how artificial intelligence is advancing and how to handle competition with China on ‘Maria Bartiromo’s Wall Street.’

Watch more Fox Business Video: https://video.foxbusiness.com.

Watch Fox Business Network Live: http://www.foxnewsgo.com/

FOX Business Network (FBN) is a financial news channel that delivers real-time information impacting both Main Street and Wall Street across all platforms. Headquartered in New York, the business capital of the world, FBN launched in October 2007 and is one of the leading business networks on television. FBN opened 2025 by posting double-digit advantages in business day, market hours, and total day viewers in January. The network also continued to lead business news programming, with each business day program placing among the top 15 shows, and it delivered its highest-rated month in market hours since April 2023.


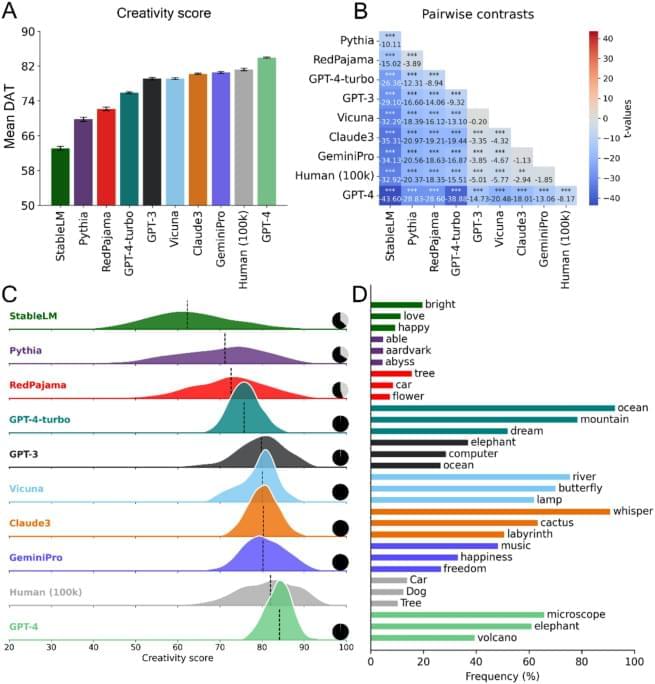

Scientific Reports volume 16, Article number: 1279 (2026).

Apple’s blockbuster holiday quarter was impressive, but it shouldn’t give the company cover to avoid an AI reckoning. Also: a new MacBook Pro is planned for the macOS 26.3 release cycle, the company is exploring a clamshell follow-up to its upcoming foldable phone, and an updated AirTag finally rolls out.

Last week in Power On: Inside Apple’s AI shake-up and its plans for two new versions of Siri powered by Gemini.