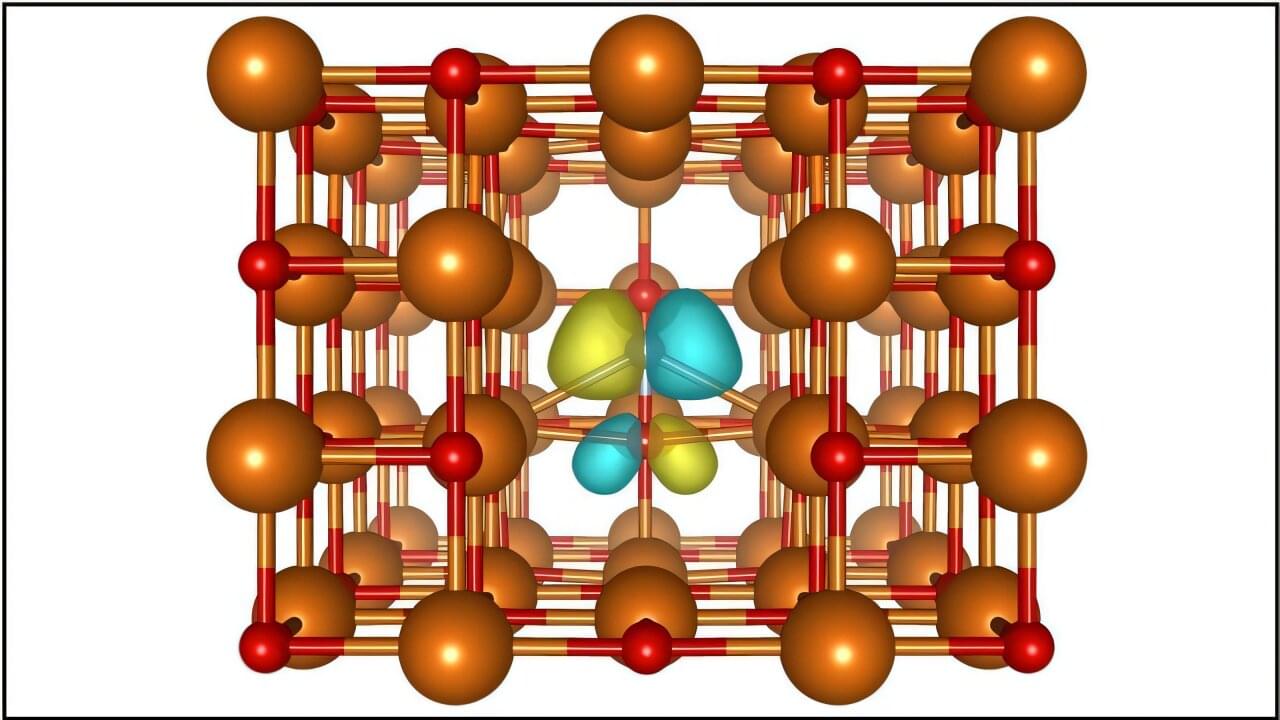

Scientists have been studying a fascinating material called uranium ditelluride (UTe₂), which becomes a superconductor at low temperatures.

Superconductors can carry electricity without any resistance, and UTe₂ is special because it might belong to a rare class called spin-triplet superconductors. Such materials can remain superconducting even in strong magnetic fields, and they could also host exotic quantum states useful for future technologies.

However, one big mystery remained: what is the symmetry of UTe₂'s superconducting state? This symmetry determines how the electrons pair up and how those pairs behave in the material. To solve this puzzle, researchers used a highly sensitive instrument, a scanning tunneling microscope (STM) with a superconducting tip. They detected distinctive signals, zero-energy surface states, that allowed them to test different theoretical possibilities against one another.

Their results suggest that UTe₂ is a nonchiral superconductor, meaning its electron pairs have no preferred handedness (left or right). Instead, the results point to one of three possible symmetries (B₁ᵤ, B₂ᵤ, or B₃ᵤ), with B₃ᵤ being the most likely if electrons scatter in a particular way along one crystal axis.

This discovery brings scientists closer to understanding UTe₂'s unusual superconducting behavior, which could one day help in the design of more robust quantum materials.

For now, UTe₂ is superconducting only at very low temperatures (about 1.6 K), so raising its critical temperature is a major goal.

Scaling up production and integrating the material into devices will also require further materials engineering.