The best supercomputers in the world have fewer than 50,000 GPUs. How in the world is someone going to build an AI cluster with 1.2 million GPUs?

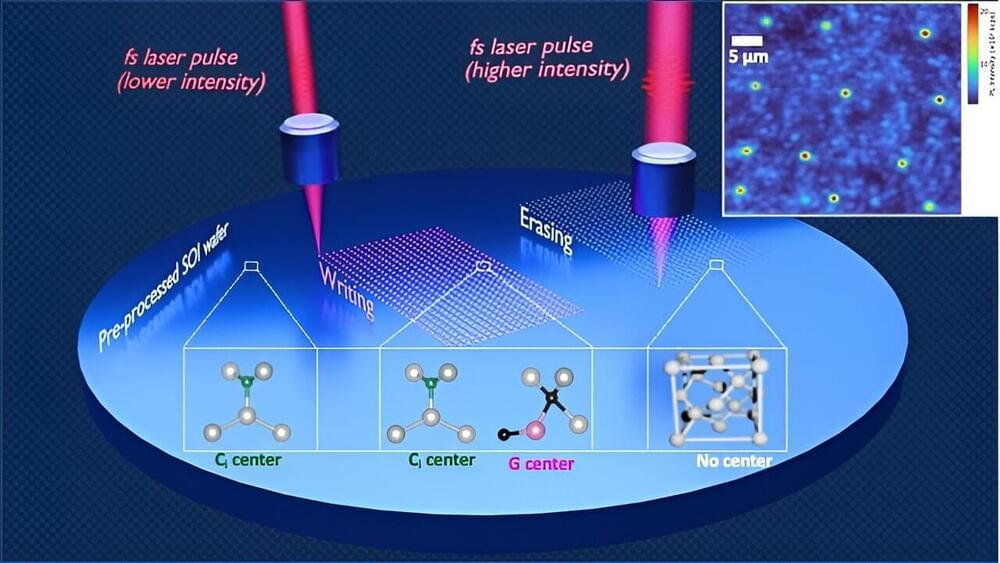

The result is a significant advancement in the field, showcasing the practical applicability of quantum computing in solving complex material science problems. Furthermore, the researchers discovered factors that can improve the durability and energy efficiency of quantum memory devices. The findings have been published in Nature Communications.

In the early 1980s, Richard Feynman asked whether it was possible to model nature accurately using a classical computer. His answer was no. The world consists of fundamental particles described by the principles of quantum physics, and the number of variables that must be included in the calculations grows exponentially with the number of particles, pushing even the most powerful supercomputers to their limits. Instead, Feynman suggested using a computer that was itself made up of quantum particles. For this vision, many consider Feynman the Father of Quantum Computing.
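To make the exponential growth concrete, here is a minimal sketch, assuming each particle is a simple two-level (spin-1/2) system and that a classical computer stores the full quantum state as a dense vector of complex amplitudes (16 bytes each, as with NumPy's `complex128`). The function name `state_vector_bytes` is purely illustrative. Under these assumptions, n particles require 2^n amplitudes, so 30 particles already need 16 GiB and 50 particles need 16 PiB.

```python
# Illustrative only: assumes two-level (spin-1/2) particles and a dense state
# vector of complex128 amplitudes. Real simulation codes may exploit structure
# to do better, but the naive cost shows why the problem explodes.
BYTES_PER_AMPLITUDE = 16  # one complex128 = two 64-bit floats


def state_vector_bytes(n_particles: int) -> int:
    """Bytes needed to store the full state of n two-level particles."""
    n_amplitudes = 2 ** n_particles  # state space doubles with each particle
    return n_amplitudes * BYTES_PER_AMPLITUDE


if __name__ == "__main__":
    for n in (30, 40, 50):
        gib = state_vector_bytes(n) / 2 ** 30  # convert bytes to GiB
        print(f"{n} particles: {2 ** n:,} amplitudes, {gib:,.1f} GiB")
```

Adding just one particle doubles the memory, which is why brute-force classical simulation of many-body quantum systems quickly outgrows any supercomputer.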

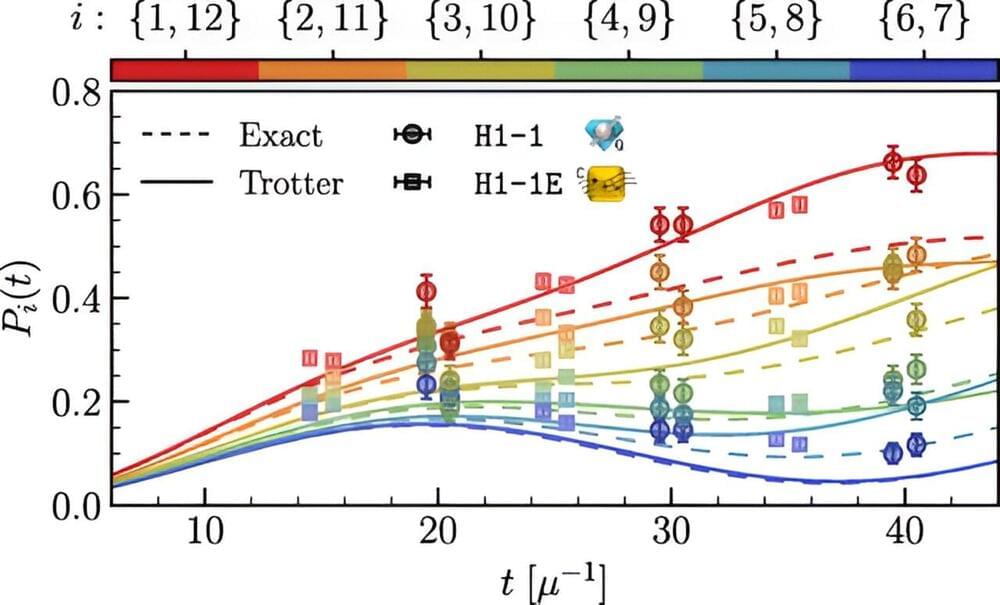

Scientists at Forschungszentrum Jülich, together with colleagues from Slovenian institutions, have now shown that this vision can actually be put into practice. The application they are looking at is a so-called many-body system. Such systems describe the behavior of a large number of particles that interact with each other.

“At this point, the neutrinos go from passive particles—almost bystanders—to major elements that help drive the collapse,” Savage said. “Supernovae are interesting for a variety of reasons, including as sites that produce heavy elements such as gold and iron. If we can better understand neutrinos and their role in the star’s collapse, then we can better determine and predict the rate of events such as a supernova.”

Scientists seldom observe a supernova close-up, but researchers have used classical supercomputers such as ORNL’s Summit to model aspects of the process. Those tools alone wouldn’t be enough to capture the quantum nature of neutrinos.

“These neutrinos are entangled, which means they’re interacting not just with their surroundings and not just with other neutrinos but with themselves,” Savage said.

Quantum computers have the potential to solve complex problems in human health, drug discovery, and artificial intelligence millions of times faster than some of the world’s fastest supercomputers. A network of quantum computers could advance these discoveries even faster. But before that can happen, the computer industry will need a reliable way to string together billions of qubits—or quantum bits—with atomic precision.

Memphis may get the most powerful supercomputer yet.

Memphis, Tennessee, may host the world’s largest supercomputer, the “Gigafactory of Compute.”

The Memphis Shelby County Economic Development Growth Engine (EDGE), Tennessee Valley Authority (TVA), and governing authorities hold the key to finalizing the project. If approved, it would be the largest investment in Memphis history.

According to Memphis Mayor Paul Young, the city boasts “an ideal site, ripe for investment,” coupled with a skilled workforce that can “keep up with the pace required to land this transformational project,” Business Insider reported.

The supercomputer itself is expected to be a technological marvel. It will be powered by 100,000 Nvidia H100 GPUs, currently some of the most sought-after chips in the AI industry, as reported by The Information.

NVIDIA Omniverse is a development platform for virtual world simulation, combining real-time physically based rendering, physics simulation, and generative AI technologies.

In Omniverse, robots can learn to be robots – minimizing the sim-to-real gap, and maximizing the transfer of learned behavior.

Building robots with generative physical AI requires three computers:

- NVIDIA AI supercomputers to train the models.

- NVIDIA Jetson Orin and the next-generation Jetson Thor robotics supercomputer, to run the models.

- And NVIDIA Omniverse, where robots can learn and refine their skills in simulated worlds.



One way to manage the unsustainable energy requirements of the computing sector is to fundamentally change the way we compute. Superconductors could let us do just that.

Superconductors offer the possibility of drastically lowering energy consumption because they do not dissipate energy when passing current. True, superconductors work only at cryogenic temperatures, requiring some cooling overhead. But in exchange, they offer virtually zero-resistance interconnects, digital logic built on ultrashort pulses that require minimal energy, and the capacity for incredible computing density due to easy 3D chip stacking.

Are the advantages enough to overcome the cost of cryogenic cooling? Our work suggests they most certainly are. As the scale of computing resources gets larger, the marginal cost of the cooling overhead gets smaller. Our research shows that starting at around 10^16 floating-point operations per second (tens of petaflops), the superconducting computer handily becomes more power efficient than its classical cousin. This is exactly the scale of typical high-performance computers today, so the time for a superconducting supercomputer is now.

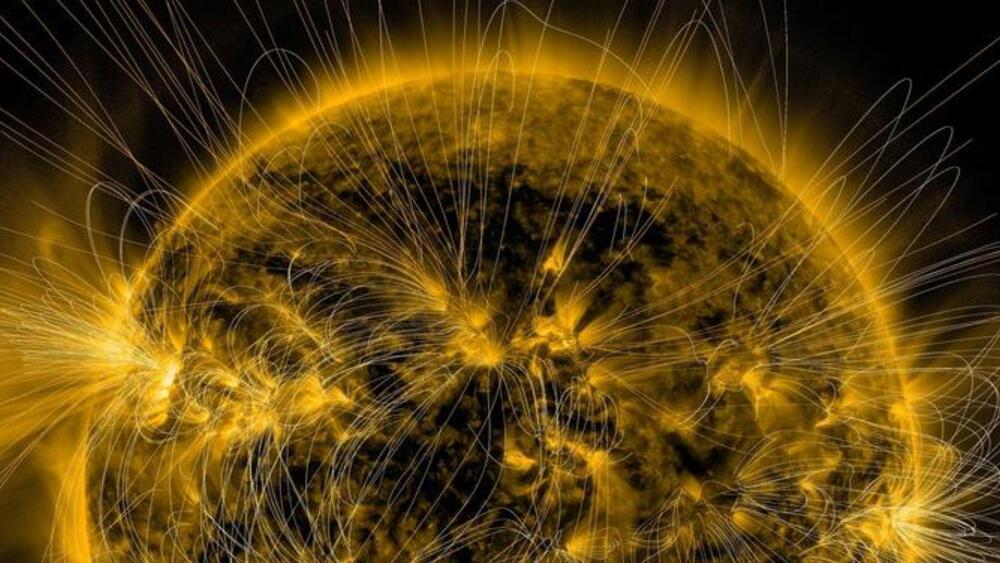

A new study reveals the sun’s magnetic field originates closer to the surface, solving a 400-year-old mystery first probed by Galileo and enhancing solar storm forecasting.

An international team of researchers, including Northwestern University engineers, is getting closer to solving a 400-year-old solar mystery that stumped even famed astronomer Galileo Galilei.

Since first observing the sun’s magnetic activity, astronomers have struggled to pinpoint where the process originates. Now, after running a series of complex calculations on a NASA supercomputer, the researchers discovered the magnetic field is generated about 20,000 miles below the sun’s surface.