The Standard Model of Particle Physics encapsulates nearly everything we know about the tiny quantum-scale particles that make up our everyday world. It is a remarkable achievement, but it is also incomplete and rife with unanswered questions. To fill the gaps in our knowledge and discover new laws of physics beyond the Standard Model, we must study exotic phenomena and states of matter that don’t exist in our everyday world. These include the high-energy collisions of particles and nuclei that take place in the fiery hearts of stars, in cosmic ray events occurring all across Earth’s upper atmosphere, and in particle accelerators like the Large Hadron Collider (LHC) at CERN or the Relativistic Heavy Ion Collider at Brookhaven National Laboratory.

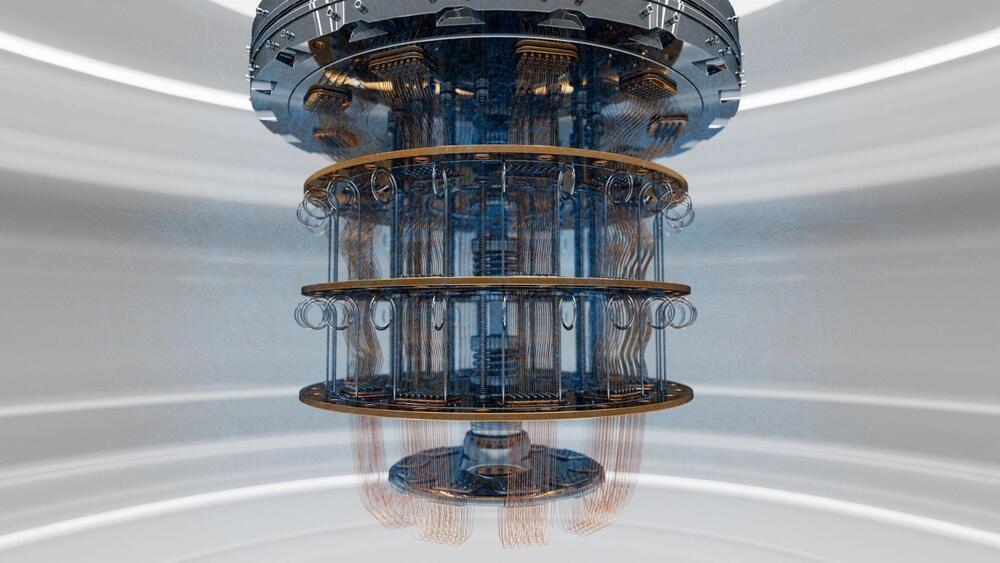

Computer simulations of fundamental physics processes play an essential role in this research, but many important questions require simulations that are far too complex for even the most powerful classical supercomputers. Now that utility-scale quantum computers have demonstrated the ability to simulate quantum systems at a scale beyond exact or “brute force” classical methods, researchers are exploring how these devices might help us run simulations and answer scientific questions that are inaccessible to classical computation. In two recent papers published in PRX Quantum (PRX)<sup>1</sup> and Physical Review D (PRD)<sup>2</sup>, our research group did just that, developing scalable techniques for simulating the real-time dynamics of quantum-scale particles using the IBM® fleet of utility-scale, superconducting quantum computers.

The techniques we’ve developed could serve as building blocks for future quantum computer simulations that are inaccessible to both exact and approximate classical methods. Such simulations would demonstrate what we call “quantum advantage” over all known classical techniques. Our results provide clear evidence that these simulations are potentially within reach of the quantum hardware we have today.