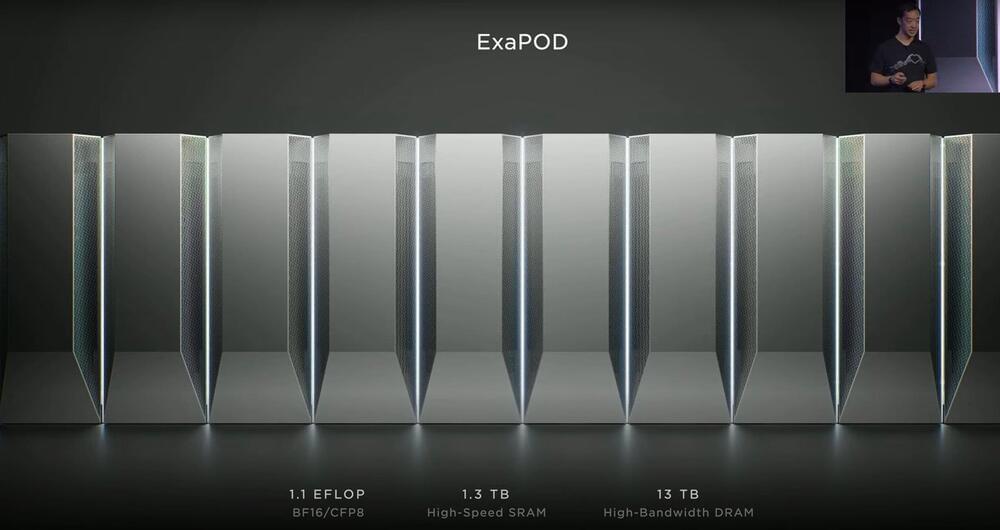

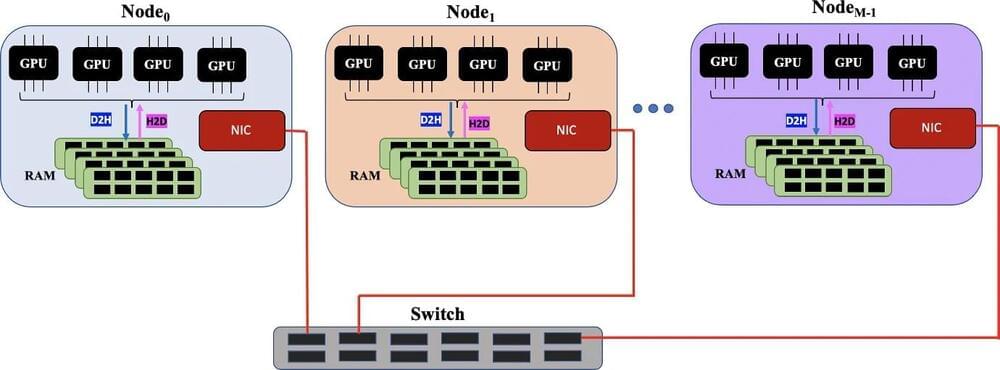

Tesla is reportedly increasing its orders for Dojo D1 supercomputer chips. The D1 is a custom Tesla application-specific integrated circuit (ASIC) designed for the Dojo supercomputer, and it is reportedly ordered from Taiwan Semiconductor Manufacturing Company (TSMC).

Citing a source reportedly familiar with the matter, Taiwanese publication Economic Daily noted that Tesla will double its Dojo D1 chip orders to 10,000 units for the coming year. Given the Dojo supercomputer’s scalability, expectations are high that the volume of D1 chip orders from TSMC will continue to increase until 2025.

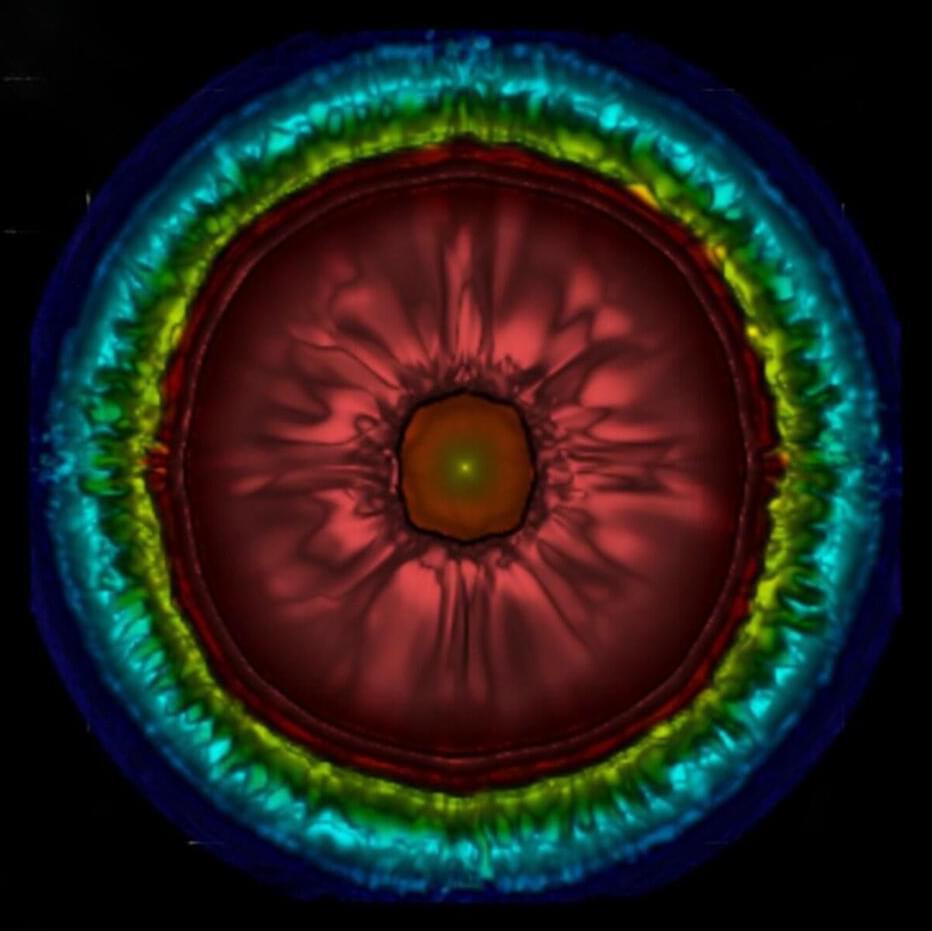

After all, Tesla is expected to use Dojo to train its driver-assist systems and self-driving AI models. With the rollout of projects like FSD, the dedicated robotaxi, and Optimus, Dojo’s contributions to the company’s operations will likely become more substantial.