Why modern enterprises need to adopt cognitive computing for faster business growth in a digital economy

Cognitive computing (CC) technology aims to make computers adept at mimicking the processes of the human brain, in essence making them more intelligent. Although the phrase "cognitive computing" is often used synonymously with AI, the term is closely associated with IBM’s cognitive computer system, Watson. IBM Watson is a supercomputer that leverages AI-based technologies such as machine learning (ML), real-time analysis, and natural language processing to augment decision making and deliver superior outcomes.

The Internet Knows You Better Than Your Spouse Does

The traces we leave on the Web and on our digital devices can give advertisers and others surprising, and sometimes disturbing, insights into our psychology.

- By Frank Luerweg on March 14, 2019

Prototype watch uses your body to prevent hacking of wearables and implants

We’re used to the security risks posed by someone hacking into our computers, tablets, and smartphones, but what about pacemakers and other implanted medical devices? To help prevent possible murder-by-hacker, engineers at Purdue University have come up with a watch-like device that turns the human body into its own network as a way to keep personal technology private.

Enjin Plans to Mobilize 20 Million Gamers to Fight Aging

A collaboration between online technology company Enjin and the SENS Research Foundation has just been announced with the bold plan to mobilize a community of 20 million video gamers to help fight aging.

Enjin is a cryptocurrency and online video game company with a plan to change how donors and charities interact in a bid to make fundraising for globally important causes more effective.

This collaboration with SENS Research Foundation is the first program on the road to this goal. The project is essentially gamifying the fundraising experience to make it more fun and engaging for donors. To achieve this, donors get rewards for their donations in the form of blockchain-based collectibles known as “non-fungible tokens” (NFTs).

What If Google and the Government Merged?

My colleague Conor Sen recently made a bold prediction: Government will be the driver of the U.S. economy in coming decades. The era of Silicon Valley will end, supplanted by the imperatives of fighting climate change and competing with China.

This would be a momentous change. The biggest tech companies, namely Amazon.com, Apple Inc., Facebook Inc., Google (Alphabet Inc.) and (a bit surprisingly) Microsoft Corp., have increasingly dominated both the headlines and the U.S. stock market.

To Compete With Google, OpenAI Seeks Investors–and Profits

On Monday, OpenAI’s leaders said that a paltry $1 billion wouldn’t be enough to compete with the well-resourced AI labs at companies such as Google and Facebook after all. They announced the new investment vehicle, a company called OpenAI LP, as a way to raise extra money for the computing power and people needed to steer the destiny of AI. Musk left the board of OpenAI last February and is not formally involved in OpenAI LP.

OpenAI, the independent research lab cofounded by Elon Musk, created a for-profit arm to attract more funding to hire researchers and run computers.

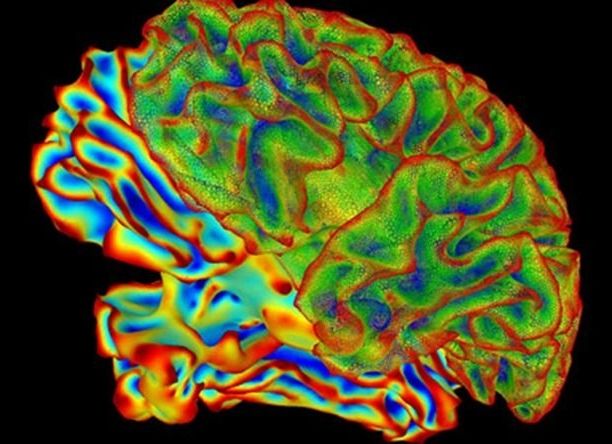

Fungi cause brain infection and memory impairment in mice

We are learning more about the links between fungal infection and neurological disease. Recently we learned of a connection between gingival disease and Alzheimer’s. I wonder whether dietary plants with antifungal properties, such as Moringa, might help.

Fungal infections are emerging as a major medical challenge, and a team led by researchers at Baylor College of Medicine has developed a mouse model to study the short-term consequences of fungal infection in the brain.

The researchers report in the journal Nature Communications the unexpected finding that the common yeast Candida albicans, a type of fungus, can cross the blood-brain barrier and trigger an inflammatory response that results in the formation of granuloma-type structures and temporary mild memory impairments in mice. Interestingly, the granulomas share features with plaques found in Alzheimer’s disease, supporting future studies on the long-term neurological consequences of sustained C. albicans infection.

“An increasing number of clinical observations by us and other groups indicates that fungi are becoming a more common cause of upper airway allergic diseases such as asthma, as well as other conditions such as sepsis, a potentially life-threatening disease caused by the body’s response to an infection,” said corresponding author Dr. David B. Corry, professor of medicine-immunology, allergy and rheumatology and Fulbright Endowed Chair in Pathology at Baylor College of Medicine.