Find out more about how oral bacteria can alter the balance between health and disease beyond the oral cavity.

Researchers at the Massachusetts Institute of Technology (MIT) discovered that adding a highly conductive substance called carbon black to a mixture of water and cement created a construction material that could also serve as a supercapacitor.

Supercapacitors can charge and discharge extremely efficiently but are typically not capable of storing energy for long periods. So while they lack the functionality of traditional lithium-ion batteries – which are found in everything from smartphones to electric cars – they offer a useful way to store excess electricity generated from renewable sources such as solar and wind.

Since unveiling the technology last year, the team has built a working proof-of-concept concrete battery, the BBC reported. The MIT researchers now hope to build a 45-cubic-metre (1,590-cubic-foot) version capable of meeting the energy needs of a residential home.

A team of roboticists at the University of Tokyo has taken a new approach to autonomous driving: instead of automating the entire car, they simply put a robot in the driver’s seat. The group built a robot capable of driving a car, tested it on a real-world track, and published a paper describing their work on the arXiv preprint server.

Recent years have seen increasing public attention, and indeed concern, regarding Unidentified Anomalous Phenomena (UAP). Hypotheses for such phenomena tend to fall into two classes: a conventional terrestrial explanation (e.g., human-made technology), or an extraterrestrial explanation (i.e., advanced civilizations from elsewhere in the cosmos). However, there is also a third, minority class of hypothesis: an unconventional terrestrial explanation, outside the prevailing consensus view of the universe. This is the ultraterrestrial hypothesis, which includes as a subset the “cryptoterrestrial” hypothesis, namely the notion that UAP may reflect the activities of intelligent beings concealed here on Earth (e.g., underground), and/or in its near environs (e.g., the moon), and/or even “walking among us” (e.g., passing as humans). Although this idea is likely to be regarded sceptically by most scientists, the nature of some UAP is such that we argue this possibility should not be summarily dismissed, and instead deserves genuine consideration in a spirit of epistemic humility and openness.

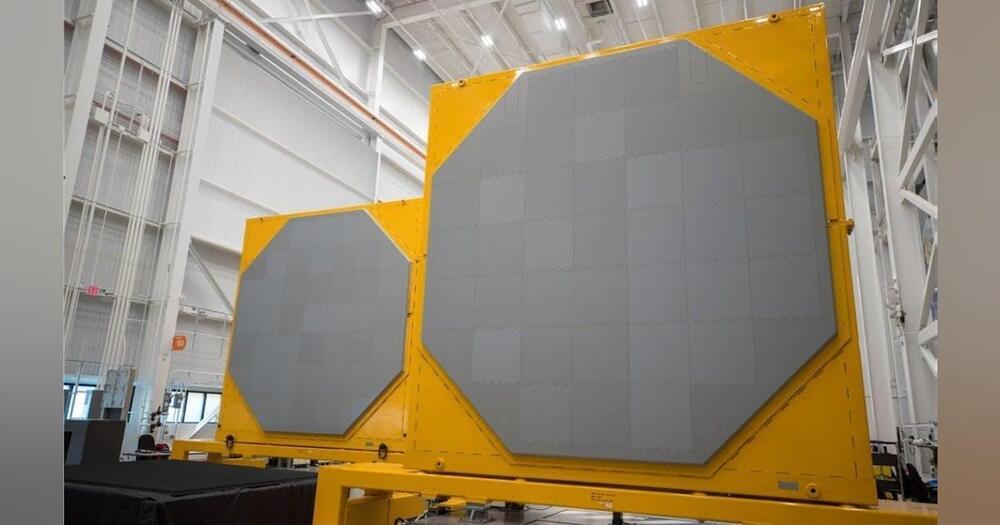

WASHINGTON – Shipboard radar experts at RTX Corp. will build hardware for the new AN/SPY-6(V) Air and Missile Defense Radar (AMDR), which will be integrated into late-model Arleigh Burke-class (DDG 51) Aegis destroyer surface warships under terms of a $677.7 million U.S. Navy order announced Friday.

Officials of the Naval Sea Systems Command in Washington are asking the RTX Raytheon segment in Marlborough, Mass., for AN/SPY-6(V) shipboard radar hardware.

The Raytheon AN/SPY-6(V) AMDR will improve the Burke-class destroyer’s ability to detect hostile aircraft, surface ships, and ballistic missiles, Raytheon officials say. The AMDR will supersede the AN/SPY-1 radar, which has been standard equipment on Navy Aegis Burke-class destroyers and Ticonderoga-class cruisers.

The constellation will eventually include 100 satellites providing global coverage of advanced missile launches. For now, the handful of spacecraft in orbit offers limited coverage. SDA Director Derek Tournear told reporters in April that coordinating tracking opportunities for the satellites is a challenge because they have to be positioned over the area where missile tests are taking place.

He noted that along with tracking routine Defense Department test flights, the satellites are also scanning global hot spots for missile activity as they orbit the Earth.

The flight the satellites tracked was the first for MDA’s Hypersonic Testbed, or HTB-1. The vehicle serves as a platform for various hypersonic experiments and advanced components and joins a growing inventory of high-speed flight test systems. That includes the Test Resource Management Center’s Multi-Service Advanced Capability Hypersonic Test Bed and the Defense Innovation Unit’s Hypersonic and High-Cadence Airborne Testing Capabilities program.

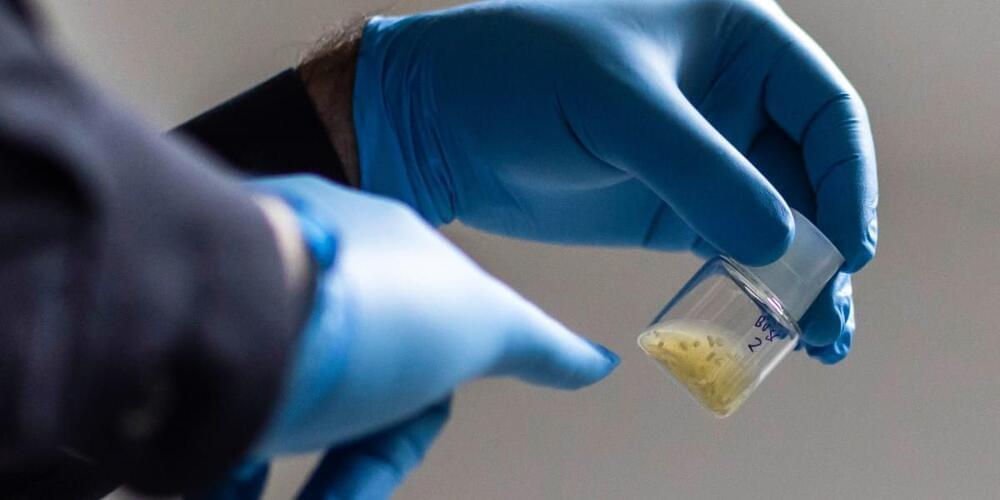

“We need a defined framework, but instead what we see here is a fairly wild race between labs,” one journal editor told me during the ISSCR meeting. “The overarching question is: How far do they go, and where do we place them in a legal-moral spectrum? How can we endorse working with these models when they are much further along than we were two years ago?”

So where will the race lead? Most scientists say the point of mimicking the embryo is to study it during the period when it would be implanting in the wall of the uterus. In humans, this moment is rarely observed. But stem-cell embryos could let scientists dissect these moments in detail.

Yet it’s also possible that these lab embryos turn out to be the real thing, so real that if they were ever transplanted into a person’s womb, they could develop into a baby.