It is the OEC’s biggest challenge.

Google’s parent, Alphabet, has waded through one controversy after another involving its top researchers and executives in the field over the past 18 months.

Richard Feynman, one of the most respected physicists of the twentieth century, said “What I cannot create, I do not understand.” Not surprisingly, many physicists and mathematicians have observed fundamental biological processes with the aim of precisely identifying the minimum ingredients that could generate them. One such example is the patterns in nature studied by Alan Turing. The brilliant English mathematician demonstrated in 1952 that it was possible to explain how a completely homogeneous tissue could give rise to a complex embryo, and he did so using one of the simplest, most elegant mathematical models ever written. One of the results of such models is that the symmetry shown by a cell or a tissue can break under a set of conditions.

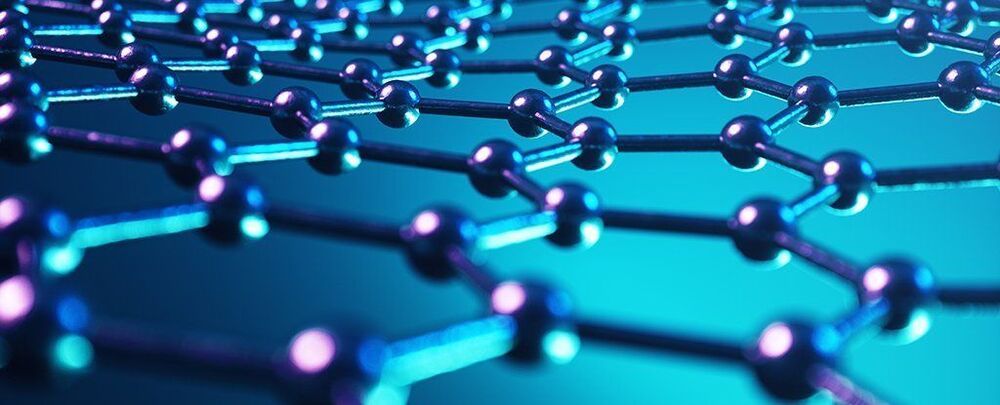

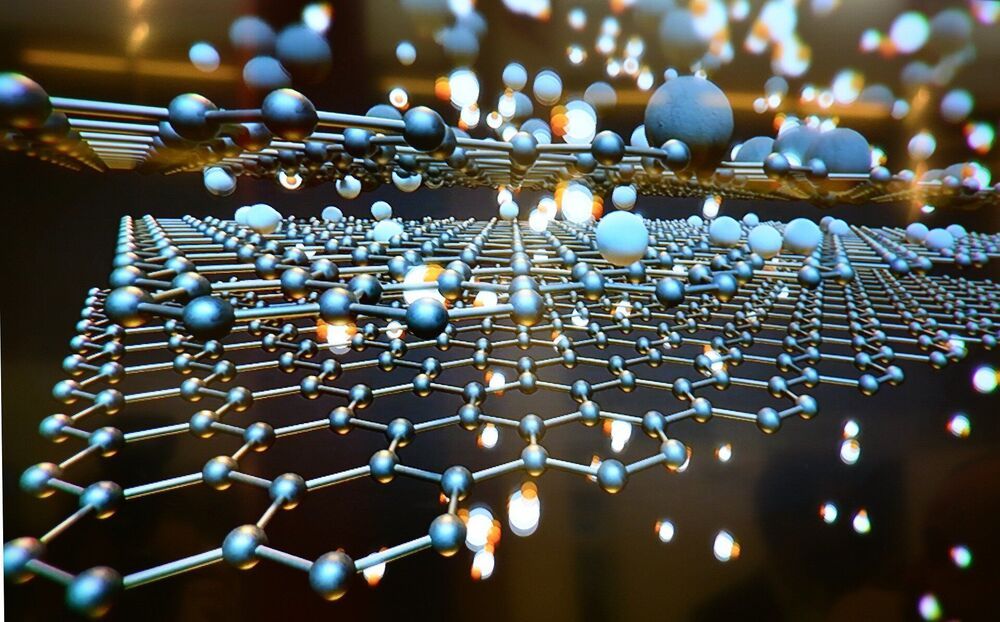

Graphene continues to dazzle us with its strength and its versatility – exciting new applications are being discovered for it all the time, and now scientists have found a way of manipulating the wonder material so that it can better filter impurities out of water.

The two-dimensional material, composed of carbon atoms, has been studied as a way of cleaning up water before, but the new method could offer the most promising approach yet. It’s all down to the exploitation of what are known as van der Waals gaps: the tiny spaces that appear between 2D nanomaterials when they’re layered on top of each other.

These nanochannels can be used in a variety of ways, which scientists are now exploring, but the thinness of graphene causes a problem for filtration: liquid has to spend much of its time travelling along the horizontal plane, rather than the vertical one, which would be much quicker.

It seems new versions are coming out at the same rate as smart phones…🤣

Featured Image Source: @ErcXspace via Twitter

SpaceX is deploying Starlink satellites to low Earth orbit on a monthly basis. The company says Starlink will become ‘the world’s most advanced broadband internet system’ capable of providing service to countries globally. To date, SpaceX’s fleet of flight-proven Falcon 9 rockets has deployed approximately 1,025 Starlink satellites over the course of eighteen missions. The satellites transmit their signal from four phased-array radio antennas. This flat type of antenna can transmit in multiple directions and frequencies without moving. Starlink will beam data over Earth’s surface at the speed of light, bypassing the limitations of our current internet infrastructure.

The researchers conducted a series of government-funded surveys from 2011 to 2020 and located potentially high-yield deposits of various essential industrial minerals from nickel to rare earths, according to a paper published in the Chinese-language Bulletin of Mineralogy, Petrology and Geochemistry last week.

Chinese researchers have spent the last decade mapping the globe’s ocean floors looking for potential mineral deposits.

Starship SN9 is continuing to wait for clearance from the Federal Aviation Administration (FAA) ahead of her test flight, with a launch date still up in the air. Although the overall schedule delay is relatively short, pent-up production cadence saw SN10 jump at the opportunity to roll down Boca Chica’s Highway 4 late last week.