Will a machine learning AI be the way we find out we are not alone in the Universe?

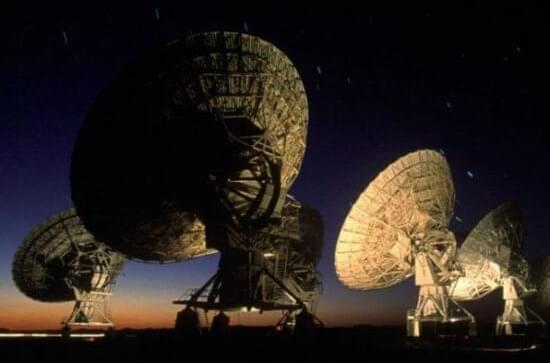

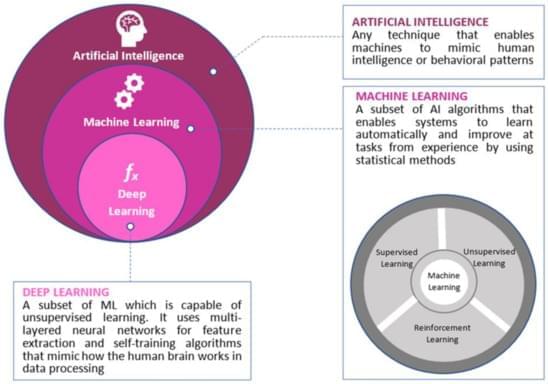

In a paper published in Nature Astronomy in January 2023, researchers from universities in Toronto, Canada; Berkeley, California; Manchester, United Kingdom; Malta; and Queensland and Western Australia, together with the SETI Institute, built a machine learning algorithm based on a variational autoencoder. A variational autoencoder is a type of neural network that learns without supervision by studying unlabelled data. The team used it to search for technosignatures in 150 terabytes of radio observations of 820 nearby stars. The data came from the Green Bank Telescope in West Virginia, the world’s largest steerable radio telescope, and had already been searched with traditional techniques in 2017.
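To show how a variational autoencoder can learn without labels, here is a minimal sketch of the standard textbook VAE objective in plain Python. It is not the study's model or code. The function names, the squared-error reconstruction term, and the toy input values are assumptions made for illustration. The optional `beta` weight on the second term follows a common variant of the method. The key point is that the loss compares each input only with its own reconstruction, so no labels are needed.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample a latent vector z = mu + sigma * eps, with eps ~ N(0, 1).

    Writing the random draw this way (the "reparameterization trick") lets
    the encoder's outputs mu and log_var be trained by gradient descent.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, exp(log_var)) to N(0, 1), summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))

def vae_loss(x, x_recon, mu, log_var, beta=1.0):
    """Reconstruction error plus a beta-weighted KL regularizer.

    Only the input x and the model's own outputs appear here: no label.
    Squared error is an assumed choice of reconstruction loss.
    """
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return reconstruction + beta * kl_to_standard_normal(mu, log_var)

# Hypothetical toy values standing in for a tiny flattened spectrogram snippet
# and the encoder/decoder outputs a trained network might produce for it.
rng = random.Random(0)
mu, log_var = [0.1, -0.2], [0.0, -0.5]
z = reparameterize(mu, log_var, rng)
loss = vae_loss([1.0, 0.5, 0.0], [0.9, 0.6, 0.1], mu, log_var)
print(z, loss)
```

In a search for unusual signals, a trained model of this kind is typically used to flag inputs it reconstructs poorly or maps to unusual regions of its latent space. How the study itself ranked its candidates is not described here.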

Radio signals are abundant throughout the Universe, and they represent the most effective way for us to find out whether we are a solo act or one of many technological civilizations. We have been adding our own signals to this radio traffic for more than a century. That means an alien civilization within about a hundred light-years of us, with technology similar to ours, could by now be in a position to detect us.
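The hundred-light-year figure comes from simple arithmetic. Radio waves travel at the speed of light, covering one light-year per year, so the radius of the "bubble" our broadcasts have filled equals the number of years we have been transmitting. Here is a minimal sketch. It assumes a round 100 years of transmission, and the function names are hypothetical.

```python
# Radio waves travel at the speed of light: one light-year per year.
LIGHT_YEARS_PER_YEAR = 1.0

def radio_bubble_radius_ly(years_transmitting: float) -> float:
    """Distance, in light-years, reached by signals sent years_transmitting years ago."""
    return LIGHT_YEARS_PER_YEAR * years_transmitting

def signal_has_arrived(distance_ly: float, years_transmitting: float) -> bool:
    """True if our earliest broadcasts have had time to reach a star distance_ly away."""
    return distance_ly <= radio_bubble_radius_ly(years_transmitting)

YEARS_TRANSMITTING = 100  # assumed round figure for "more than a century"
print(radio_bubble_radius_ly(YEARS_TRANSMITTING))   # 100.0
print(signal_has_arrived(50, YEARS_TRANSMITTING))   # True
print(signal_has_arrived(150, YEARS_TRANSMITTING))  # False
```

Arrival is only the first condition. Radio signals weaken with the square of the distance they travel, so whether a listener could actually pick ours out also depends on transmitter power and the sensitivity of their receivers.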

SETI (the Search for Extraterrestrial Intelligence) has used radio telescopes and receiving antennas since 1960 to look for signals from space with patterns similar to those we produce. So far, however, the search has proven harder than finding the proverbial needle in a haystack. That is why applying AI to the vast amounts of radio data arriving from space is seen as a step up in our efforts to detect alien intelligence. It seems ironic that an AI may be how we first discover intelligence originating in distant solar systems. And perhaps those alien species are using their own AIs to do the same.