Computer simulations help materials scientists and biochemists study the motion of macromolecules, advancing the development of new drugs and sustainable materials. However, simulating such large molecules in full detail strains even the most powerful supercomputers.

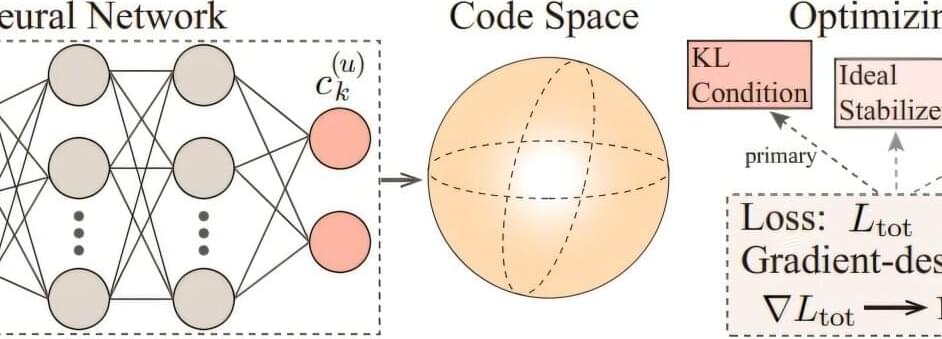

A University of Oregon graduate student has developed a new mathematical equation that significantly improves the accuracy of the simplified computer models used to study the motion and behavior of large molecules, including proteins, nucleic acids and synthetic materials such as plastics.

The breakthrough, published last month in Physical Review Letters, improves researchers’ ability to investigate how large molecules move during complex biological processes such as DNA replication. It could aid in understanding diseases linked to errors in DNA replication, potentially leading to new diagnostic and therapeutic strategies.