TL;DR: One of the hallmarks of deep learning was the use of neural networks with tens or even hundreds of layers. In stark contrast, most of the architectures used in graph deep learning are shallow with just a handful of layers. In this post, I raise a heretical question: does depth in graph neural network architectures bring any advantage?

This year, deep learning on graphs was crowned among the hottest topics in machine learning. Yet those who picture convolutional neural networks with tens or even hundreds of layers when they hear “deep” would be disappointed to see that the majority of works on graph “deep” learning use just a few layers at most. Are “deep graph neural networks” a misnomer, and should we, paraphrasing the classic, wonder whether depth should be considered harmful for learning on graphs?

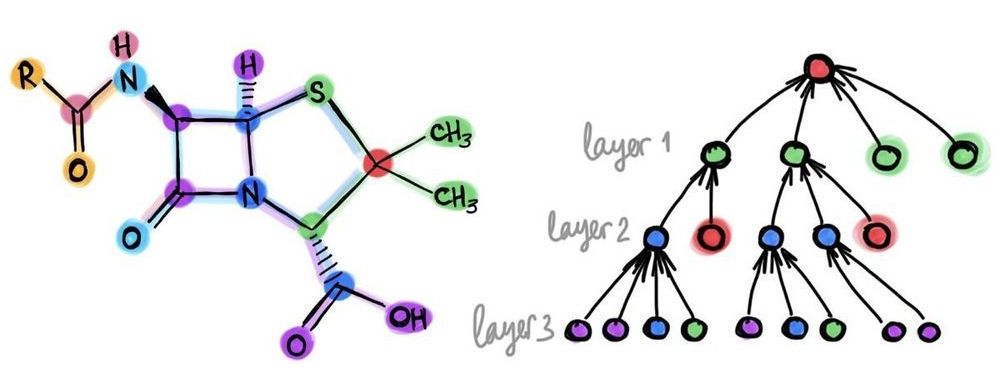

Training deep graph neural networks is hard. Besides the standard plights observed in deep neural architectures such as vanishing gradients in back-propagation and overfitting due to a large number of parameters, there are a few problems specific to graphs. One of them is over-smoothing, the phenomenon of the node features tending to converge to the same vector and become nearly indistinguishable as the result of applying multiple graph convolutional layers [1]. This behaviour was first observed in GCN models [2,3], which act similarly to low-pass filters. Another phenomenon is a bottleneck, resulting in “over-squashing” of information from exponentially many neighbours into fixed-size vectors [4].
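To make over-smoothing concrete, here is a minimal NumPy sketch. It is a simplification, not the GCN model of [2,3]: it assumes purely linear propagation with a row-normalized adjacency matrix with self-loops (mean aggregation), and no learned weights or nonlinearities. The toy path graph, the random features, and the helper names `row_normalized_adjacency` and `feature_spread` are all hypothetical choices for illustration.

```python
import numpy as np

def row_normalized_adjacency(adj):
    """Return D^-1 (A + I): each node averages over itself and its neighbours."""
    a_hat = adj + np.eye(adj.shape[0])
    return a_hat / a_hat.sum(axis=1, keepdims=True)

def feature_spread(x):
    """Largest distance between any node's feature vector and the mean feature vector."""
    return np.linalg.norm(x - x.mean(axis=0), axis=1).max()

# Hypothetical toy graph: a path of 6 nodes (0-1-2-3-4-5).
n = 6
adj = np.zeros((n, n))
for i in range(n - 1):
    adj[i, i + 1] = adj[i + 1, i] = 1.0

# Random 4-dimensional node features (assumption: any non-constant features would do).
rng = np.random.default_rng(0)
x = rng.normal(size=(n, 4))

p = row_normalized_adjacency(adj)

# Apply the propagation step repeatedly, recording the spread at selected depths.
spreads = {}
h = x.copy()
for layer in range(1, 65):
    h = p @ h
    if layer in (1, 2, 4, 8, 16, 32, 64):
        spreads[layer] = feature_spread(h)

for layer, s in spreads.items():
    print(f"layers={layer:2d}  spread={s:.6f}")
```

Under these assumptions, the printed spread should shrink towards zero as the number of propagation steps grows, meaning that all nodes end up with nearly the same feature vector. With the symmetric normalization more commonly used in GCN-type layers, I would expect the limit to be scaled by node degree rather than identical across nodes, but the loss of distinguishing information is the same in spirit.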
