Advances in deep learning have influenced a wide range of scientific and industrial applications of artificial intelligence. Many of these involve complex sequential data processing: natural language processing, conversational AI, time series analysis, and even data that is only indirectly sequential, such as images and graphs. Recurrent Neural Networks (RNNs) and Transformers are the most common approaches, and each has advantages and disadvantages. RNNs need less memory, especially on long sequences. However, they scale poorly because of the vanishing gradient problem and because training cannot be parallelized across the time dimension.

Transformers emerged as an effective alternative: they handle both short- and long-term dependencies and allow parallelized training. In natural language processing, models like GPT-3, ChatGPT, LLaMA, and Chinchilla demonstrate the power of Transformers. However, the self-attention mechanism has quadratic complexity in sequence length, which makes it expensive in both compute and memory and poorly suited to long sequences or resource-constrained settings.
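
To make the quadratic cost concrete, here is a minimal pure-Python sketch of the score matrix that scaled dot-product self-attention computes before the softmax. The function names `attention_scores` and `score_matrix_entries` are hypothetical and chosen only for this illustration. Real implementations use batched tensor operations, but the number of scores per head is the same.

```python
import math


def attention_scores(queries, keys):
    """Return the T x T matrix of scaled dot-product scores (before softmax).

    `queries` and `keys` are lists of T vectors, each of dimension d.
    Every query is compared with every key, so the result has T * T entries.
    """
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    return [
        [scale * sum(q_i * k_i for q_i, k_i in zip(q, k)) for k in keys]
        for q in queries
    ]


def score_matrix_entries(seq_len):
    """Number of attention scores for one head at a given sequence length."""
    return seq_len * seq_len


# Three toy token vectors of dimension 2 (illustrative values only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
scores = attention_scores(tokens, tokens)
```

Doubling the sequence length quadruples the number of scores: `score_matrix_entries(2048)` is four times `score_matrix_entries(1024)`. This growth is what makes long sequences expensive.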

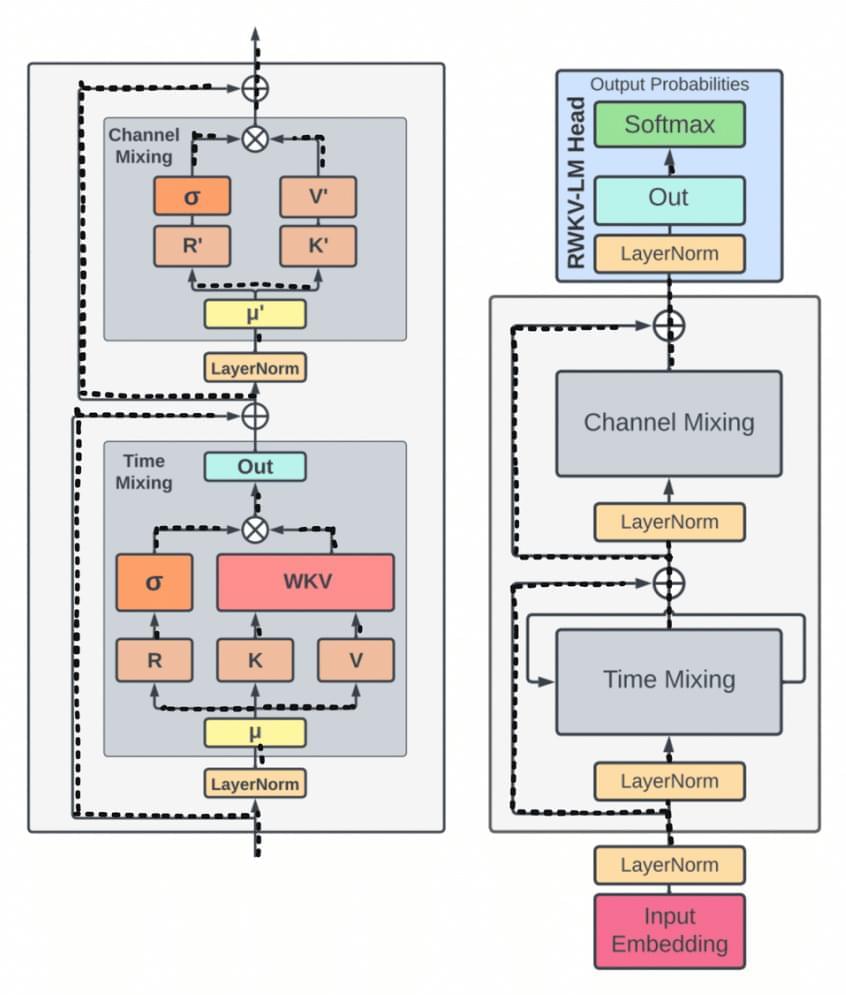

A group of researchers addressed these issues by introducing the Receptance Weighted Key Value (RWKV) model, which combines the best features of RNNs and Transformers while avoiding their major shortcomings. RWKV preserves the Transformer's strengths, such as parallelized training and robust scalability, while eliminating the memory bottleneck and quadratic scaling typical of Transformers. It achieves this through efficient linear scaling.
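
The following sketch illustrates the general idea of trading a quadratic weighted sum over the whole history for a linear-time recurrence with a fixed-size state. It is not RWKV's actual formulation: it is a simplified, scalar, exponentially decayed weighted average, and the names `decayed_average_recurrent`, `decayed_average_quadratic`, and the `decay` parameter are assumptions made for this example. Details such as gating and numerical stabilization are omitted.

```python
import math


def decayed_average_recurrent(keys, values, decay):
    """Linear-time version: carries only two running sums as state."""
    num, den = 0.0, 0.0
    outputs = []
    for k, v in zip(keys, values):
        weight = math.exp(k)
        # Older contributions shrink by exp(-decay) at every step.
        num = math.exp(-decay) * num + weight * v
        den = math.exp(-decay) * den + weight
        outputs.append(num / den)
    return outputs


def decayed_average_quadratic(keys, values, decay):
    """Reference version: recomputes the weighted sum over all past steps."""
    outputs = []
    for t in range(len(keys)):
        weights = [math.exp(-(t - i) * decay + keys[i]) for i in range(t + 1)]
        weighted = sum(w * v for w, v in zip(weights, values))
        outputs.append(weighted / sum(weights))
    return outputs


# Toy inputs (illustrative values only).
keys = [0.1, -0.3, 0.5, 0.0]
values = [1.0, 2.0, -1.0, 4.0]
fast = decayed_average_recurrent(keys, values, decay=0.5)
slow = decayed_average_quadratic(keys, values, decay=0.5)
```

Both functions produce the same outputs, but the recurrent one does a constant amount of work per step and keeps only `num` and `den` between steps, while the reference one revisits the entire history at every position.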

Only international researchers in contained labs should be able to access such articles. Would we agree to open nuclear weapons designs? Some say that AI is potentially more dangerous. Why is this information being published openly? The Transformer paper led to AI that is being used to trick people (fraud). More advanced AI designs will do much worse. These designs should only be in the hands of well-overseen researchers and developers. We can let the fruits of this research be available while internationally controlling the weights so that the brains are not used for harm.