Latest update and explainer on the (Absent) Primal Eye Framework: “Vertebrates and the Pineal Complex”

Primal Eye / Posthuman Psychology series.

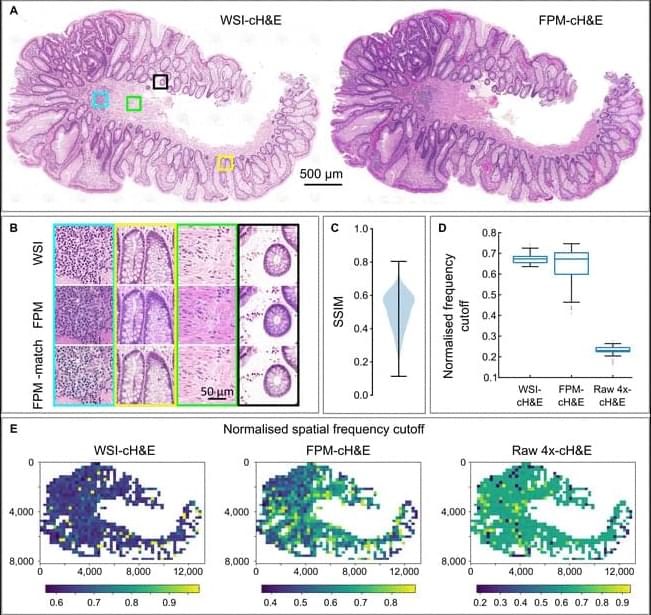

Benjamin D. Humphreys provides an Editor’s Note on the study by Christine V. Behm et al.: https://doi.org/10.1172/JCI197807

The figure from Behm et al. shows nephrocalcinosis (calcium deposits in the kidney tissue) in older kidney-specific Cldn2-KO mice.

Deposits of hydroxyapatite called Randall’s plaques are found in the renal papilla of calcium oxalate kidney stone formers and likely serve as the nidus for stone formation, but their pathogenesis is unknown. Claudin-2 is a paracellular ion channel that mediates calcium reabsorption in the renal proximal tubule. To investigate the role of renal claudin-2, we generated kidney tubule–specific claudin-2 conditional KO mice (KS-Cldn2 KO). KS-Cldn2 KO mice exhibited transient hypercalciuria in early life. Normalization of urine calcium was accompanied by a compensatory increase in expression and function of renal tubule calcium transporters, including in the thick ascending limb. Despite normocalciuria, KS-Cldn2 KO mice developed papillary hydroxyapatite deposits, beginning at 6 months of age, that resembled Randall’s plaques and tubule plugs.
