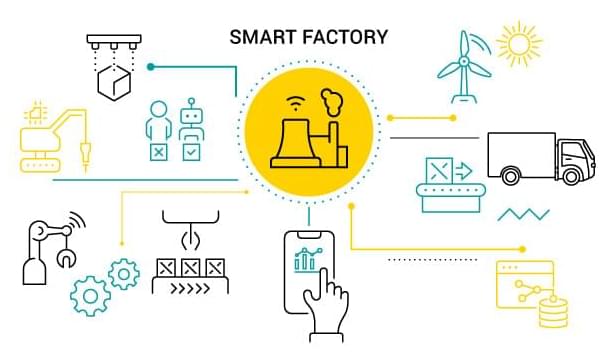

Smart factories could be very useful in the metaverse, where workers could operate factory machines remotely over the Internet.

As interconnected, intelligent manufacturing gains ground, competing in the world of Industry 4.0 can be challenging if you're not at the leading edge of innovation.

Seeing the growing economic impact of the Industrial Internet of Things (IIoT) around the globe, many professionals and investors have been asking themselves whether the industry is on the verge of a technological revolution. Judging from the numbers and predictions, however, there is concrete evidence that smart manufacturing has already burst into corporate consciousness. According to IDC, global spending on the Internet of Things in 2020 is projected to top $840 billion if it maintains a compound annual growth rate (CAGR) of 12.6%. A large share of this expenditure will almost certainly go toward bringing IoT into every type of industry, manufacturing in particular.

But forecasts and statistics are not the only signs that the Industrial Internet of Things is gaining traction across virtually all business sectors. Having already proven itself a turning point in manufacturing, IIoT brings reliable machine-to-machine communication, the assurance of preventive maintenance, and the insight of big data analytics. In other words, the IIoT revolution has already begun.