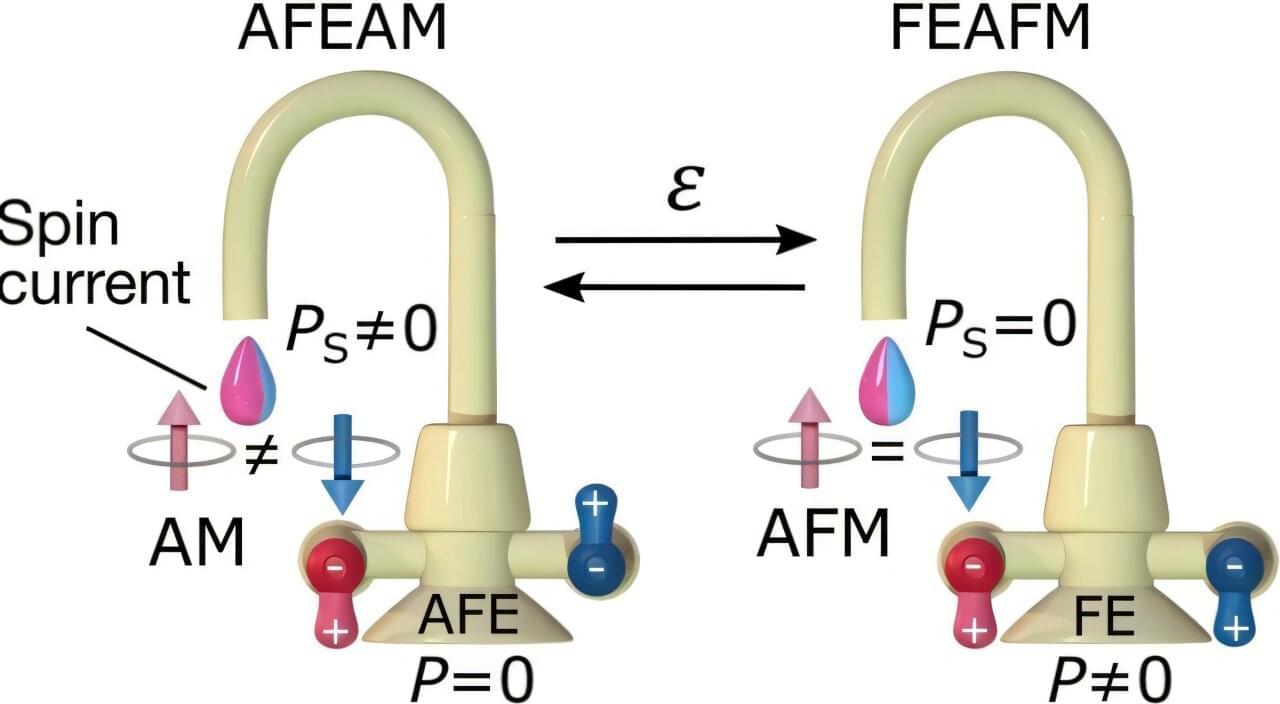

Controlling magnetism in a device is not easy: it typically requires large magnetic fields or substantial electric currents, and the hardware needed to supply them tends to be bulky, slow, expensive, and wasteful of energy. That may soon change, thanks to the recent discovery of altermagnets. Scientists are now proposing designs for efficient switches to control magnetism in devices.

Magnetism has traditionally come in two varieties, ferromagnetism and antiferromagnetism, distinguished by how the magnetic moments in a material are aligned. Early last year, physicists announced experimental evidence for a third variety: altermagnetism, which arises from a distinct combination of spin arrangement and crystal symmetry. Researchers are now learning how to tune altermagnets, bringing the field closer to practical applications.

We’re all familiar with ferromagnetism (FM), as in a refrigerator magnet or a compass needle, in which the magnetic moments of the atoms in a crystal line up in parallel. A second class, antiferromagnetism (AFM), was identified about a hundred years ago. In an antiferromagnet, the magnetic moments point in opposite directions on different sublattices of the crystal, so their contributions cancel and the crystal has no net magnetization. This ordered arrangement usually appears only at low temperatures, below a critical point known as the Néel temperature.
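As a rough illustration of why the two classes differ in net magnetization, consider a toy one-dimensional chain in which each atom's moment is simply +1 (up) or -1 (down). This is a simplifying assumption: it ignores real moment magnitudes, three-dimensional crystal structure, and temperature, and the function name `net_magnetization` is hypothetical, chosen only for this sketch.

```python
# Toy model (assumption): each site carries a moment of +1 (up) or -1 (down).
# Net magnetization here is the average moment per site.

def net_magnetization(moments):
    """Return the average magnetic moment per site for a list of +1/-1 values."""
    return sum(moments) / len(moments)

n_sites = 8

# Ferromagnet: every moment points the same way.
ferro = [1] * n_sites

# Antiferromagnet: even and odd sites form two sublattices with opposite moments.
antiferro = [1 if i % 2 == 0 else -1 for i in range(n_sites)]

print(net_magnetization(ferro))      # 1.0
print(net_magnetization(antiferro))  # 0.0
```

In the ferromagnetic chain every moment adds to the total, while in the antiferromagnetic chain each up moment on one sublattice is cancelled by a down moment on the other.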