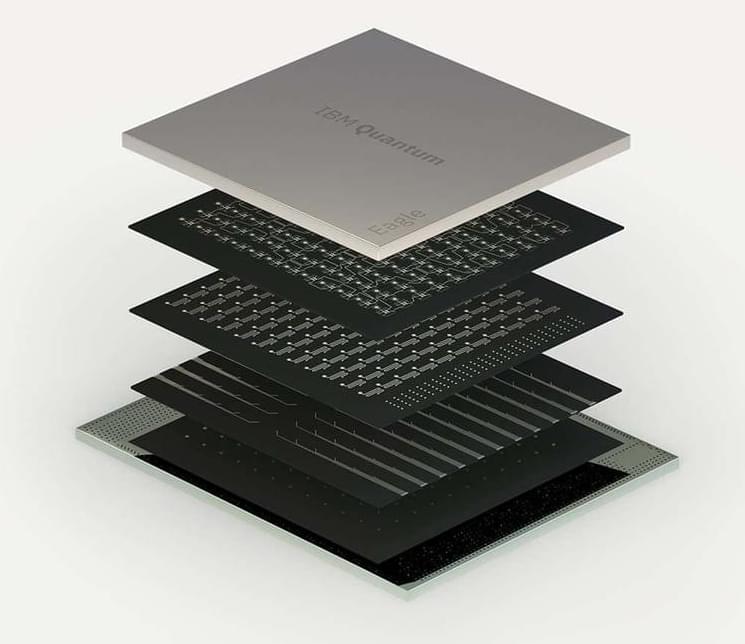

The company now plans to power its quantum computers with a minimum of 127 qubits.

IBM’s Eagle quantum computer has outperformed a conventional supercomputer at complex mathematical calculations. According to a company press release, this is also the first demonstration of a quantum computer providing accurate results at a scale of 100+ qubits.

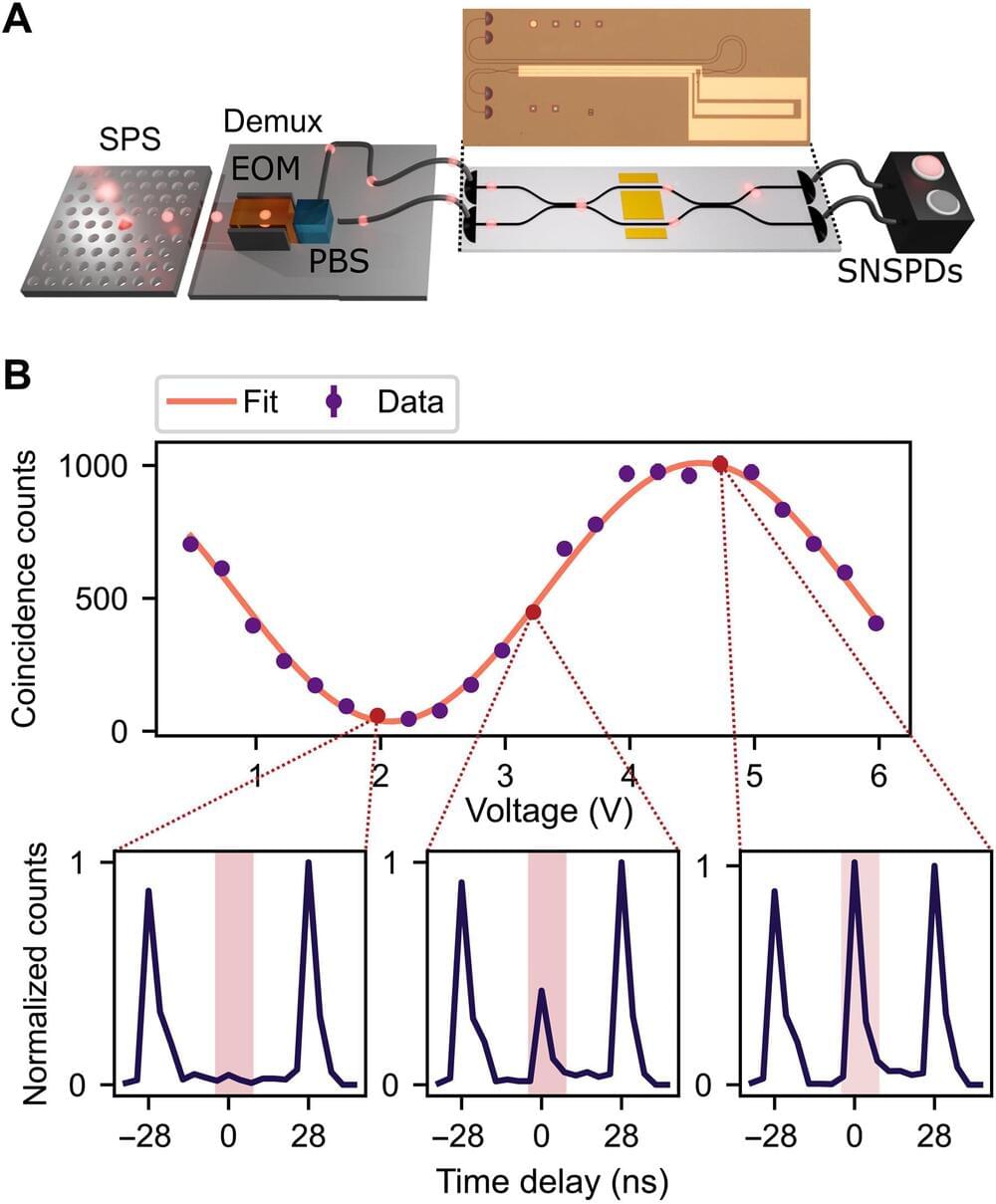

Qubits, short for quantum bits, are the quantum-computing analog of classical bits. Each is the smallest unit of information in its kind of computer. However, unlike a bit, which can exist in only one of two states, 0 or 1, a qubit can represent either state or a superposition of the two in any proportion.
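
To make the idea of "any proportion" concrete, here is a minimal Python sketch of a single qubit. It is an illustration only, not IBM's software: the function name `measurement_probabilities` and the two-number representation are assumptions chosen for this example. The state is described by two amplitudes, one for 0 and one for 1, and the chance of reading each value when the qubit is measured is the squared magnitude of its amplitude.

```python
import math


def measurement_probabilities(alpha, beta):
    """Return (P(0), P(1)) for a qubit with amplitudes alpha and beta.

    Hypothetical helper for illustration: alpha weights state 0 and
    beta weights state 1. The amplitudes are rescaled so that the two
    probabilities add up to 1.
    """
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    a, b = alpha / norm, beta / norm
    return abs(a) ** 2, abs(b) ** 2


# A classical-like state: the qubit is definitely 0.
p0_definite, p1_definite = measurement_probabilities(1, 0)

# An equal superposition: 0 and 1 are equally likely when measured.
p0_equal, p1_equal = measurement_probabilities(1, 1)

# An uneven superposition: 1 is three times as likely as 0.
p0_uneven, p1_uneven = measurement_probabilities(1, math.sqrt(3))
```

A classical bit corresponds only to the first case; the other two have no classical counterpart, which is what the "any proportion" wording refers to.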