The smallest artificial spin ice ever created could be part of novel low-power HPC.

Circa 2021

Swiss researchers said Monday they had calculated the mathematical constant pi to a new world-record level of exactitude, hitting 62.8 trillion figures using a supercomputer.

“The calculation took 108 days and nine hours” using a supercomputer, the Graubuenden University of Applied Sciences said in a statement.

Its efforts were “almost twice as fast as the record Google set using its cloud in 2019, and 3.5 times as fast as the previous world record in 2020”, according to the university’s Center for Data Analytics, Visualization and Simulation.
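To put the quoted figures in perspective, the average throughput of the record run can be worked out from the digit count and the runtime alone. The sketch below is only back-of-the-envelope arithmetic on the numbers reported above; the function name `digits_per_second` is a hypothetical helper, not something from the university's software.

```python
# Rough arithmetic on the figures quoted in the statement:
# 62.8 trillion digits computed in 108 days and nine hours.

def digits_per_second(digits: float, days: int, hours: int) -> float:
    """Average digits produced per second over the whole run (hypothetical helper)."""
    seconds = days * 86_400 + hours * 3_600
    return digits / seconds

rate = digits_per_second(62.8e12, days=108, hours=9)
print(f"{rate / 1e6:.2f} million digits per second on average")
```

This is an average over the full run and says nothing about how the work was distributed between computation and verification.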

If you need any proof that it doesn’t take the most advanced chip manufacturing processes to create an exascale-class supercomputer, you need look no further than the Sunway “OceanLight” system housed at the National Supercomputing Center in Wuxi, China.

Some of the architectural details of the OceanLight supercomputer came to our attention as part of a paper published by Alibaba Group, Tsinghua University, DAMO Academy, Zhejiang Lab, and Beijing Academy of Artificial Intelligence. The paper describes running a pretrained machine learning model called BaGuaLu across more than 37 million cores with 14.5 trillion parameters (presumably with FP32 single precision), with the capability to scale to 174 trillion parameters, approaching what is called “brain-scale,” where the number of parameters starts approaching the number of synapses in the human brain. But, as it turns out, some of these architectural details were hinted at in three of the six nominations for the Gordon Bell Prize last fall, which we covered here. To our chagrin and embarrassment, we did not dive into the details of the architecture at the time (we had not seen that they had been revealed), and the BaGuaLu paper gives us a chance to circle back.
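For a sense of scale, the parameter counts can be turned into a rough memory footprint. The sketch below assumes four bytes per parameter, following the article's own guess of FP32, and counts the weights only; optimizer state, gradients, and activations are ignored. The helper name `weight_footprint_tb` is hypothetical.

```python
# Back-of-the-envelope weight storage for the quoted parameter counts.
# Assumption: FP32, i.e. 4 bytes per parameter (the article says "presumably").

BYTES_PER_FP32 = 4

def weight_footprint_tb(num_params: float, bytes_per_param: int = BYTES_PER_FP32) -> float:
    """Storage for the weights alone, in terabytes (10**12 bytes)."""
    return num_params * bytes_per_param / 1e12

for label, params in [("14.5 trillion parameters", 14.5e12),
                      ("174 trillion parameters", 174e12)]:
    print(f"{label}: about {weight_footprint_tb(params):.0f} TB of weights")
```

Under that assumption the weights alone come to roughly 58 TB and 696 TB respectively, which is why a model of this size has to be spread across a very large number of nodes.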

Before this slew of papers was announced with details on the new Sunway many-core processor, we did take a stab at figuring out how the National Research Center of Parallel Computer Engineering and Technology (known as NRCPC) might build an exascale system, scaling up from the SW26010 processor used in the Sunway “TaihuLight” machine that took the world by storm back in June 2016. The 260-core SW26010 processor was etched by Chinese foundry Semiconductor Manufacturing International Corporation using 28 nanometer processes – not exactly cutting edge. And the SW26010-Pro processor, etched using 14 nanometer processes, is not on an advanced node either, but China is perfectly happy to burn a lot of coal to power and cool the OceanLight kicker system based on it. (That system is also known as the Sunway exascale system or the New Generation Sunway supercomputer.)

Mar 31, 2022

Our 91st episode with a summary and discussion of last week’s big AI news!

Outline:

Applications & Business.

Meet the DeepMind mafia: These 18 alumni from Google’s AI research lab are raising millions for their own startups, from climate to crypto.

Nvidia unveils new technology to speed up AI, launches new supercomputer.

From a performance standpoint we know building a homebrew Raspberry Pi cluster doesn’t make a lot of sense, as even a fairly run of the mill desktop x86 machine is sure to run circles around it. That said, there’s an argument to be made that rigging up a dozen little Linux boards gives you a compact and affordable playground to experiment with things like parallel computing and load balancing. Is it a perfect argument? Not really. But if you’re anything like us, the whole thing starts making a lot more sense when you realize your cluster of Pi Zeros can be built to look like the iconic Cray-1 supercomputer.

This clever 3D printed enclosure comes from [Kevin McAleer], who says he was looking to learn more about deploying software using Ansible, Docker, Flask, and other modern frameworks with fancy sounding names. After somehow managing to purchase a dozen Raspberry Pi Zero 2s, he needed a way to keep them all in a tidy package. Beyond looking fantastically cool, the symmetrical design of the Cray-1 allowed him to design his miniature version in such a way that each individual wedge is made up of the same set of 3D printed parts.

In the video after the break, [Kevin] explains some of the variations the design went through. We appreciate his initial goal of making it so you didn’t need any additional hardware to assemble the thing, but in the end you’ll need to pick up some M2.5 standoffs and matching screws if you want to build one yourself. We particularly like how you can hide all the USB power cables inside the lower “cushion” area with the help of some 90-degree cables, leaving the center core open.

The smartest scientists in both China and the United States are working hard on creating the fastest hardware for future supercomputers in the exaflop and zettaflop performance range. Companies such as Intel, Nvidia and AMD are continuing Moore’s Law with the help of amazing new processes from TSMC. These supercomputers are secret government projects, built in the hope of beating each other in the tech industry and preparing for artificial intelligence.

–

TIMESTAMPS:

00:00 A new Superpower in the making.

00:46 A Brain-Scale Supercomputer?

02:47 China Tech vs USA Tech.

05:30 Chinese Semiconductor Technology.

07:39 Last Words.


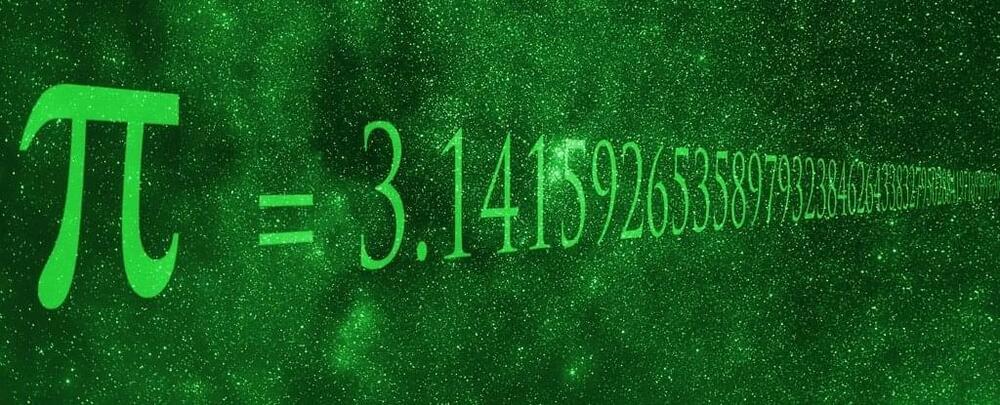

Researchers have proposed a novel principle for a unique kind of computer that would use analog technology in place of digital or quantum components.

The unique device would be able to carry out complex computations extremely quickly—possibly, even faster than today’s supercomputers and at vastly less cost than any existing quantum computers.

The principle uses time delay to overcome the barriers in optimization problems (choosing the best option from a large number of possibilities), such as Google searches—which aim to find the optimal results matching the search request.

The human brain, fed on just the calorie input of a modest diet, easily outperforms state-of-the-art supercomputers powered by full-scale station energy inputs. The difference stems from the multiple states of brain processes versus the two binary states of digital processors, as well as the ability to store information without power consumption—non-volatile memory. These inefficiencies in today’s conventional computers have prompted great interest in developing synthetic synapses for use in computers that can mimic the way the brain works. Now, researchers at King’s College London, UK, report in ACS Nano Letters an array of nanorod devices that mimic the brain more closely than ever before. The devices may find applications in artificial neural networks.

Efforts to emulate biological synapses have revolved around types of memristors with different resistance states that act like memory. However, unlike the brain, the devices reported so far have all needed a reverse polarity electrical voltage to reset them to the initial state. “In the brain a change in the chemical environment changes the output,” explains Anatoly Zayats, a professor at King’s College London who led the team behind the recent results. The King’s College London researchers have now been able to demonstrate this brain-like behavior in their synthetic synapses as well.

Zayats and team built an array of gold nanorods topped with a polymer (poly-L-histidine, PLH) junction to a metal contact. Either light or an electrical voltage can excite plasmons—collective oscillations of electrons. The plasmons release hot electrons into the PLH, gradually changing the chemistry of the polymer and hence its level of conductivity or light emissivity. How the polymer changes depends on whether oxygen or hydrogen surrounds it. A chemically inert nitrogen environment preserves the state without any energy input, so the device acts as non-volatile memory.

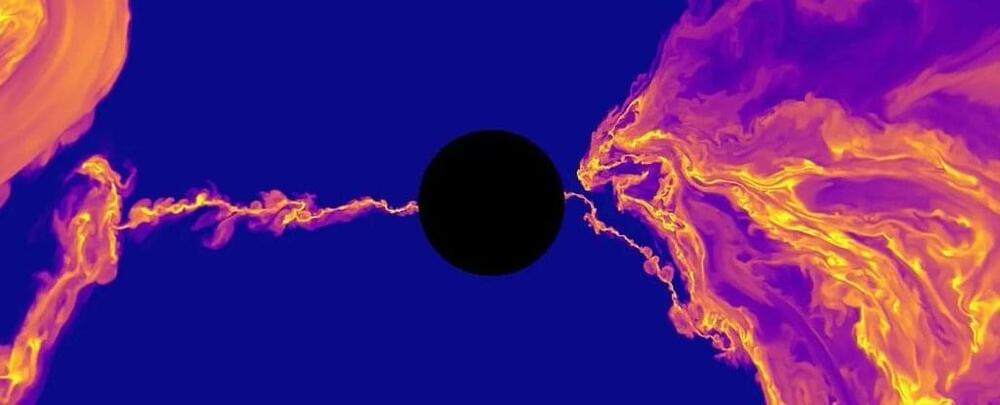

While black holes might always be black, they do occasionally emit some intense bursts of light from just outside their event horizon. Previously, what exactly caused these flares had been a mystery to science.

That mystery was solved recently by a team of researchers that used a series of supercomputers to model black holes’ magnetic fields in far more detail than any previous effort. The simulations point to the breaking and remaking of super-strong magnetic fields as the source of the super-bright flares.

Scientists have known for some time that black holes are surrounded by powerful magnetic fields. Typically these are just one part of a complex dance of forces, material, and other phenomena that exist around a black hole.

In their pursuit of understanding cosmic evolution, scientists rely on a two-pronged approach. Using advanced instruments, astronomical surveys attempt to look farther and farther into space (and back in time) to study the earliest periods of the Universe. At the same time, scientists create simulations that attempt to model how the Universe has evolved based on our understanding of physics. When the two match, astrophysicists and cosmologists know they are on the right track!

In recent years, increasingly detailed simulations have been run on increasingly sophisticated supercomputers, yielding increasingly accurate results. Recently, an international team of researchers led by the University of Helsinki conducted the most accurate simulations to date. Known as SIBELIUS-DARK, these simulations accurately predicted the evolution of our corner of the cosmos from the Big Bang to the present day.

In addition to the University of Helsinki, the team included researchers from the Institute for Computational Cosmology (ICC) and the Centre for Extragalactic Astronomy at Durham University, the Lorentz Institute for Theoretical Physics at Leiden University, the Institut d’Astrophysique de Paris, and The Oskar Klein Centre at Stockholm University. The team’s results are published in the Monthly Notices of the Royal Astronomical Society.